generator

Generate LookML.

lookml-generator

LookML Generator for Glean and Mozilla Data.

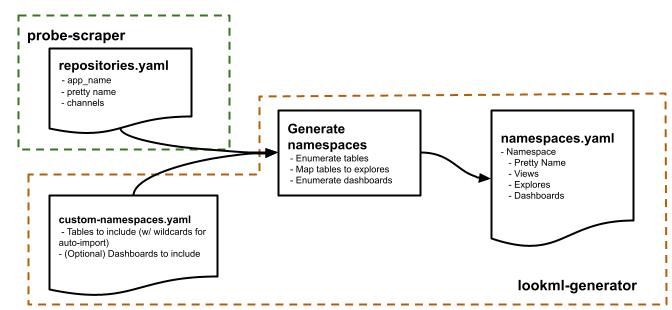

The lookml-generator has two important roles:

- Generate a listing of all Glean/Mozilla namespaces and their associated BigQuery tables

- From that listing, generate LookML for views, explores, and dashboards and push those to the Look Hub project

Generating Namespace Listings

At Mozilla, a namespace is a single functional area that is represented in Looker with (usually) one model*.

Each Glean application is self-contained within a single namespace, containing the data from across that application's channels.

We also support custom namespaces, which can use wildcards to denote their BigQuery datasets and tables. These are described in custom-namespaces.yaml.

* Though namespaces are not limited to a single model, we advise it for clarity's sake.
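
To illustrate how a wildcard in a custom namespace might select BigQuery tables, here is a minimal sketch using Python's standard fnmatch module. The table names, the pattern, and the match_tables helper are hypothetical, and the matching that lookml-generator actually performs may differ.

```python
from fnmatch import fnmatchcase


def match_tables(pattern: str, tables: list[str]) -> list[str]:
    """Return the tables whose fully qualified name matches a shell-style pattern."""
    return [table for table in tables if fnmatchcase(table, pattern)]


# Hypothetical table listing, for illustration only.
available_tables = [
    "mozdata.example_dataset.events_v1",
    "mozdata.example_dataset.events_v2",
    "mozdata.other_dataset.events_v1",
]

# "*" matches any run of characters, so both events tables in example_dataset match.
matched = match_tables("mozdata.example_dataset.events_*", available_tables)
print(matched)
```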

Adding Custom Namespaces

Custom namespaces must be defined explicitly in custom-namespaces.yaml. For each namespace, specify the views and explores to be generated.

Make sure the custom namespace is _not_ listed in namespaces-disallowlist.yaml.

Once changes have been approved and merged, the lookml-generator changes can be deployed.

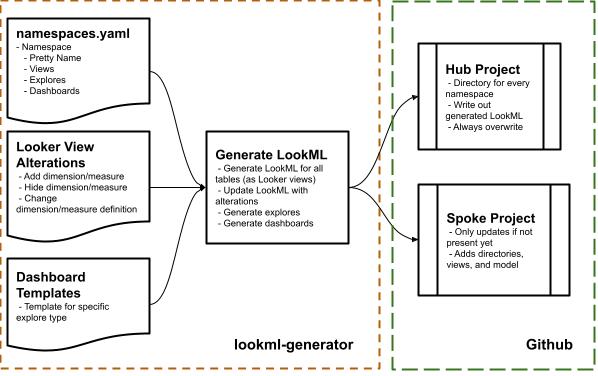

Generating LookML

Once we know which tables are associated with which namespaces, we can generate LookML files and update our Looker instance.

Lookml-generator generates LookML based on both the BigQuery schema and manual changes. For example, we would want to add city drill-downs for all country fields.
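
The idea of combining schema-derived fields with manual rules can be sketched as follows. This is only an illustration: the dimension_for_field helper, the type mapping, and the dict layout are assumptions, not the generator's real code.

```python
def dimension_for_field(field_name: str, field_type: str) -> dict:
    """Build a LookML-like dimension (as a plain dict) from one schema field."""
    # Hypothetical mapping from BigQuery column types to LookML dimension types.
    lookml_types = {
        "STRING": "string",
        "INTEGER": "number",
        "FLOAT": "number",
        "BOOLEAN": "yesno",
    }
    dimension = {
        "name": field_name,
        "type": lookml_types.get(field_type, "string"),
        "sql": "${TABLE}." + field_name,
    }
    # Manual rule layered on top of the schema: country fields drill down to city.
    if field_name == "country" or field_name.endswith("_country"):
        dimension["drill_fields"] = [field_name.replace("country", "city")]
    return dimension


# Hypothetical two-column schema.
schema = [("country", "STRING"), ("sample_id", "INTEGER")]
dimensions = [dimension_for_field(name, kind) for name, kind in schema]
print(dimensions)
```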

Pushing Changes to Dev Branches

In addition to pushing new LookML to the main branch, we reset the dev branches to also point to the commit at main. This only happens during production deployment runs.

To automate this process for your dev branch, add it to this file.

You can edit that file in your browser. Open a PR and tag data-looker for review.

You can find your dev branch by going to Looker, entering development mode, opening the looker-hub project, clicking the "Git Actions" icon, and finding your personal branch in the "Current Branch" dropdown.

Setup

Ensure Python 3.11+ is available on your machine (see this guide for instructions if you're on a Mac and haven't installed anything other than the default system Python).

You will also need the Google Cloud SDK with valid credentials. After setting up the Google Cloud SDK, run:

gcloud config set project moz-fx-data-shared-prod

gcloud auth login --update-adc

Install requirements in a Python venv

python3.11 -m venv venv/

venv/bin/python -m pip install --no-deps -r requirements.txt

Update requirements when they change with pip-sync

venv/bin/pip-sync

Set up pre-commit hooks

venv/bin/pre-commit install

Run unit tests and linters

venv/bin/pytest

Run integration tests

venv/bin/pytest -m integration

Note that the integration tests require a valid login to BigQuery to succeed.

Testing generation locally

You can test namespace generation by running:

./bin/generator namespaces

To generate the actual LookML (in looker-hub), run:

./bin/generator lookml

Container Development

Most code changes will not require changes to the generation script or container.

However, you can test it locally. The following script will test generation, pushing a new branch to the looker-hub repository:

export HUB_BRANCH_PUBLISH="yourname-generation-test-1"

export GIT_SSH_KEY_BASE64=$(cat ~/.ssh/id_rsa | base64)

make build && make run

Deploying new lookml-generator changes

lookml-generator runs daily to update the looker-hub and looker-spoke-default code. Changes to the underlying tables should automatically propagate to their respective views and explores.

Airflow updates the two repositories each morning.

If you need your changes deployed quickly, wait for the container to build after you merge to main, and re-run the task in Airflow (lookml_generator, in the probe_scraper DAG).

generate Command Explained - High Level Explanation

When make run is executed, a Docker container is started from the latest lookml-generator Docker image on your machine. It runs the generate script using the configuration defined at the top of the script, unless overridden by environment variables (see the Container Development section above).
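
The "defaults unless overridden by environment variables" pattern can be sketched in Python as below. The generate script itself is not shown here, so the default value and the load_config helper are hypothetical; only the HUB_BRANCH_PUBLISH variable name comes from the Container Development section.

```python
import os

# Hypothetical defaults; the real script defines its own values.
DEFAULTS = {
    "HUB_BRANCH_PUBLISH": "example-default-branch",
}


def load_config(environ=None) -> dict:
    """Return the defaults, replaced by any environment variable of the same name."""
    environ = os.environ if environ is None else environ
    return {key: environ.get(key, default) for key, default in DEFAULTS.items()}


# Simulate `export HUB_BRANCH_PUBLISH="yourname-generation-test-1"`.
config = load_config({"HUB_BRANCH_PUBLISH": "yourname-generation-test-1"})
print(config["HUB_BRANCH_PUBLISH"])
```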

Next, the process authenticates with GitHub, clones the looker-hub repository, and creates the branch defined in the HUB_BRANCH_PUBLISH config variable both locally and on the remote. It then checks out the looker-hub base branch and pulls it from the remote.

Once the setup is done, the process generates namespaces.yaml and uses it to generate LookML code. A git diff is executed to ensure that files that already exist in the base branch are not being modified. If changes are detected, the process exits with an error code. Otherwise, it creates a commit and pushes it to the remote dev branch created earlier.
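
One way such a check could work is to inspect the output of git diff --name-status and reject any entry that is not a pure addition. The sketch below assumes that approach; the exact git invocation used by the generate script is not documented here, and the file paths and the modified_existing_files helper are hypothetical.

```python
def modified_existing_files(name_status_output: str) -> list[str]:
    """Return paths that `git diff --name-status` reports as anything other than added."""
    changed = []
    for line in name_status_output.splitlines():
        if not line.strip():
            continue
        # Each line looks like "<status>\t<path>", e.g. "M\tfoo.view.lkml".
        status, _, path = line.partition("\t")
        # "A" marks a newly added file; any other status touches an existing file.
        if not status.startswith("A"):
            changed.append(path)
    return changed


# Hypothetical diff output: one new file and one modified file.
sample = "A\tnew_namespace/views/events.view.lkml\nM\texisting/views/metrics.view.lkml\n"
offenders = modified_existing_files(sample)
if offenders:
    print("Refusing to publish; existing files changed:", offenders)
```

A real script would exit with a non-zero status at this point instead of printing.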

When following the Container Development steps, the process results in a dev branch in looker-hub containing the newly generated LookML code. To test it, go to Looker, switch to development mode, and select the dev branch just created or updated by this command; Looker will then use the newly generated LookML. Otherwise, changes merged into main in this repo become available on looker-hub main when the scheduled Airflow job runs.

namespaces.yaml

We use namespaces.yaml as the declarative listing of the Looker namespaces generated by this repository.

Each entry in namespaces.yaml represents a namespace, and has the following properties:

- owners (string): The owners are the people who will have control over the associated Namespace folder in Looker. It is up to them to decide which dashboards to "promote" to their shared folder.

- pretty_name (string): The pretty name is used in most places where the namespace's name is seen, e.g. in the explore drop-down and folder name.

- glean_app (bool): Whether or not this namespace represents a Glean Application.

- connection (optional string): The database connection to use, as named in Looker. Defaults to telemetry.

- views (object): The LookML View files that will be generated. More detailed info below.

- explores (object): The LookML Explore files that will be generated. More detailed info below.
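
As a rough mental model, one entry could be represented in Python as follows. The NamespaceEntry class is hypothetical and not part of the generator; in particular, modeling owners as a list of strings is an assumption. Only the telemetry default for connection comes from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class NamespaceEntry:
    """Hypothetical in-memory model of one namespaces.yaml entry."""

    owners: list[str]  # assumption: one string per owner
    pretty_name: str
    glean_app: bool
    views: dict = field(default_factory=dict)
    explores: dict = field(default_factory=dict)
    connection: str = "telemetry"  # documented default


entry = NamespaceEntry(
    owners=["owner@example.com"],
    pretty_name="Example App",
    glean_app=True,
)
print(entry.connection)
```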

views

Each View entry is actually a LookML view file that will be generated. Each LookML View file can contain multiple Looker Views; the idea here is that these views are related and used together. By convention, the first view in the file is the base view (i.e. associated join views follow after the explore containing the base dimension and metrics).

- type: The type of the view, e.g. glean_ping_view.

- tables: This field is used in a few ways, depending on the associated View type.

For GleanPingView and PingView, tables represents all of the associated channels for that view. Each table will have a channel and table entry. Only a single view will be created in the LookML File.

tables:

- channel: release

  table: mozdata.org_mozilla_firefox.metrics

- channel: nightly

  table: mozdata.org_mozilla_fenix.metrics

For ClientCountView and GrowthAccountingView, tables will have a single entry, with the name of the table the Looker View is based off of. Only a single Looker View will be created.

tables:

- table: mozdata.org_mozilla_firefox.baseline_clients_last_seen

For FunnelAnalysisView, only the first list entry is used; inside that entry, each value represents a Looker View that is created. The key is the name of the view, the value is the Looker View or BQ View it is derived from.

In the following example, 4 views will be created in the view file: funnel_analysis, event_types, event_type_1 and event_type_2.

tables:

- funnel_analysis: events_daily_table

  event_types: `mozdata.glean_app.event_types`

  event_type_1: event_types

  event_type_2: event_types
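
The rules above for how tables maps to Looker Views can be summarized in a short sketch. The looker_view_names helper is hypothetical, and the funnel_analysis_view type string is assumed by analogy with glean_ping_view; the funnel data mirrors the example above.

```python
def looker_view_names(view_name: str, view_type: str, tables: list[dict]) -> list[str]:
    """List the Looker Views a view file would contain, per the rules described above."""
    if view_type == "funnel_analysis_view":  # assumed type string
        # Only the first list entry is used; each key names one Looker View.
        return list(tables[0].keys())
    # The other view types described here produce a single Looker View.
    return [view_name]


funnel_tables = [
    {
        "funnel_analysis": "events_daily_table",
        "event_types": "`mozdata.glean_app.event_types`",
        "event_type_1": "event_types",
        "event_type_2": "event_types",
    }
]
funnel_views = looker_view_names("funnel_analysis", "funnel_analysis_view", funnel_tables)
print(funnel_views)
```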

explores

Each Explore entry is a single file, sometimes containing multiple explores within it (mainly for things like changing suggestions).

- type: The type of the explore, e.g. growth_accounting_explore.

- views: The views that this is based on. Generally, the allowed keys here are:

  - base_view: The base view is the one we are basing this Explore on, using view_name.

  - extended_view*: Any views we include in the base_view are added as these. It could be one (extended_view) or multiple (extended_view_1).

  - joined_view*: Any other view we are going to join to this one. _This is only required if the joined view is not defined in the same view file as base_view._

It may not necessarily be desirable to list all of the views and explores in namespaces.yaml (e.g. suggest explores specific to a view). In these cases, it is useful to adopt the convention where the first view is the primary view for the explore.
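
The key conventions for an explore's views can be checked with a few lines of Python. This is a sketch only: the classify_explore_views helper and the view names are hypothetical, and the generator may handle these keys differently.

```python
def classify_explore_views(views: dict) -> dict:
    """Group an explore's `views` mapping by the key conventions described above."""
    grouped = {"base_view": None, "extended_views": [], "joined_views": []}
    for key, view_name in views.items():
        if key == "base_view":
            grouped["base_view"] = view_name
        elif key.startswith("extended_view"):
            # Covers both extended_view and numbered forms like extended_view_1.
            grouped["extended_views"].append(view_name)
        elif key.startswith("joined_view"):
            grouped["joined_views"].append(view_name)
        else:
            raise ValueError(f"Unexpected key in explore views: {key}")
    return grouped


# Hypothetical explore definition.
example_views = {
    "base_view": "events",
    "extended_view_1": "event_types",
    "joined_view": "clients",
}
grouped = classify_explore_views(example_views)
print(grouped)
```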

"""Generate LookML.

.. include:: ../README.md
.. include:: ../architecture/namespaces_yaml.md
"""

__docformat__ = "restructuredtext"

import sys
import warnings

import click
from google.auth.exceptions import DefaultCredentialsError
from google.cloud import bigquery

from .lookml import lookml
from .namespaces import namespaces
from .spoke import update_spoke


def is_authenticated():
    """Check if the user is authenticated to GCP."""
    try:
        bigquery.Client()
    except DefaultCredentialsError:
        return False
    return True


def cli(prog_name=None):
    """Generate and run CLI."""
    if not is_authenticated():
        print(
            "Authentication to GCP required. Run `gcloud auth login --update-adc` "
            "and check that the project is set correctly."
        )
        sys.exit(1)

    commands = {
        "namespaces": namespaces,
        "lookml": lookml,
        "update-spoke": update_spoke,
    }

    @click.group(commands=commands)
    def group():
        """CLI interface for lookml automation."""

    warnings.filterwarnings(
        "ignore",
        "Your application has authenticated using end user credentials",
        module="google.auth._default",
    )

    group(prog_name=prog_name)

def is_authenticated():

Check if the user is authenticated to GCP.

def cli(prog_name=None):

Generate and run CLI.