Kuma Report, October 2017

Here’s what happened in October in Kuma, the engine of MDN:

- MDN Migrated to AWS

- Continued Migration of Browser Compatibility Data

- Shipped tweaks and fixes

Here’s the plan for November:

- Ship New Compat Table to Beta Users

- Improve Performance of MDN and the Interactive Editor

- Update Localization of KumaScript Macros

I’ve also included an overview of the AWS migration project, and an introduction to our new AWS infrastructure in Kubernetes, which helps make this the longest Kuma Report yet.

Done in October

MDN Migrated to AWS

On October 10, we moved MDN from Mozilla’s SCL3 datacenter to a

Kubernetes cluster in

the AWS us-west2 (Oregon) region. The database move went well, but we

needed five times the web resources as the maintenance mode tests. We were

able to smoothly scale up in the four hours we budgeted for the

migration.

Dave Parfitt and

Ryan Johnson did a great job implementing

a flexible set of deployment tools and monitors, that allowed us to quickly

react to and handle the unexpected load.

The extra load was caused by mdn.mozillademos.org, which serves user uploads and wiki-based code samples. These untrusted resources are served from a different domain so that browsers will protect MDN users from the worst security issues. I excluded these resources from the production traffic tests, which turned out to be a mistake, since they represent 75% of the web traffic load after the move.

Ryan and I worked to get this domain behind a CDN. This included avoiding a

Vary: Cookie header that was being added to all responses

(PR 4469), and adding

caching headers to each endpoint

(PR 4462 and

PR 4476).

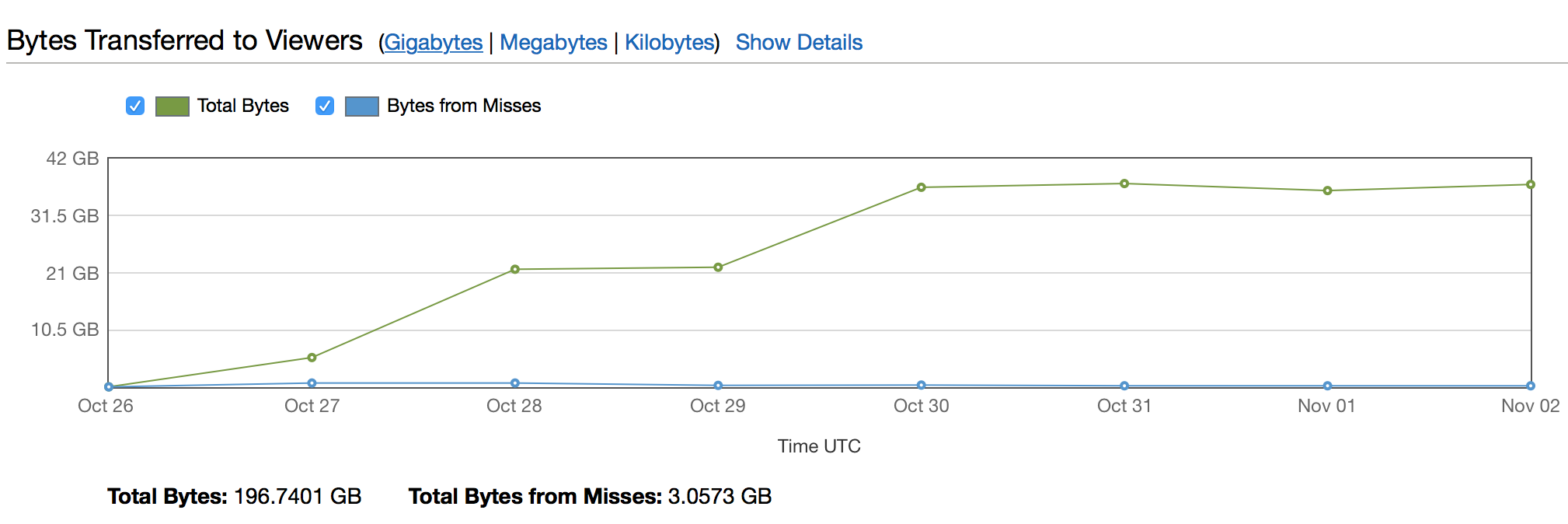

We added CloudFront to the domain on October 26. Now most of these resources are served from the CloudFront CDN, which is fast and often closer to the MDN user (for example, served to French users from a server in Europe rather than California). Over a week, 197 GB was served from the CDN, versus 3 GB (1.5%) served from Kuma.

There is a reduced load on Kuma as well. The CDN can handle many requests, so

Kuma doesn’t see them at all. The CDN periodically checks with Kuma that content

hasn’t changed, which often requires a short 304 Not Modified rather than

the full response.

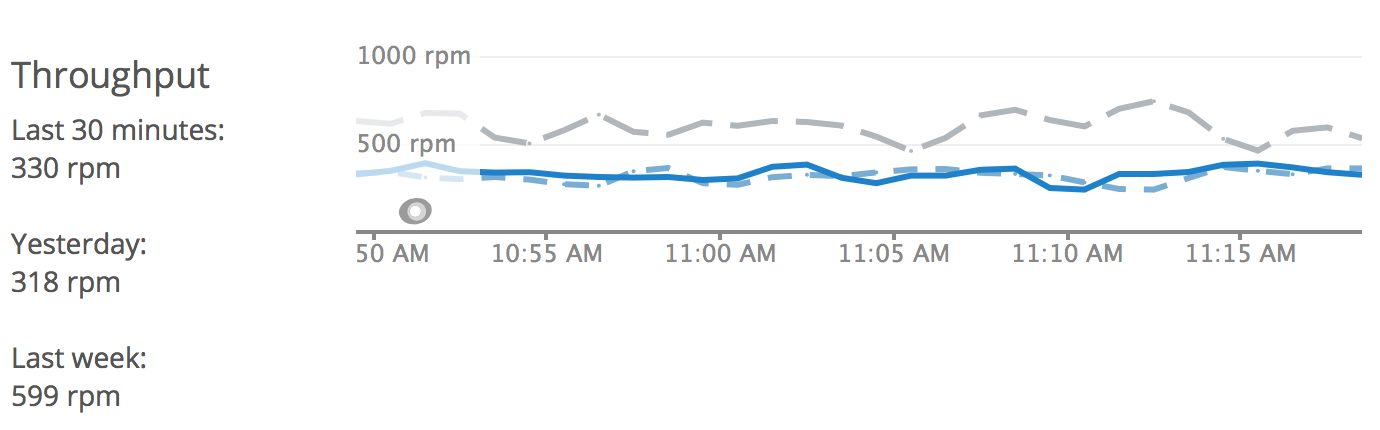

Backend requests for attachments have dropped by 45%:

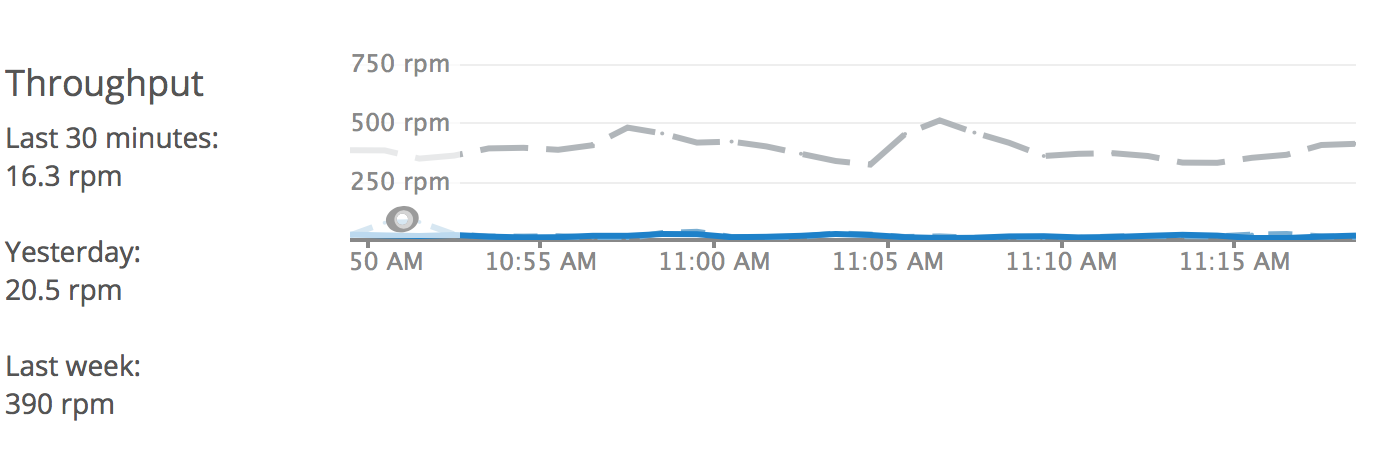

Code samples requests have dropped by 96%:

We continue to use a CDN for our static assets, but not for developer.mozilla.org itself. We’d have to do similar work to add caching headers, ideally splitting anonymous content from logged-in content. The untrusted domain had 4 endpoints to consider, while developer.mozilla.org has 35 to 50. We hope to do this work in 2018.

Continued Migration of Browser Compatibility Data

The Browser Compatibility Data project was the most active MDN project in October. Another 700 MDN pages use the BCD data, bringing us up to 2200 MDN pages, or 35.5% of the pages with compatibility data.

Daniel D. Beck continues migrating the CSS data, which will take at least the rest of 2017. wbamberg continues to update WebExtension and API data, which needs to keep up with browser releases. Chris Mills migrated the Web Audio data with 32 PRs, starting with PR 433. This data includes mixin interfaces, and prompted some discussion about how to represent them in BCD in issue #472.

Florian Scholz added MDN URLs in PR 344, which will help BCD integrators to link back to MDN for more detailed information.

Browser names and versions are an important part of the compatibility data, and Florian and Jean-Yves Perrier worked to formalize their representation in BCD. This includes standardization of the first version, preferring “33” to “33.0” (PR 447 and more), and fixing some invalid version numbers (PR 449 and more). In November, BCD will add more of this data, allowing automated validation of version data, and enabling some alternate ways to present compat data.

Florian continues to release a new NPM package each Monday, and enabled tag-based releases (PR 565) for the most recent 0.0.12 release. mdn-browser-compat-data had over 900 downloads last month.

Shipped Tweaks and Fixes

There were 276 PRs merged in October:

- 131 mdn/browser-compat-data PRs

- 42 mozilla/kuma PRs

- 35 mdn/kumascript PRs

- 32 mozmeao/infra PRs

- 23 mdn/data PRs

- 13 mdn/interactive-examples PRs

Many of these were from external contributors, including several first-time contributions. Here are some of the highlights:

- Edge doesn’t support

isIntersecting(BCD PR 430), from first-time contributor Ivan Čurić. - Add the PointerEvent api (BCD PR 484), from first-time contributor lpd-au.

- Split WebExt JSONs (BCD PR 488), from wbamberg.

- PointerEvents: Remove parentheses and add mdn_urls (BCD PR 491, issue #490), first of 3 PRs from first-time BCD contributor Maton Anthony.

- Change in

frame-ancestorssupport (BCD PR 505, Bug 1380755), from first-time contributor Jason Tarka. - Update let keyword notes (BCD PR 517), from first-time BCD contributor jsx.

- Update regex anchored sticky flag compatibility (BCD PR 561), from first-time contributor John Lenz.

- Update IE and Edge version compatibility for the button form attribute (BCD PR 566), from first-time contributor cb-josh-c.

- Add dns-prefetch, preconnect for editor iframe (Kuma PR 4455, issue #238), from Schalk Neethling.

- Switch docs to alabaster theme (Kuma PR 4457), from John Whitlock.

- Reduce padding and change bgcolor on inline code (Kuma PR 4471), from Stephanie Hobson.

- Prevent ISE when no file in cleaned data (Kuma PR 4475, bug 1410559), from Ryan Johnson.

- Fix lazy loading fonts (Kuma PR 4482, bug 1150118), from Stephanie Hobson.

- Delete CSSDataTypes.ejs (KumaScript PR 337), from mfluehr.

- Typo in GamepadEventProperties.ejs (KumaScript PR 341), from first-time contributor 즈눅.

- Add missing written articles to LearnSidebar (Client-side Web APIs) (KumaScript PR 347), from first-time KS contributor Jean-Yves Perrier.

- Add translations for French (fr) locale (KumaScript PR 353), from first-time contributor Kévin C.

- Added some Spanish translations (KumaScript PR 376), from first-time contributor Juan Ferrer Toribio.

- Datadog implementation for MDN Redis monitoring (Infra PR 561), from Dave Parfitt.

- Production configs for go-live day (Infra PR 565), from Ryan Johnson.

- MDN MM Frankfurt support (Infra PR 606), from Dave Parfitt.

- Add k8s job for doing MDN database migrations (Infra PR 610), from Ryan Johnson.

- Change MDN CDN origin to developer.mozilla.org (Infra PR 624), from Dave Parfitt.

- Add futuristic ASCII art diagram for MDN DNS (Infra PR 628), from Dave Parfitt.

- Update Fragmentation-related properties (Data PR 111), first of 15 Data PRs from mfluehr.

- Add inheritance file (from

{{InterfaceData}}) and its schema (Data PR 122), from first-time Data contributor Jean-Yves Perrier. - Add font-variation-settings descriptor to @font-face (Data PR 125), from first-time Data contributor jsx.

- Fix broken reference to units.md (Data PR 137), from first-time contributor Jonathan Neal.

- Copy edit of the readme file (Interactive Examples PR 297), from first-time Interactive Examples contributor Chris Mills.

- Further performance improvements, and adds Jest for testing (Interactive Examples PR 317), from Schalk Neethling.

Planned for November

Ship New Compat Table to Beta Users

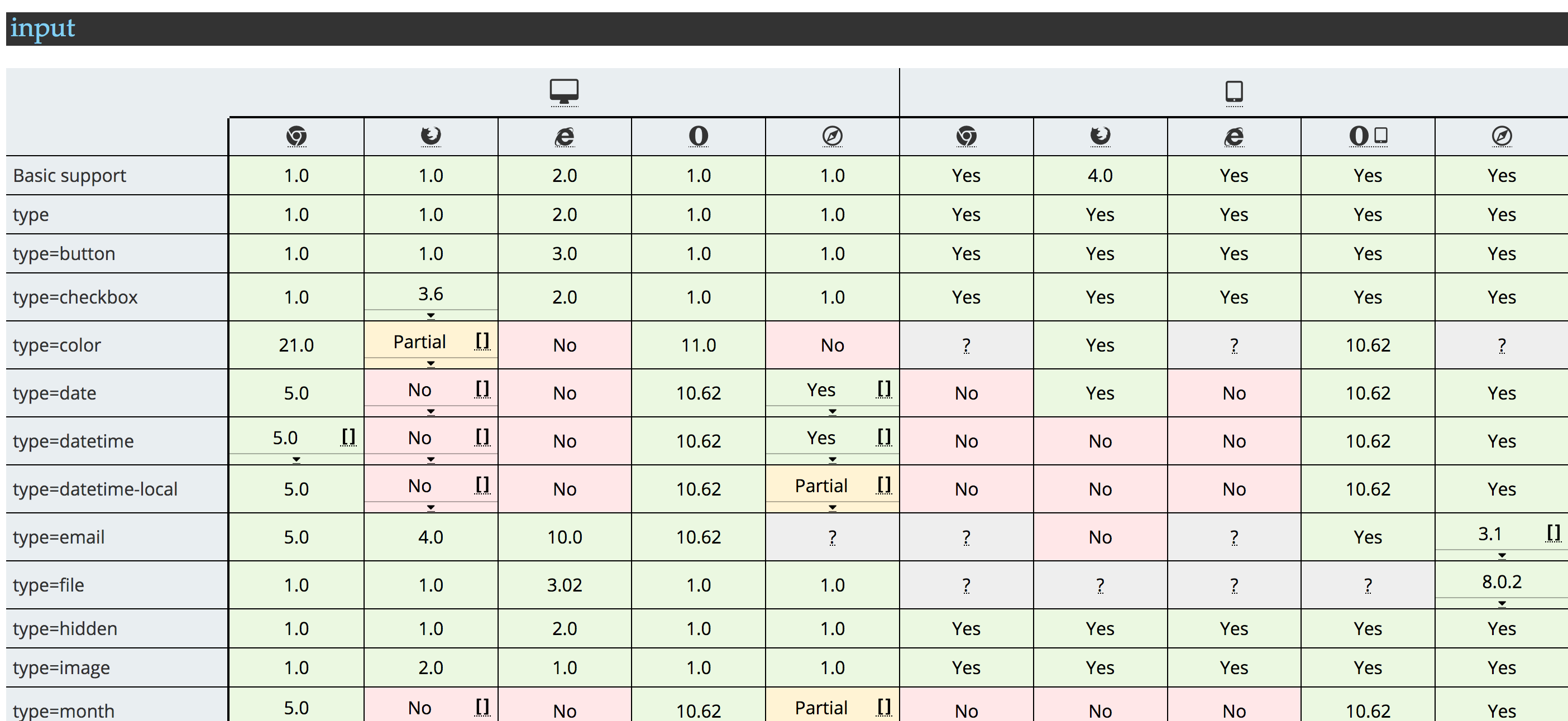

Stephanie Hobson and Florian are collaborating on a new compat table design for MDN, based on the BCD data. The new format summarizes support across desktop and mobile browsers, while still allowing developers to dive into the implementation details. We’ll ship this to beta users on 2200 MDN pages in November. See Beta Testing New Compatability Tables on Discourse for more details.

Improve Performance of MDN and the Interactive Editor

Page load times have increased with the move to AWS. We’re looking into ways to increase performance across MDN. You can follow our MDN Post-migration project for more details. We also want to enable the interactive editor for all users, but we’re concerned about further increasing page load times. You can follow the remaining issues in the interactive-examples repo.

Update Localization of KumaScript Macros

In August, we planned the toolkit we’d use to extract strings from KumaScript macros (see bug 1340342). We put implementation on hold until after the AWS migration. In November, we’ll dust off the plans and get some sample macros converted. We’re hopeful the community will make short work of the rest of the macros.

MDN in AWS

The AWS migration project started in November 2014, bug 1110799. The original plan was to switch by summer 2015, but the technical and organizational hurdles proved harder than expected. At the same time, the team removed many legacy barriers making Kuma hard to migrate. A highlight of the effort was the Mozilla All Hands in December 2015, where the team merged several branches of work-in-progress code to get Kuma running in Heroku. Thanks to Jannis Leidel, Rob Hudson, Luke Crouch, Lonnen, Will Kahn-Greene, David Walsh, James Bennet, cyliang, Jake, Sean Rich, Travis Blow, Sheeri Cabral, and everyone else who worked on or influenced this first phase of the project.

The migration project rebooted in Summer 2016. We switched to targeting Mozilla Marketing’s deployment environment. I split the work into smaller steps leading up to AWS. I thought each step would take about a month. They took about 3 months each. Estimating is hard.

Changes to MDN Services

MDN no longer uses Apache to serve files and proxy Kuma. Instead, Kuma serves requests directly with gunicorn with the meinheld worker. I did some analysis in January, and Dave Parfitt and Ryan Johnson led the effort to port Apache features to Kuma:

- Redirects are implemented with Paul McLanahan’s django-redirect-urls.

- Static assets (CSS, JavaScript, etc.) are served directly with WhiteNoise.

- Kuma handles the domain-based differences between the main website and the untrusted domain.

- Miscellaneous files like robots.txt, sitemaps, and legacy files (from the early days of MDN) are served directly.

- Kuma adds security headers to responses.

Another big change is how the services are run. The base unit of implementation in SCL3 was multi-purpose virtual machines (VMs). In AWS, we are switching to application-specific Docker containers.

In SCL3, the VMs were split into 6 user-facing web servers and 4 backend Celery servers. In AWS, the EC2 servers act as Docker hosts. Docker uses operating system virtualization, which has several advantages over machine virtualization for our use cases. The Docker images are distributed over the EC2 servers, as chosen by Kubernetes.

The SCL3 servers were maintained as long-running servers, using Puppet to install security updates and new software. The servers were multi-purpose, used for Kuma, KumaScript, and backend Celery processes. With Docker, we instead use a Python/Kuma image and a node.js/KumaScript image to implement MDN.

The Python/Kuma image is configurable through environment variables to run in different domains (such as staging or production), and to be configured as one of our three main Python services:

- web - User-facing Kuma service

- celery - Backend Kuma processes outside of the request loop

- api - A backend Kuma service, used by KumaScript to render pages. This avoids an issue in SCL3 where KumaScript API calls were competing with MDN user requests.

Our node.js/KumaScript service is also configured via environment variables, and implements the fourth main service of MDN:

- kumascript - The node.js service that renders wiki pages

Building the Docker images involves installing system software, installing the latest code, creating the static files, compiling translations, and preparing other run-time assets. AWS deployments are the relatively fast process of switching to newer Docker images. This is an improvement over SCL3, which required doing most of the work during deployment while developers watched.

An Introduction to Kubernetes

Kubernetes is a system for automating the deployment, scaling, and management of containerized applications. Kubernetes’s view of MDN looks like this:

A big part of understanding Kubernetes is learning the vocabulary. Kubernetes Concepts is a good place to start. Here’s how some of these concepts are implemented for MDN:

- Ten EC2 instances in AWS are configured as

Nodes, and

joined into a Kubernetes Cluster. Our “Portland Cluster” is in the

us-west2(Oregon) AWS region. Nine Nodes are available for application usage, and the master Node runs the Cluster. - The

mdn-prodNamespace collects the resources that need to collaborate to make MDN work. Themdn-stageNamespace is also in the Portland Cluster, as well as other Mozilla projects. - A Service

defines a service provided by an application at a TCP port. For example,

a webserver provides an HTTP service on port 80.

- The

webservice is connected to the outside world via an AWS Elastic Load Balancer (ELB), which can reach it at https://developer.mozilla.org (the main site) and https://mdn.mozillademos.org (the untrusted resources). - The

apiandkumascriptservices are available inside the cluster, but not routed to the outside world. celerydoesn’t accept HTTP requests, and so it doesn’t get a Service.

- The

- The application that provides a service is defined by a Deployment, which declares what Docker image and tag will be used, how many replicas are desired, the CPU and memory budget, what disk volumes should be mounted, and what the environment configuration should be.

- A Kubernetes Deployment is a higher-level object, implemented with a

ReplicaSet,

which then starts up several

Pods

to meet the demands. ReplicaSets are named after the Service plus a random

number, such as

web-61720, and the Pods are named after the ReplicaSets plus a random string, likeweb-61720-s7l.

ReplicaSets and Pods come into play when new software is rolled out. The Deployment creates a new ReplicaSet for the desired state, and creates new Pods to implement it, while it destroys the Pods in the old ReplicaSet. This rolling deployment ensures that the application is fully available while new code and configurations are deployed. If something is wrong with the new code that makes the application crash immediately, the deployment is cancelled. If it goes well, the old ReplicaSet is kept around, making it easier to rollback for subtler bugs.

This deployment style puts the burden on the developer to ensure that the two versions can run at the same time. Caution is needed around database changes and some interface changes. In exchange, deployments are smooth and safe with no downtime. Most of the setup work is done when the Docker images are created, so deployments take about a minute from start to finish.

Kubernetes takes control of deploying the application and ensures it keeps running. It allocates Pods to Nodes (called Scheduling), based on the CPU and memory budget for the Pod, and the existing load on each Node. If a Pod terminates, due to an error or other cause, it will be restarted or recreated. If a Node fails, replacement Pods will be created on surviving Nodes.

The Kubernetes system allows several ways to scale the application. We used some for handling the unexpected load of the user attachments:

- We went from 10 to 11 Nodes, to increase the total capacity of the Cluster.

- We scaled the

webDeployment from 6 to 20 Pods, to handle more simultaneous connections, including the slow file requests. - We scaled the

celeryDeployment from 6 to 10 Pods, to handle the load of populating the cold cache. - We adjusted the

gunicornworker threads from 4 to 8, to increase the simultaneous connections - We rolled out new code to improve caching

There are many more details, which you can explore by reading our configuration files in the infra repo. We use Jinja for our templates, which we find more readable than the Go templates used by many Kubernetes projects. We’ll continue to refine these as we adjust and improve our infrastructure. You can see our current tasks by following the MDN Post-migration project.