Application Services Rust Components

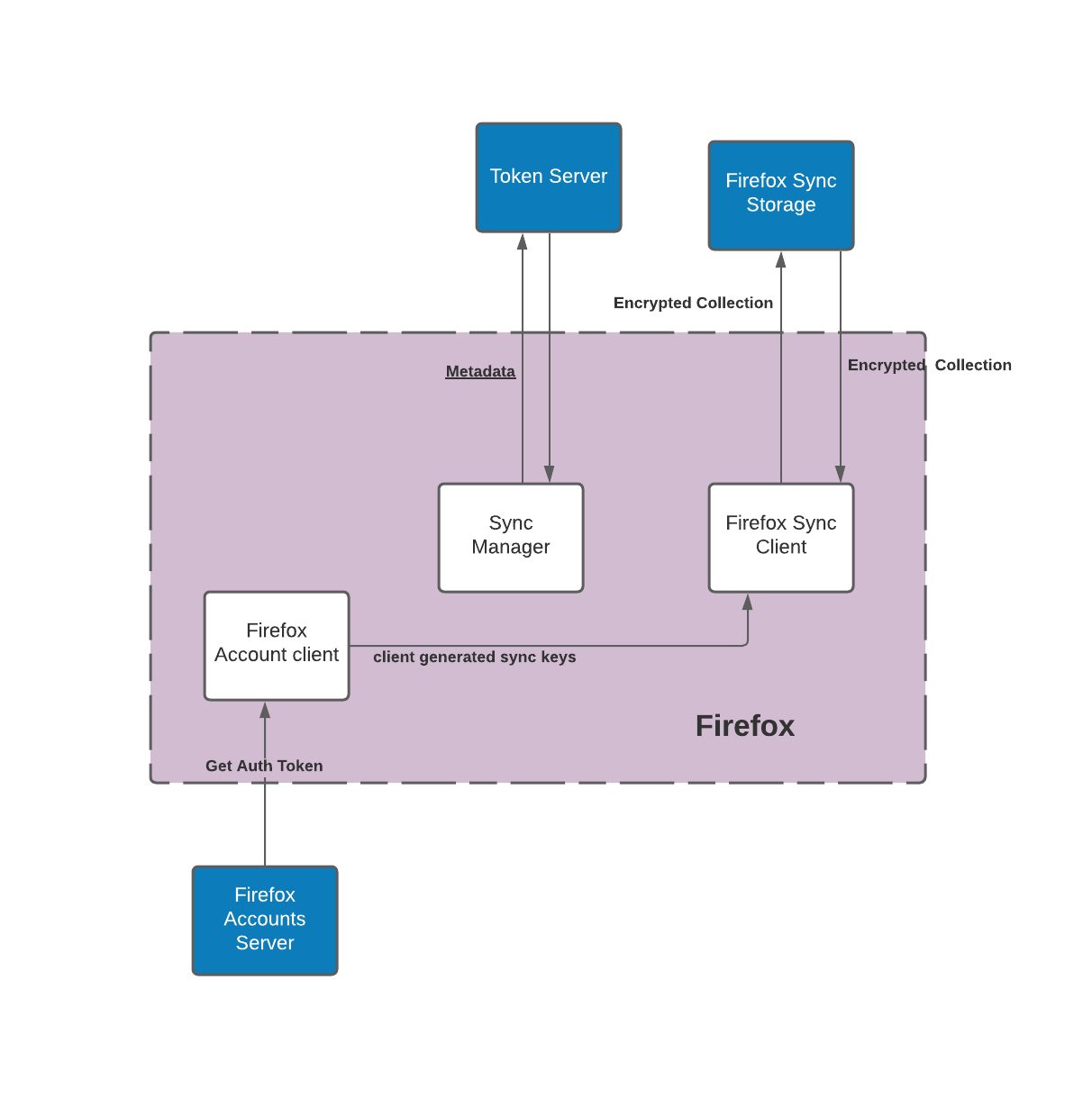

Application Services is a collection of Rust components. The components enable Firefox and related applications to integrate with Firefox Accounts and Sync, and to run experiments. Each component is built using a core of shared code written in Rust, wrapped with native language bindings for different platforms.

Contact us

To contact the Application Services team you can:

- Find us in the chat #rust-components:mozilla.org (How to connect)

- To report issues with sync on Firefox Desktop, file a bug in Bugzilla for Firefox :: Sync

- To report issues with our components, file an issue in the GitHub issue tracker

The source code is available on GitHub.

License

The Application Services Source Code is subject to the terms of the Mozilla Public License v2.0. You can obtain a copy of the MPL at https://mozilla.org/MPL/2.0/.

Contributing to Application Services

Anyone is welcome to help with the Application Services project. Feel free to get in touch with other community members on Matrix or through issues on GitHub.

Participation in this project is governed by the Mozilla Community Participation Guidelines.

Bug Reports

You can file issues on GitHub. Please try to include as much information as you can and under what conditions you saw the issue.

Building the project

Build instructions are available in the building page. Please let us know if you encounter any pain-points setting up your environment.

Finding issues

Below are a few different queries you can use to find appropriate issues to work on. Feel free to reach out if you need any additional clarification before picking up an issue.

- good first issues - If you are a new contributor, search for issues labeled good-first-issue.

- good second issues - Once you’ve got that first PR approved and you are looking for something a little more challenging, we are keeping a list of next-level issues. Search for the good-second-issue label.

- papercuts - A collection of smaller sized issues that may be a bit more advanced than a first or second issue.

- important, but not urgent - For more advanced contributors, we have a collection of issues that we consider important and would like to resolve sooner, but work isn’t currently prioritized by the core team.

Sending Pull Requests

Patches should be submitted as pull requests (PRs).

When submitting PRs, we expect external contributors to push patches to a fork of application-services. For more information about submitting PRs from forks, read GitHub’s guide.

Before submitting a PR:

- Your patch should include new tests that cover your changes, or be accompanied by an explanation of why it doesn’t need any. It is your and your reviewer’s responsibility to ensure your patch includes adequate tests.

- Consult the testing guide for some tips on writing effective tests.

- Your code should pass all the automated tests before you submit your PR for review.

- Before pushing your changes, run ./automation/tests.py changes. The script will calculate which components were changed and run test suites, linters and formatters against those components. Because the script runs a limited set of tests, it should execute in a fairly reasonable amount of time.

- Your patch should include a changelog entry in CHANGELOG.md or an explanation of why it does not need one. Any breaking changes to Swift or Kotlin binding APIs should be noted explicitly.

- If your patch adds new dependencies, they must follow our dependency management guidelines. Please include a summary of the due diligence applied in selecting new dependencies.

- After you open a PR, our Continuous Integration system will run a full test suite. It’s possible that this step will result in errors not caught with the script so make sure to check the results.

- “Work in progress” pull requests are welcome, but should be clearly labeled as such and should not be merged until all tests pass and the code has been reviewed.

- You can label pull requests as “Work in progress” by using the GitHub PR UI to indicate this PR is a draft (learn more about draft PRs).

When submitting a PR:

- You agree to license your code under the project’s open source license (MPL 2.0).

- Base your branch off the current main branch.

- Add both your code and new tests if relevant.

- Please do not include merge commits in pull requests; include only commits with the new relevant code.

- We encourage you to GPG sign your commits.

Code Review

This project is production Mozilla code and subject to our engineering practices and quality standards. Every patch must be peer reviewed by a member of the Application Services team.

Building Application Services

When working on Application Services, it’s important to set up your environment for building the Rust code and the Android or iOS code needed by the application.

First time builds

Building for the first time is more complicated than a typical Rust project. To build for an end-to-end experience that enables you to test changes in client applications like Firefox for Android (Fenix) and Firefox iOS, there are a number of build systems required for all the dependencies. The initial setup is likely to take a number of hours to complete.

Building the Rust Components

Complete this section before moving to the Android/iOS build instructions.

- Make sure you cloned the repository:

$ git clone https://github.com/mozilla/application-services # (or use the ssh link)

$ cd application-services

$ git submodule update --init --recursive

- Install Rust: install via rustup

- Install your system dependencies:

Linux

- Install the system dependencies required for building NSS

  - Install gyp (required for NSS): apt install gyp

  - Install ninja-build: apt install ninja-build

  - Install python3 (at least 3.6): apt install python3

  - Install zlib: apt install zlib1g-dev

  - Install perl (needed to build openssl): apt install perl

  - Install patch (to build the libs): apt install patch

- Install the system dependencies required for SQLcipher

  - Install tcl (required for SQLcipher): apt install tclsh

- Install the system dependencies required for bindgen

  - Install libclang: apt install libclang-dev

macOS

- Install Xcode: check the ci config for the correct version.

- Install Xcode tools: xcode-select --install

- Install homebrew via its installation instructions (it’s what we use for ci).

- Install the system dependencies required for building NSS:

  - Install ninja and python: brew install ninja python

  - Make sure which python3 maps to the freshly installed homebrew python.

    - If it doesn’t, add the following to your bash/zsh profile and source the profile before continuing:

      alias python3=$(brew --prefix)/bin/python3

    - Ensure python maps to the same Python version. You may have to create a symlink:

      PYPATH=$(which python3); ln -s $PYPATH `dirname $PYPATH`/python

  - Install gyp:

    wget https://bootstrap.pypa.io/ez_setup.py -O - | python3 -
    git clone https://chromium.googlesource.com/external/gyp.git ~/tools/gyp
    cd ~/tools/gyp
    pip install .

    - Add ~/tools/gyp to your path (use $HOME rather than ~ here, because ~ is not expanded inside double quotes):

      export PATH="$HOME/tools/gyp:$PATH"

    - If you have additional questions, consult this guide.

  - Make sure your homebrew python’s bin folder is on your path by updating your bash/zsh profile with the following:

    export PATH="$PATH:$(brew --prefix)/opt/python@3.9/Frameworks/Python.framework/Versions/3.9/bin"

Windows

Install windows build tools

Why Windows Subsystem for Linux (WSL)?

It’s currently tricky to get some of these builds working on Windows, primarily due to our use of SQLcipher. By using WSL it is possible to get builds working, but still have them published to your “native” local maven cache so it’s available for use by a “native” Android Studio.

- Install WSL (recommended over native tooling)

- Install unzip: sudo apt install unzip

- Install python3: sudo apt install python3 (Note: must be python 3.6 or later)

- Install system build tools: sudo apt install build-essential

- Install zlib: sudo apt-get install zlib1g-dev

- Install tcl: sudo apt install tcl-dev

- Check dependencies and environment variables by running:

./libs/verify-desktop-environment.sh

Note that this script might instruct you to set some environment variables. Set those by adding them to your .zshrc or .bashrc so they are set by default in your terminal. If the script does instruct you to set any, you must run it again after setting them so the libraries are built.

- Run cargo test:

cargo test

Once you have successfully run ./libs/verify-desktop-environment.sh and cargo test, you can move to the Building for Fenix and Building for iOS sections below to set up your local environment for testing with our client applications.

Building for Fenix

The following instructions assume that you are building application-services for Fenix, and want to take advantage of the Fenix Auto-publication workflow for android-components and application-services.

- Install Android SDK, JAVA, NDK and set required env vars

  - Clone the firefox-android repository (not inside the Application Services repository).

  - Install Java 17 for your system

  - Set JAVA_HOME to point to the JDK 17 installation directory.

  - Download and install Android Studio.

  - Set ANDROID_SDK_ROOT and ANDROID_HOME to the Android Studio sdk location and add them to your rc file (either .zshrc or .bashrc depending on the shell you use for your terminal).

  - Configure the required versions of NDK: Configure menu > System Settings > Android SDK > SDK Tools > NDK > Show Package Details > NDK (Side by side)

    - 29.0.14206865 (required by Application Services, as configured)

- If you are on Windows using WSL - drop to the section below, Windows setup for Android (WSL) before proceeding.

- Check dependencies, environment variables

  - Run ./libs/verify-android-environment.sh

  - Follow instructions and rerun until it is successful.

Windows setup for Android (via WSL)

Note: For non-Ubuntu linux versions, it may be necessary to execute $ANDROID_HOME/tools/bin/sdkmanager "build-tools;26.0.2" "platform-tools" "platforms;android-26" "tools". See also this gist for additional information.

Configure Maven

Configure maven to use the native Windows maven repository. Then, when you run ./gradlew install from WSL, the output ends up in the Windows maven repo. This means we can do a number of things with Android Studio in “native” Windows and have them work correctly with stuff we built in WSL.

- Install maven: sudo apt install maven

- Confirm existence of (or create) a ~/.m2 folder

- In the ~/.m2 folder, create a file called settings.xml

- Add the content below, replacing {username} with your username:

<settings>

<localRepository>/mnt/c/Users/{username}/.m2/repository</localRepository>

</settings>
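The folder and file steps above can be scripted. The following is a minimal sketch, assuming a bash shell inside WSL; the function name write_maven_settings and its arguments are illustrative and are not part of the application-services repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of application-services): writes a Maven
# settings.xml that points localRepository at the Windows-side repository.
# $1 = Windows username, $2 = target .m2 directory (defaults to ~/.m2).
write_maven_settings() {
  local username="$1"
  local m2_dir="${2:-$HOME/.m2}"
  # Create the folder if it does not exist yet.
  mkdir -p "$m2_dir"
  # Write the same three-line file shown above, with {username} filled in.
  cat > "$m2_dir/settings.xml" <<EOF
<settings>
  <localRepository>/mnt/c/Users/${username}/.m2/repository</localRepository>
</settings>
EOF
}

# Example: write_maven_settings "alice"
```

Note that this sketch overwrites any existing settings.xml in the target folder, so back up a customised file first.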

Building for Firefox iOS

- Install xcpretty: gem install xcpretty

- Run ./libs/verify-ios-environment.sh to check your setup and environment variables.

  - Make any corrections recommended by the script and re-run.

- Run ./automation/build_ios_artifacts.sh to build all the binaries, generate the UniFFI bindings, and generate the Glean metrics.

Note: The artifacts generated in the above steps can then be used in any consuming product

Locally building Firefox iOS against a local Application Services

Detailed steps to build Firefox iOS against a local Application Services checkout can be found in this document

Using locally published components in Fenix

It’s often important to test work-in-progress changes to Application Services components against a real-world consumer project. The most reliable method of performing such testing is to publish your components to a local Maven repository, and adjust the consuming project to install them from there.

With support from the upstream project, it’s possible to do this in a single step using our auto-publishing workflow.

Using the auto-publishing workflow

mozilla-central has support for automatically publishing and including a local development version of application-services in the build. This is supported for most of the Android targets available in mozilla-central including Fenix - this doc will focus on Fenix, but the same general process is used for all. The workflow is:

Pre-requisites:

- Ensure you have a regular build of application-services working.

- Disable the gradle cache in mozilla-central - edit ./gradle.properties, comment out org.gradle.configuration-cache=true

- Ensure you have a regular build of Fenix from mozilla-central testable in Android Studio or an emulator.

Setup 2 x local.properties

Edit (or create) the file local.properties in each of the repos:

app-services

In the root of the app-services repo:

Please be sure you have read our guide to building Fenix and successfully built using the instructions there. In particular, this may lead you to add sdk.dir and ndk.dir properties, and/or set the environment variables ANDROID_SDK_ROOT and ANDROID_HOME.

In addition to those instructions, you will need:

rust.targets

Both the auto-publishing and manual workflows can be sped up significantly by using the rust.targets property, which limits which architectures the Rust code gets built against. Add a line like rust.targets=x86,linux-x86-64. The trick is knowing which targets to put in that comma separated list:

- Use x86 for running the app on most emulators on Intel hardware (in rare cases, when you have a 64-bit emulator, you’ll want x86_64).

- Use arm64 for emulators running on Apple Silicon Macs.

- If you’re running the android-components or fenix unit tests, then you’ll need the architecture of your machine:

  - OSX running Intel chips: darwin-x86-64

  - OSX running M1 chips: darwin-aarch64

  - Linux: linux-x86-64

For example, on an Apple Silicon Mac your local.properties file will have a single line, rust.targets=darwin-aarch64,arm64
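The target list above can be turned into a small lookup. This is a sketch under assumptions: the function name suggest_rust_targets is made up for illustration, and pairing each host with one emulator target is an inference from the list above, not something the build system does for you.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggests a rust.targets value from the OS name and CPU
# architecture, following the list above.
# $1 = output of `uname -s`, $2 = output of `uname -m`
# (both default to the values for the current machine).
suggest_rust_targets() {
  local os="${1:-$(uname -s)}"
  local arch="${2:-$(uname -m)}"
  case "$os/$arch" in
    Darwin/arm64)  echo "darwin-aarch64,arm64" ;;  # Apple Silicon host + arm64 emulator
    Darwin/x86_64) echo "darwin-x86-64,x86" ;;     # Intel Mac host + x86 emulator
    Linux/x86_64)  echo "linux-x86-64,x86" ;;      # Linux host + x86 emulator
    *) echo "no suggestion for host: $os/$arch" >&2; return 1 ;;
  esac
}

# Example: echo "rust.targets=$(suggest_rust_targets)" >> local.properties
```

If you use a 64-bit Intel emulator, replace x86 with x86_64 by hand, as noted in the list.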

mozilla-central

local.properties can be in the root of the mozilla-central checkout, or in the project specific directory (e.g., mobile/android/fenix). You tell it where to find your local checkout of application-services by adding a line like:

autoPublish.application-services.dir=path/to/your/checkout/of/application-services

Note that the path can be absolute or relative from local.properties. For example, if application-services and mozilla-central are at the same level, and you are using a local.properties in the root of mozilla-central, the relative path would be ../application-services
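Because local.properties is edited in two repositories, a small helper that sets a property without duplicating it can be handy. The sketch below is illustrative only: set_property is a hypothetical function, not a script shipped with either repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sets key=value in a properties file, replacing an
# existing line for the same key or appending a new one.
# $1 = file, $2 = key, $3 = value.
set_property() {
  local file="$1" key="$2" value="$3" tmp
  touch "$file"
  tmp="$(mktemp)"
  # Keep every line that does not start with "key=" (plain string comparison,
  # so dots in the key are not treated as regex wildcards).
  awk -v prefix="${key}=" 'index($0, prefix) != 1' "$file" > "$tmp"
  # Append the new value for the key.
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Write back in place so the original file's permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example, run from the root of mozilla-central:
#   set_property local.properties autoPublish.application-services.dir ../application-services
```

Running the helper twice with the same key leaves a single line for that key, so it is safe to re-run when you move your checkout.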

Build and test your Fenix again.

After configuring as described above, build and test your Fenix again.

If all goes well, this should automatically build your checkout of application-services, publish it to a local maven repository, and configure the consuming project to install it from there instead of from our published releases.

Other notes

Using Windows/WSL

Good luck! This implies you are also building mozilla-central in a Windows/WSL environment; please contribute docs if you got this working.

However, there’s an excellent chance that you will need to execute ./automation/publish_to_maven_local_if_modified.py from your local application-services root.

Caveats

- This assumes you are able to build both Fenix and application-services directly before following any of these instructions.

- Make sure you’re fully up to date in all repos, unless you know you need to not be.

- Contact us if you get stuck.

How to locally test Swift Package Manager components on Firefox iOS

This guide explains how to build and test Firefox iOS against a local Application Services checkout. For background on our Swift Package approach, see the ADR.

At a glance

Goal: Build a local Firefox iOS against a local Application Services.

Current workflow (recommended):

- Build an XCFramework from your local application-services.

- Point Firefox iOS’s local Swift package (MozillaRustComponents/Package.swift) at that artifact (either an HTTPS URL + checksum, or a local path:).

- Update UniFFI generated swift source files.

- Reset package caches in Xcode and build Firefox iOS.

A legacy flow that uses the rust-components-swift package is documented at the end while we’re in mid-transition to the new system.

Prerequisites

- A local checkout of Firefox iOS that builds: https://github.com/mozilla-mobile/firefox-ios#building-the-code

- A local checkout of Application Services prepared for iOS builds: see Building for Firefox iOS

Step 1 — Build all artifacts needed from Application Services.

This step builds an XCFramework and also generates swift sources for the components with UniFFI.

From the root of your application-services checkout, execute:

./automation/build_ios_artifacts.sh

This produces:

- megazords/ios-rust/MozillaRustComponents.xcframework, containing:

  - The compiled Rust code as a static library (for all iOS targets)

  - C headers and Swift module maps for the components

- megazords/ios-rust/MozillaRustComponents.xcframework.zip, which is a zip of the above directory. By default, firefox-ios consumes the .zip file, although as we discuss below, we will change firefox-ios to use the directory itself.

- megazords/ios-rust/Sources/MozillaRustComponentsWrapper/Generated/*.swift, which are the source files generated by UniFFI.

Step 2 — Point Firefox iOS to your local artifact

Firefox iOS consumes Application Services via a local Swift package in-repo at:

{path-to-firefox-ios}/MozillaRustComponents/Package.swift

Update it as described below:

- Switch the binaryTarget to a local path. Note, however, that the path can’t be absolute; it must be relative to Package.swift. For example, if you have application-services checked out next to the firefox-ios repo, a suitable relative path might be ../../application-services/megazords/ios-rust/MozillaRustComponents.xcframework

- Comment out or remove the url and checksum elements - they aren’t needed when pointing to a local path:

// In firefox-ios/MozillaRustComponents/Package.swift

.binaryTarget(

name: "MozillaRustComponents",

// ** Comment out the existing `url` and `checksum` entries

// url: url,

// checksum: checksum

// ** Add a new `path` entry.

path: "../../application-services/megazords/ios-rust/MozillaRustComponents.xcframework"

)

- Update UniFFI generated files: manually copy ./megazords/ios-rust/Sources/MozillaRustComponentsWrapper/Generated/*.swift from your application-services directory to the existing ./MozillaRustComponents/Sources/MozillaRustComponentsWrapper/Generated/ directory in your firefox-ios checkout.

For example, from the root of your application-services directory:

cp ./megazords/ios-rust/Sources/MozillaRustComponentsWrapper/Generated/*.swift ../firefox-ios/MozillaRustComponents/Sources/MozillaRustComponentsWrapper/Generated/.

Step 3 — Reset caches and build

In Xcode:

- File → Packages → Reset Package Caches

- (If needed) File → Packages → Update to Latest Package Versions

- Product → Clean Build Folder, then build and run Firefox iOS.

If you still see stale artifacts (rare), delete:

~/Library/Caches/org.swift.swiftpm

~/Library/Developer/Xcode/DerivedData/*

…and build again.
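The manual cleanup above can be wrapped in a function. This is a sketch only: clear_swift_caches is a hypothetical name, and the optional argument exists so the function can be tried against a scratch directory before pointing it at your real home directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: removes the SwiftPM cache directory and the contents of
# Xcode's DerivedData directory, i.e. the two paths listed above.
# $1 = home directory to operate on (defaults to $HOME).
clear_swift_caches() {
  local home_dir="${1:-$HOME}"
  local derived="$home_dir/Library/Developer/Xcode/DerivedData"
  # Remove the SwiftPM cache directory entirely.
  rm -rf "$home_dir/Library/Caches/org.swift.swiftpm"
  # Remove everything inside DerivedData but keep the directory itself.
  if [ -d "$derived" ]; then
    find "$derived" -mindepth 1 -maxdepth 1 -exec rm -rf {} +
  fi
}

# Example: clear_swift_caches
```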

Using an external XCFramework artifact.

The instructions above all assume you have an XCFramework you built locally. However, you might instead want to use a MozillaRustComponents.xcframework.zip from an HTTPS-accessible URL (e.g., a Taskcluster or GitHub artifact URL).

In this scenario, you also need the checksum of the zip file - this can be obtained by executing

swift package compute-checksum megazords/ios-rust/MozillaRustComponents.xcframework.zip

However, that means you need to have the .zip file locally - in which case you might as well unzip it and use the instructions above for consuming it from a local path!

But for completeness, once you have an HTTPS URL and the checksum, you should edit MozillaRustComponents/Package.swift and set the binaryTarget to the zip URL and checksum:

// In firefox-ios/MozillaRustComponents/Package.swift

.binaryTarget(

name: "MozillaRustComponents",

url: "https://example.com/path/MozillaRustComponents.xcframework.zip",

checksum: "<sha256 from `swift package compute-checksum`>"

)

Note: Every time you produce a new zip, you must update the checksum.

Note: The above instructions do not handle copying updated files generated by UniFFI. If you have changed the public API of any components, this process as written will not work.
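The checksum placeholder in the snippet above describes the value as a sha256, so a quick cross-check is to hash the zip with a standard tool and compare the result with the output of swift package compute-checksum. The helper below is a sketch; sha256_of is a hypothetical name, and the assumption that both values match should be verified on your machine before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the SHA-256 hex digest of a file using whichever
# standard tool is available (sha256sum on most Linux systems, shasum on macOS).
# $1 = path to the file, e.g. the MozillaRustComponents.xcframework.zip.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | awk '{print $1}'
  else
    shasum -a 256 "$1" | awk '{print $1}'
  fi
}

# Example:
#   sha256_of megazords/ios-rust/MozillaRustComponents.xcframework.zip
```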

Disabling local development

To revert quickly:

- Revert your changes to MozillaRustComponents/Package.swift (e.g., git checkout -- MozillaRustComponents/Package.swift).

- Reset Package Caches in Xcode.

- Build Firefox iOS.

Troubleshooting

- Old binary still in use: Reset caches and clear DerivedData, then rebuild.

- Branch switches in application-services: Rebuild the XCFramework and update the package reference (URL/checksum or path:).

- Checksum mismatch (URL mode): Run swift package compute-checksum on the new zip and update Package.swift.

- Build script issues: Re-run ./build-xcframework.sh from megazords/ios-rust.

Legacy: using rust-components-swift (remote package)

WARNING: rust-components-swift is deprecated for Firefox iOS. Prefer the local package at MozillaRustComponents/ unless you must validate against the legacy package for a specific task.

Some teams may still need the legacy flow temporarily. Historically, Firefox iOS consumed Application Services through the rust-components-swift package. To test locally with that setup:

- Build the XCFramework from application-services.

- In a local checkout of rust-components-swift, point its Package.swift to the local path of the unzipped XCFramework:

.binaryTarget(
    name: "MozillaRustComponents",
    path: "./MozillaRustComponents.xcframework"
)

- Commit the changes in rust-components-swift (Xcode only reads committed package content).

- In Firefox iOS, replace the package dependency with a local reference to your rust-components-swift checkout (e.g., via Xcode’s “Add Local…” in Package Dependencies).

How to locally test Swift Package Manager components on Focus iOS

This is a guide on testing the Swift Package Manager component locally against a local build of Focus iOS. For more information on our Swift Package Manager design, read the ADR that introduced it.

NOTE - This guide is slightly out of date and needs to be updated. Better instructions can be found in the instructions for Firefox, but that process needs some Focus-specific tweaks.

This guide assumes the component you want to test is already distributed with the rust-components-swift repository. If you would like to distribute a new component, read the guide for adding a new component.

To test a component locally, you will need to do the following:

- Build an xcframework in a local checkout of application-services

- Include the xcframework in a local checkout of rust-components-swift

- Run the make-tag script in rust-components-swift using a local checkout of application-services

- Include the local checkout of rust-components-swift in Focus

Below are more detailed instructions for each step

Building the xcframework

To build the xcframework do the following:

- In a local checkout of application-services, navigate to megazords/ios-rust/

- Run the build-xcframework.sh script:

$ ./build-xcframework.sh --focus

This will produce a file named FocusRustComponents.xcframework.zip in the focus directory that contains the following, built for all our target iOS platforms.

- The compiled Rust code for all the crates listed in Cargo.toml as a static library

- The C header files and Swift module maps for the components

Include the xcframework in a local checkout of rust-components-swift

After you generated the FocusRustComponents.xcframework.zip in the previous step, do the following to include it in a local checkout of rust-components-swift:

- Clone a local checkout of rust-components-swift, not inside the application-services repository:

  git clone https://github.com/mozilla/rust-components-swift.git

- Unzip the FocusRustComponents.xcframework.zip into the rust-components-swift repository (assuming you are in the root of the rust-components-swift directory and application-services is a neighbor directory):

  unzip -o ../application-services/megazords/ios-rust/focus/FocusRustComponents.xcframework.zip -d .

- Change the Package.swift’s reference to the xcframework to point to the unzipped FocusRustComponents.xcframework that was created in the previous step. You can do this by uncommenting the following line:

  path: "./FocusRustComponents.xcframework"

  and commenting out the following lines:

  url: focusUrl,
  checksum: focusChecksum,

Run the generation script with a local checkout of application services

For this step, run the following script from inside the rust-components-swift repository (assuming that application-services is a neighboring directory to rust-components-swift).

./generate.sh ../application-services

Once that is done, stage and commit the changes the script made. Xcode can only pick up committed changes.

Include the local checkout of rust-components-swift in Focus

This is the final step to include your local changes into Focus. Do the following steps:

-

Clone a local checkout of

Focusif you haven’t already. Make sure you also install the project dependencies, more information in their build instructions -

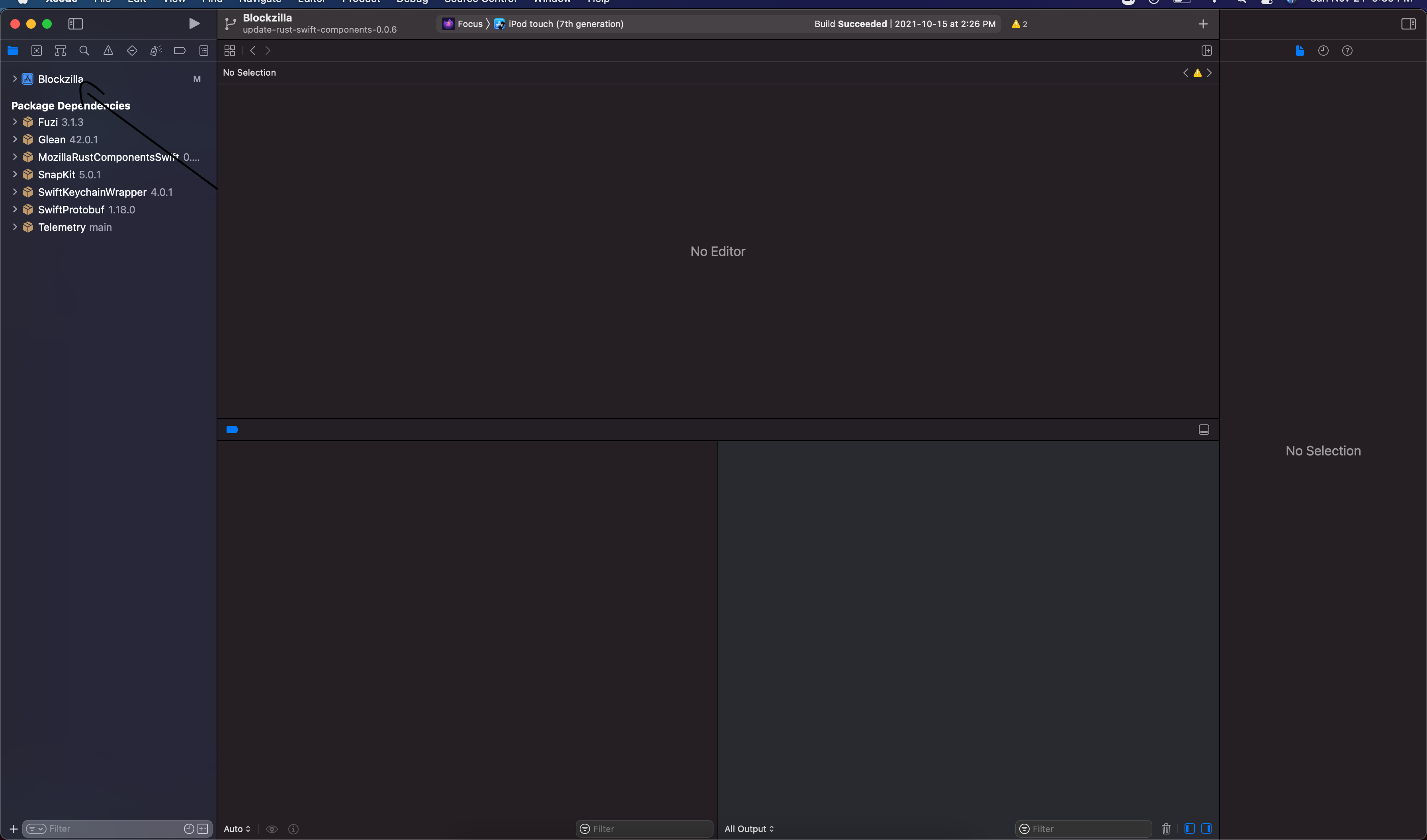

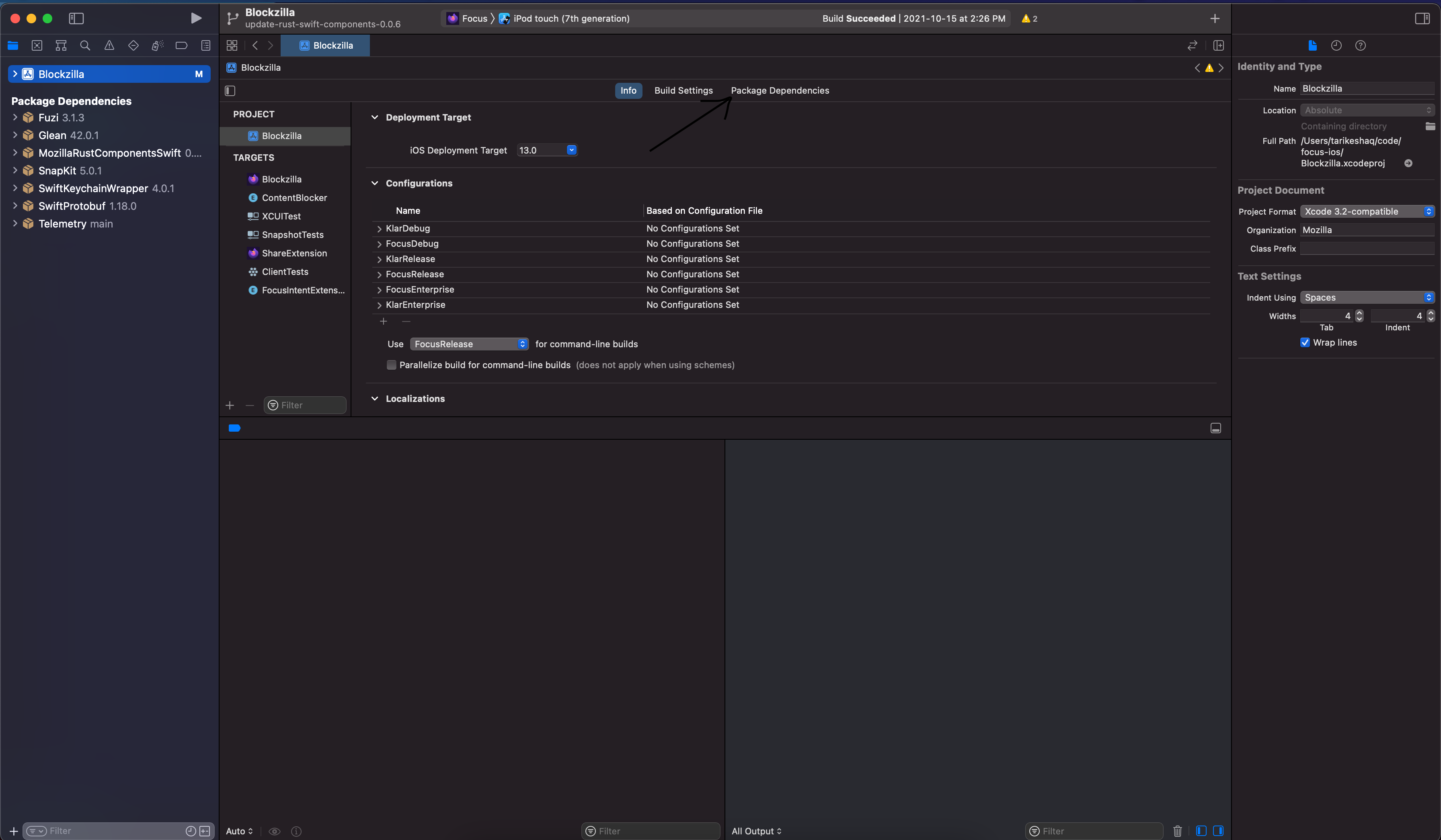

Open

Blockzilla.xcodeprojin Xcode -

Navigate to the Swift Packages in Xcode:

-

Remove the dependency on

rust-components-swiftas listed on Xcode, you can click the dependency then click the- -

Add a new swift package by clicking the

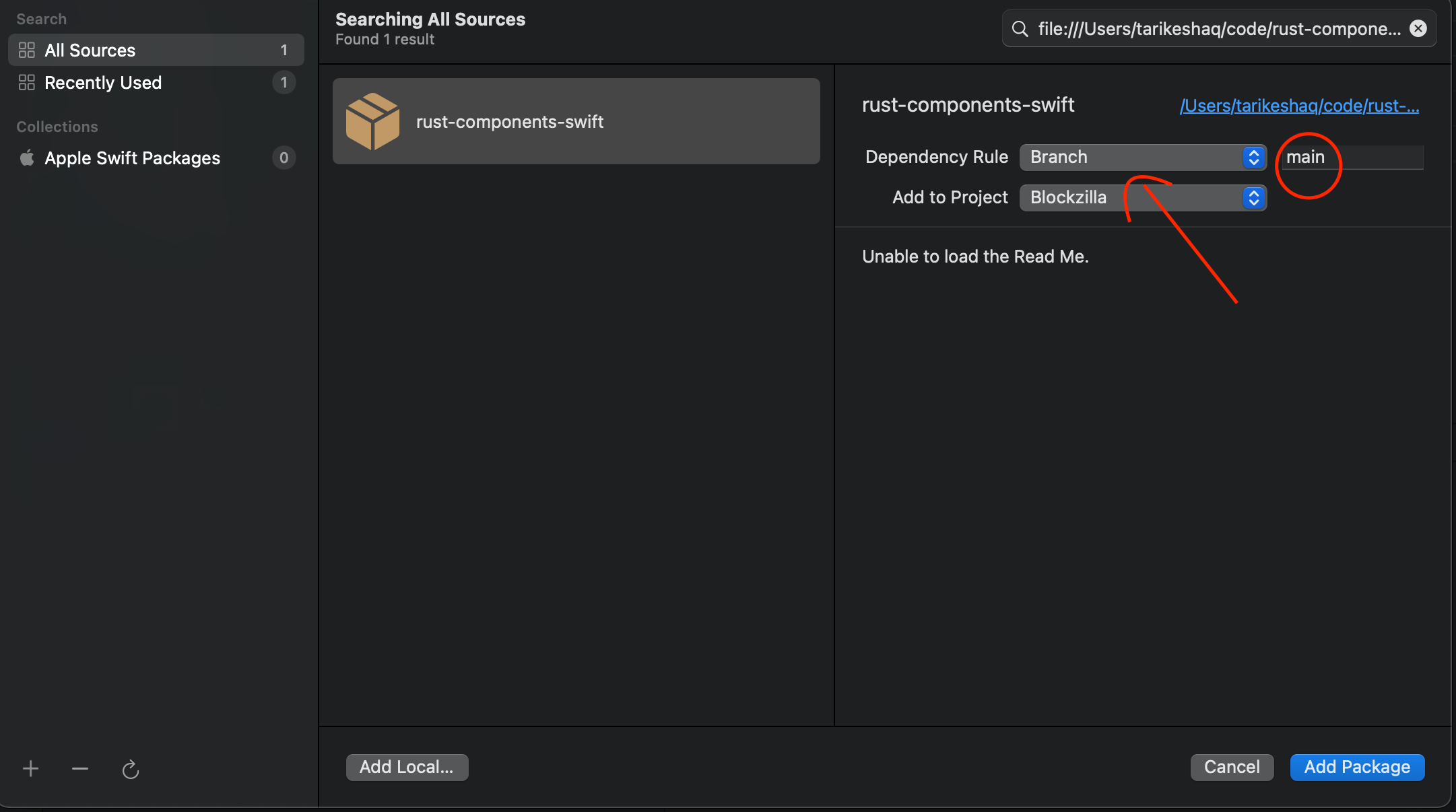

+:- On the top right, enter the full path to your

rust-components-swiftcheckout, preceded byfile://. If you don’t know what that is, runpwdin while inrust-components-swift. For example:file:///Users/tarikeshaq/code/rust-components-swift - Change the branch to be the checked-out branch of rust-component-swift you have locally. This is what the dialog should look like:

- Click

Add Package - Now include the

FocusAppServiceslibrary.

Note: If Xcode prevents you from adding the dependency to reference a local package, you will need to manually modify the

Blockzilla.xcodeproj/project.pbxprojand replace every occurrence ofhttps://github.com/mozilla/rust-components-swiftwith the full path to your local checkout. - On the top right, enter the full path to your

-

Finally, attempt to build focus, and if all goes well it should launch with your code. If you face any problems, feel free to contact us

Building and using a locally-modified version of JNA

Java Native Access is an important dependency for the Application Services components on Android, as it provides the low-level interface from the JVM into the natively-compiled Rust code.

If you need to work with a locally-modified version of JNA (e.g. to investigate an apparent JNA bug) then you may find these notes helpful.

The JNA docs do have an Android Development Environment guide that is a good starting point, but the instructions did not work for me and appear a little out of date. Here are the steps that worked for me:

- Modify your environment to specify $NDK_PLATFORM, and to ensure the Android NDK tools for each target platform are in your $PATH. On my Mac with Android Studio the config was as follows:

  export NDK_ROOT="$HOME/Library/Android/sdk/ndk/28.2.13676358"
  export NDK_PLATFORM="$NDK_ROOT/platforms/android-25"
  export PATH="$PATH:$NDK_ROOT/toolchains/llvm/prebuilt/darwin-x86_64/bin"
  export PATH="$PATH:$NDK_ROOT/toolchains/aarch64-linux-android-4.9/prebuilt/darwin-x86_64/bin"
  export PATH="$PATH:$NDK_ROOT/toolchains/arm-linux-androideabi-4.9/prebuilt/darwin-x86_64/bin"
  export PATH="$PATH:$NDK_ROOT/toolchains/x86-4.9/prebuilt/darwin-x86_64/bin"
  export PATH="$PATH:$NDK_ROOT/toolchains/x86_64-4.9/prebuilt/darwin-x86_64/bin"

  You will probably need to tweak the paths and version numbers based on your operating system and the details of how you installed the Android NDK.

- Install the ant build tool (using brew install ant worked for me).

- Check out the JNA source from GitHub. Try doing a basic build via ant dist and ant test. This won’t build for Android but will test the rest of the tooling.

- Adjust ./native/Makefile for compatibility with your Android NDK install. Here’s what I had to do for mine:

  - Adjust the $CC variable to use clang instead of gcc: CC=aarch64-linux-android21-clang.

  - Adjust the $CPP variable to use the version from your system: CPP=cpp.

  - Add -landroid -llog to the list of libraries to link against in $LIBS.

- Build the JNA native libraries for the target platforms of interest:

  ant -Dos.prefix=android-aarch64
  ant -Dos.prefix=android-armv7
  ant -Dos.prefix=android-x86-64

- Package the newly-built native libraries into a JAR/AAR using ant dist. This should produce ./dist/jna.aar.

- Configure build.gradle for the consuming application to use the locally-built JNA artifact:

  // Tell gradle where to look for local artifacts.
  repositories {
      flatDir {
          dirs "/PATH/TO/YOUR/CHECKOUT/OF/jna/dist"
      }
  }

  // Tell gradle to exclude the published version of JNA.
  configurations {
      implementation {
          exclude group: "net.java.dev.jna", module: "jna"
      }
  }

  // Take a direct dependency on the local JNA AAR.
  dependencies {
      implementation name: "jna", ext: "aar"
  }

- Rebuild and run your consuming application, and it should be using the locally-built JNA!

If you’re trying to debug some unexpected JNA behaviour (and if you favour old-school printf-style debugging) then you can use this code snippet to print to the Android log from the compiled native code:

#ifdef __ANDROID__

#include <android/log.h>

#define HACKY_ANDROID_LOG(...) __android_log_print(ANDROID_LOG_VERBOSE, "HACKY-DEBUGGING-FOR-ALL", __VA_ARGS__)

#else

#define HACKY_ANDROID_LOG(...) /* no-op off Android; variadic so printf-style calls still compile */

#endif

HACKY_ANDROID_LOG("this will go to the android logcat output");

HACKY_ANDROID_LOG("it accepts printf-style format sequences, like this: %d", 42);

Branch builds

Branch builds are a way to build and test Fenix using branches from application-services and firefox-android.

iOS is not currently supported, although we may add it in the future (see #4966).

Application-services nightlies

When we make non-breaking changes, we typically merge them into main and let them sit there until the next release. In order to check that the current main really does only have non-breaking changes, we run a nightly branch build from the main branch of application-services.

- To view the latest branch builds:

  - Open the latest decision task from the task index.

  - Click the “View Task” link

  - Click “Task Group” in the top-left

  - You should now see a list of tasks from the latest nightly

    - *-build tasks are for building the application. A failure here indicates there’s probably a breaking change that needs to be resolved.

      - To get the APK, navigate to branch-build-fenix-build and download app-x86-debug.apk from the artifacts list

    - branch-build-ac-test.* are the android-components test tasks. These are split up by gradle project, which matches how the android-components CI handles things. Running all the tests together often leads to failures.

    - branch-build-fenix-test is the Fenix test task. These tests are not split up per-project.

- These builds are triggered by our .cron.yml file

Guide to Testing a Rust Component

This document gives a high-level overview of how we test components in application-services. It will be useful to you if you’re adding a new component, or working on increasing the test coverage of an existing component.

If you are only interested in running the existing test suite, please consult the contributor docs and the tests.py script.

Unit and Functional Tests

Rust code

Since the core implementations of our components live in rust, so does the core of our testing strategy.

Each rust component should be accompanied by a suite of unit tests, following the guidelines for writing tests from the Rust Book. Some additional tips:

- Where possible, it’s better to use the Rust type system to make bugs impossible than to write tests to assert that they don’t occur in practice. But given that the ultimate consumers of our code are not in Rust, that’s sometimes not possible. The best idiomatic Rust API for a feature is not necessarily the best API for consuming it over an FFI boundary.

- Rust’s builtin assertion macros are sparse; we use the more_asserts crate for some additional helpers.

- Rust’s strict typing can make test mocks difficult. If there’s something you need to mock out in tests, make it a Trait and use the mockiato crate to mock it.

The Rust tests for a component should be runnable via cargo test.
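
As a minimal sketch of the mocking tip above, the following shows the trait-based pattern using a hand-written test double rather than mockiato (whose generated API is not reproduced here). The names Clock, SystemClock, FixedClock, and is_expired are hypothetical and exist only for this illustration.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

/// Hypothetical abstraction over "what time is it?" so tests can control it.
pub trait Clock {
    /// Milliseconds since the Unix epoch.
    fn now_ms(&self) -> u64;
}

/// Production implementation backed by the system clock.
pub struct SystemClock;

impl Clock for SystemClock {
    fn now_ms(&self) -> u64 {
        SystemTime::now()
            .duration_since(UNIX_EPOCH)
            .map(|d| d.as_millis() as u64)
            .unwrap_or(0)
    }
}

/// Test double that always reports the same instant.
pub struct FixedClock(pub u64);

impl Clock for FixedClock {
    fn now_ms(&self) -> u64 {
        self.0
    }
}

/// Code under test: it depends on the trait, not on the system clock.
pub fn is_expired(clock: &dyn Clock, expires_at_ms: u64) -> bool {
    clock.now_ms() >= expires_at_ms
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn expiry_is_deterministic_with_a_fixed_clock() {
        let clock = FixedClock(1_000);
        assert!(is_expired(&clock, 1_000));
        assert!(!is_expired(&clock, 1_001));
    }
}
```

Because is_expired only sees the trait, the test never touches the real clock and cannot become flaky because of it.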

FFI Layer code

We are currently using uniffi to generate most (and soon all!) of our FFI code and thus the FFI code itself does not need to be extensively tested.

Kotlin code

The Kotlin wrapper code for a component should have its own test suite, which should follow the general guidelines for testing Android code in Mozilla projects. In practice that means we use JUnit as the test framework and Robolectric to provide implementations of Android-specific APIs.

The Kotlin tests for a component should be runnable via ./gradlew <component>:test.

The tests at this layer are designed to ensure that the API binding code is working as intended, and should not repeat tests for functionality that is already well tested at the Rust level. But given that the Kotlin bindings involve a non-trivial amount of hand-written boilerplate code, it’s important to exercise that code thoroughly.

One complication with running Kotlin tests is that the code needs to run on your local development machine,

but the Kotlin code’s native dependencies are typically compiled and packaged for Android devices. The

tests need to ensure that an appropriate version of JNA and of the compiled Rust code is available in

their library search path at runtime. Our build.gradle files contain a collection of hackery that ensures

this, which should be copied into any new components.

The majority of our Kotlin bindings are autogenerated using uniffi and do not need extensive testing.

Swift code

The Swift wrapper code for a component should have its own test suite, using Apple’s Xcode unittest framework.

Due to the way that all rust components need to be compiled together into a single “megazord”

framework, this entire repository is a single Xcode project. The Swift tests for each component

thus need to live under megazords/ios-rust/MozillaTestServicesTests/ rather than in the directory

for the corresponding component. (XXX TODO: is this true? it would be nice to find a way to avoid having

them live separately because it makes them easy to overlook).

The tests at this layer are designed to ensure that the API binding code is working as intended, and should not repeat tests for functionality that is already well tested at the Rust level. But given that the Swift bindings involve a non-trivial amount of hand-written boilerplate code, it’s important to exercise that code thoroughly.

The majority of our Swift bindings are autogenerated using uniffi and do not need extensive testing.

Integration tests

End-to-end Sync Tests

⚠️ Those tests were disabled because of how flakey the stage server was. See #3909 ⚠️

The testing/sync-test directory contains a test harness for running sync-related

Rust components against a live Firefox Sync infrastructure, so that we can verify the functionality

end-to-end.

Each component that implements a sync engine should have a corresponding suite of tests in this directory.

- XXX TODO: places doesn’t.

- XXX TODO: send-tab doesn’t (not technically a sync engine, but still, it’s related)

- XXX TODO: sync-manager doesn’t

Android Components Test Suite

It’s important that changes in application-services are tested against upstream consumer code in the

android-components repo. This is currently

a manual process involving:

- Configuring your local checkout of android-components to use your local application-services build.

- Running the android-components test suite via ./gradle test.

- Manually building and running the android-components sample apps to verify that they’re still working.

Ideally some or all of this would be automated and run in CI, but we have not yet invested in such automation.

Ideas for Improvement

- ASan, Memsan, and maybe other sanitizer checks, especially around the points where we cross FFI boundaries.

- General-purpose fuzzing, such as via https://github.com/jakubadamw/arbitrary-model-tests

- We could consider making a mocking backend for viaduct, which would also be mockable from Kotlin/Swift.

- Add more end-to-end integration tests!

- Live device tests, e.g. actual Fenixes running in an emulator and syncing to each other.

- Run consumer integration tests in CI against main.

Smoke testing Application Services against end-user apps

This is a great way of finding integration bugs with application-services.

The testing can be done manually using substitution scripts, but we also have scripts that will do the smoke-testing for you.

Dependencies

Run pip3 install -r automation/requirements.txt to install the required Python packages.

Android Components

The automation/smoke-test-android-components.py script will clone (or use a local version of)

android-components and run a subset of its tests against the current application-services worktree.

It tries to only run tests that might be relevant to application-services functionality.

Fenix

The automation/smoke-test-fenix.py script will clone (or use a local version of) Fenix and

run tests against the current application-services worktree.

Firefox iOS

The automation/smoke-test-fxios.py script will clone (or use a local version of) Firefox iOS and

run tests against the current application-services worktree.

Testing faster: How to avoid making compile times worse by adding tests

Background

We’d like to keep cargo test, cargo build, cargo check, … reasonably

fast, and we’d really like to keep them fast if you pass -p for a specific

project. Unfortunately, there are a few ways this can become unexpectedly slow.

The easiest of these problems for us to combat at the moment is the unfortunate

placement of dev-dependencies in our build graph.

If you perform a cargo test -p foo, all dev-dependencies of foo must be

compiled before foo’s tests can start. This includes dependencies only used by

non-test targets, such as examples or benchmarks.

In an ideal world, cargo could run your tests as soon as it finished with the dependencies it needs for those tests, instead of waiting for your benchmark suite, the arg-parser your examples use, and so on.

Unfortunately, all cargo knows is that these are dev-dependencies, and not

which targets actually use them.

Additionally, unqualified invocations of cargo (that is, without -p) might

have an even worse time if we aren’t careful. If I run cargo test, cargo

knows every crate in the workspace needs to be built with all dev

dependencies. If places depends on fxa-client, all of fxa-client’s

dev-dependencies must be compiled, ready, and linked in at least to the lib

target before we can even think about starting on places.

We have not been careful about what shape the dependency graph ends up as when example code is taken into consideration (as it is by cargo during certain builds), and as a result, we have this problem. Which isn’t really a problem we want to fix: Example code can and should depend on several different components, and use them together in interesting ways.

So, because we don’t want to change what our examples do, or make major architectural changes of the non-test code for something like this, we need to do something else.

The Solution

To fix this, we manually insert “cuts” into the dependency graph to help cargo out. That is, we pull some of these build targets (e.g. examples, benchmarks, tests if they cause a substantial compile overhead) into their own dedicated crates so that:

- They can be built in parallel with each other.

- Crates depending on the component itself are not waiting on the test/bench/example build in order for their test build to begin.

- A potentially smaller set of our crates need to be rebuilt – and a smaller set of possible configurations exist meaning fewer items to add pressure to caches.

- …

Some rules of thumb for when / when not to do this:

- All rust examples should be put in examples/*.

- All rust benchmarks should be put in testing/separated/*. See the section below on how to set your benchmark up to avoid redundant compiles.

- Rust tests which bring in heavyweight dependencies should be evaluated on an ad-hoc basis. If you’re concerned, measure how long compilation takes with/without, and consider how many crates depend on the crate where the test lives (e.g. a slow test in support/foo might be far worse than one in a leaf crate), and so on.

Appendix: How to avoid redundant compiles for benchmarks and integration tests

To be clear, this is way more important for benchmarks (which always compile as release and have a costly link phase).

Say you have a directory structure like the following:

mycrate
├── src
│   └── lib.rs
│   ...
├── benches
│   ├── bench0.rs
│   ├── bench1.rs
│   └── bench2.rs
├── tests
│   ├── test0.rs
│   ├── test1.rs
│   └── test2.rs
└── ...

When you run your integration tests or benchmarks, each of test0, test1,

test2 or bench0, bench1, bench2 is compiled as its own crate that runs

the tests in question and exits.

That means 3 benchmark executables are built on release settings, and 3 integration test executables.

If you’ve ever tried to add a piece of shared utility code into your integration

tests, only to have cargo (falsely) complain that it is dead code: this is why.

Even if test0.rs and test2.rs both use the utility function, unless

every test crate uses every shared utility, the crate that doesn’t will

complain.

(Aside: This turns out to be an unintentional secondary benefit of this approach

– easier shared code among tests, without having to put a

#![allow(dead_code)] in your utils.rs. We haven’t hit that very much here,

since we tend to stick to unit tests, but it came up in mentat several times,

and is a frequent complaint people have)

Anyway, the solution here is simple: Create a new crate. If you were working in

components/mycrate and you want to add some integration tests or benchmarks,

you should do cargo new --lib testing/separated/mycrate-test (or

.../mycrate-bench).

Delete .../mycrate-test/src/lib.rs. Yep, really, we’re making a crate that

only has integration tests/benchmarks (See the “FAQ0” section at the bottom of

the file if you’re getting incredulous).

Now, add a src/tests.rs or a src/benches.rs. This file should contain mod foo; declarations for each submodule containing tests/benchmarks, if any.

For benches, this is also where you set up the benchmark harness (refer to benchmark library docs for how).
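
As a rough sketch, a src/tests.rs root for such a crate might look like the following. The module names (utils, roundtrip, edge_cases) and the helper sample_record are hypothetical. In a real crate each module would normally live in its own file and be pulled in with a mod declaration; they are written inline here only so that the snippet stands alone.

```rust
// src/tests.rs: the single root of the test crate (hypothetical layout).
// In a real crate these would be `mod utils;`, `mod roundtrip;`, and so on,
// each backed by a file next to this one.

/// Shared helpers. Every test module is compiled into the same target,
/// so a helper only needs one user anywhere to count as "used".
mod utils {
    pub fn sample_record() -> (String, u32) {
        ("example".to_string(), 42)
    }
}

mod roundtrip {
    use super::utils::sample_record;

    #[test]
    fn record_has_expected_fields() {
        let (name, value) = sample_record();
        assert_eq!(name, "example");
        assert_eq!(value, 42);
    }
}

mod edge_cases {
    #[test]
    fn empty_name_is_empty() {
        assert!(String::new().is_empty());
    }
}
```

Forgetting to list a module here means its tests silently never run, which is the trade-off discussed in FAQ0.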

Now, for a test, add the following to your Cargo.toml:

[[test]]

name = "mycrate-test"

path = "src/tests.rs"

and for a benchmark, add:

[[bench]]

name = "mycrate-benches"

path = "src/benches.rs"

harness = false

Because we aren’t using src/lib.rs, this is what declares which file is the

root of the test/benchmark crate. Because there’s only one target (unlike with

tests/* / benches/* under default settings), this will compile more quickly.

Additionally, src/tests.rs and src/benches.rs will behave like a normal

crate, the only difference being that they don’t produce a lib, and that they’re

triggered by cargo test/cargo bench respectively.

FAQ0: Why put tests/benches in src/* instead of disabling autotests/autobenches

Instead of putting tests/benchmarks inside src, we could just delete the src

dir outright, and place everything in tests/benches.

Then, to get the same one-rebuild-per-file behavior that we’ll get in src, we

need to add autotests = false or autobenches = false to our Cargo.toml,

add a root tests/tests.rs (or benches/benches.rs) containing mod decls

for all submodules, and finally reference that “root” in the Cargo.toml

[[test]] / [[bench]] list, exactly the same way we did for using src/*.

This would work, and on the surface, using tests/*.rs and benches/*.rs seems

more consistent, so it seems weird to use src/*.rs for these files.

My reasoning is as follows: Almost universally, tests/*.rs, examples/*.rs,

benches/*.rs, etc. are automatic. If you add a test into the tests folder, it

will run without anything else.

If we’re going to set up one-build-per-{test,bench}suite as I described, this

fundamentally cannot be true. In this paradigm, if you add a test file named

blah.rs, you must add a mod blah; declaration to the parent module.

It seems both confusing and error-prone to use tests/*, but have it behave

that way. However, this is absolutely the normal behavior for files in src/*.rs

– when you add a file, you then need to add it to its parent module, and this

is something Rust programmers are pretty used to.

(In fact, we even replicated this behavior (for no reason) in the places

integration tests, and added the mod declarations to a “controlling” parent

module – It seems weird to be in an environment where this isn’t required)

So, that’s why. This way, we make it way less likely that you add a test file

to some directory, and have it get ignored because you didn’t realize that in

this one folder, you need to add a mod mytest into a neighboring tests.rs.

Debugging Sql

It can be quite tricky to debug what is going on with a SQL statement, especially once the SQL gets complicated or many triggers are involved.

The sql_support crate provides some utilities to help. Note that

these utilities are gated behind a debug-tools feature. The module

provides docstrings, so you should read them before you start.

This document describes how to use these capabilities and we’ll use places

as an example.

First, we must enable the feature:

--- a/components/places/Cargo.toml

+++ b/components/places/Cargo.toml

@@ -22,7 +22,7 @@ lazy_static = "1.4"

url = { version = "2.1", features = ["serde"] }

percent-encoding = "2.1"

caseless = "0.2"

-sql-support = { path = "../support/sql" }

+sql-support = { path = "../support/sql", features=["debug-tools"] }

and we probably need to make the debug functions available:

--- a/components/places/src/db/db.rs

+++ b/components/places/src/db/db.rs

@@ -108,6 +108,7 @@ impl ConnectionInitializer for PlacesInitializer {

";

conn.execute_batch(initial_pragmas)?;

define_functions(conn, self.api_id)?;

+ sql_support::debug_tools::define_debug_functions(conn)?;

We now have a Rust function print_query() and a SQL function dbg() available.

Let’s say we were trying to debug a test such as test_bookmark_tombstone_auto_created.

We might want to print the entire contents of a table, then instrument a query to check

what the value of a query is. We might end up with a patch something like:

index 28f19307..225dccbb 100644

--- a/components/places/src/db/schema.rs

+++ b/components/places/src/db/schema.rs

@@ -666,7 +666,8 @@ mod tests {

[],

)

.expect("should insert regular bookmark folder");

- conn.execute("DELETE FROM moz_bookmarks WHERE guid = 'bookmarkguid'", [])

+ sql_support::debug_tools::print_query(&conn, "select * from moz_bookmarks").unwrap();

+ conn.execute("DELETE FROM moz_bookmarks WHERE dbg('CHECKING GUID', guid) = 'bookmarkguid'", [])

.expect("should delete");

// should have a tombstone.

assert_eq!(

There are 2 things of note:

- We used the print_query function to dump the entire moz_bookmarks table before executing the query.

- We instrumented the query to print the guid every time sqlite reads a row and compares it against a literal.

The output of this test now looks something like:

running 1 test

query: select * from moz_bookmarks

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

| id | fk | type | parent | position | title | dateAdded | lastModified | guid | syncStatus | syncChangeCounter |

+====+======+======+========+==========+=========+===============+===============+==============+============+===================+

| 1 | null | 2 | null | 0 | root | 1686248350470 | 1686248350470 | root________ | 1 | 1 |

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

| 2 | null | 2 | 1 | 0 | menu | 1686248350470 | 1686248350470 | menu________ | 1 | 1 |

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

| 3 | null | 2 | 1 | 1 | toolbar | 1686248350470 | 1686248350470 | toolbar_____ | 1 | 1 |

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

| 4 | null | 2 | 1 | 2 | unfiled | 1686248350470 | 1686248350470 | unfiled_____ | 1 | 1 |

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

| 5 | null | 2 | 1 | 3 | mobile | 1686248350470 | 1686248350470 | mobile______ | 1 | 1 |

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

| 6 | null | 3 | 1 | 0 | null | 1 | 1 | bookmarkguid | 2 | 1 |

+----+------+------+--------+----------+---------+---------------+---------------+--------------+------------+-------------------+

test db::schema::tests::test_bookmark_tombstone_auto_created ... FAILED

failures:

---- db::schema::tests::test_bookmark_tombstone_auto_created stdout ----

CHECKING GUID root________

CHECKING GUID menu________

CHECKING GUID toolbar_____

CHECKING GUID unfiled_____

CHECKING GUID mobile______

CHECKING GUID bookmarkguid

It’s unfortunate that the output of print_query() goes to the tty while the output of dbg goes to stderr, so

you might find the output isn’t quite intermingled as you would expect, but it’s better than nothing!

Dependency Management Guidelines

This repository uses third-party code from a variety of sources, so we need to be mindful of how these dependencies will affect our consumers. Considerations include:

- General code quality.

- Licensing compatibility.

- Handling of security vulnerabilities.

- The potential for supply-chain compromise.

We’re still evolving our policies in this area, but these are the guidelines we’ve developed so far.

Rust Code

Unlike Firefox,

we do not vendor third-party source code directly into the repository. Instead we rely on

Cargo.lock and its hash validation to ensure that each build uses an identical copy

of all third-party crates. These are the measures we use for ongoing maintenance of our

existing dependencies:

- Check Cargo.lock into the repository.

- Generate built artifacts using the --locked flag to cargo build, as an additional assurance that the existing Cargo.lock will be respected.

- Regularly run cargo-audit in CI to alert us to security problems in our dependencies.

- It runs on every PR, and once per hour on the main branch

- Use a home-grown tool to generate a summary of dependency licenses and to check them for compatibility with MPL-2.0.

- Check these summaries into the repository and have CI alert on unexpected changes, to guard against pulling in new versions of a dependency under a different license.

Adding a new dependency, whether we like it or not, is a big deal - that dependency and everything it brings with it will become part of Firefox-branded products that we ship to end users. We try to balance this responsibility against the many benefits of using existing code, as follows:

- In general, be conservative in adding new third-party dependencies.

- For trivial functionality, consider just writing it yourself. Remember the cautionary tale of left-pad.

- Check if we already have a crate in our dependency tree that can provide the needed functionality.

- Prefer crates that have a high level of due-diligence already applied, such as:

- Crates that are already vendored into Firefox.

- Crates from rust-lang-nursery.

- Crates that appear to be widely used in the rust community.

- Check that it is clearly licensed and is MPL-2.0 compatible.

- Take the time to investigate the crate’s source and ensure it is suitably high-quality.

- Be especially wary of uses of unsafe, or of code that is unusually resource-intensive to build.

- Dev dependencies do not require as much scrutiny as dependencies that will ship in consuming applications, but should still be given some thought.

- There is still the potential for supply-chain compromise with dev dependencies!

- As part of the PR that introduces the new dependency:

- Regenerate dependency summary files using the regenerate_dependency_summaries.sh script.

- Explicitly describe your consideration of the above points.

Updating to new versions of existing dependencies is a normal part of software development and is not accompanied by any particular ceremony.

Android/Kotlin Code

We currently depend only on the following Kotlin dependencies:

We currently depend on the following developer dependencies in the Kotlin codebase, but they do not get included in built distribution files:

- detekt

- ktlint

No additional Kotlin dependencies should be added to the project unless absolutely necessary.

iOS/Swift Code

We currently do not depend on any Swift dependencies. And no Swift dependencies should be added to the project unless absolutely necessary.

Other Code

We currently depend on local builds of the following system dependencies:

No additional system dependencies should be added to the project unless absolutely necessary.

Adding a new component to Application Services

This is a rapid-fire list for adding a component from scratch and generating Kotlin/Swift bindings.

The Rust Code

Your component should live under ./components in this repo.

Use cargo new --lib ./components/<your_crate_name> to create a new library crate.

See the Guide to Building a Rust Component for general advice on designing and structuring the actual Rust code, and follow the Dependency Management Guidelines if your crate introduces any new dependencies.

Use UniFFI to define how your crate’s

API will get exposed to foreign-language bindings. Look up the installed uniffi

version on other packages (e.g. grep uniffi components/init_rust_components/Cargo.toml) and use the same version. Place

the following in your Cargo.toml:

[dependencies]

uniffi = { version = "<current uniffi version>" }

New components should prefer using the

proc-macro approach rather than

a UDL file based approach. If you do use a UDL file, add this to Cargo.toml as well.

[build-dependencies]

uniffi = { version = "<current uniffi version>" }

Include your new crate in the application-services workspace, by adding

it to the members and default-members lists in the Cargo.toml at

the root of the repository.

Run cargo check -p <your_crate_name> in the repository root to confirm that

things are configured properly. This will also have the side-effect of updating

Cargo.lock to contain your new crate and its dependencies.

The Android Bindings

Run the cargo start-bindings android <your_crate_name> <component_description> command to auto-generate the initial code. Follow the directions in the output.

You will end up with a directory structure something like this:

components/<your_crate_name>/
  - Cargo.toml
  - uniffi.toml
  - src/
    - Rust code here.
  - android/
    - build.gradle
    - src/main/AndroidManifest.xml

Dependent crates

If your crate uses types from another crate in its public API, you need to include a dependency for

the corresponding project in your android/build.gradle file.

For example, suppose you use the remote_settings::RemoteSettingsServer type in your public API so that

consumers can select which server they want. In that case, you need to add a dependency on the

remotesettings project:

dependencies {

api project(":remotesettings")

}

Async dependencies

If your component exports async functions, add the following to your android/build.gradle file:

dependencies {

implementation libs.kotlin.coroutines

}

Hand-written code

You can include hand-written Kotlin code alongside the automatically generated bindings, by placing .kt files in a directory named:

./android/src/test/java/mozilla/appservices/<your_crate_name>/

You can write Kotlin-level tests that consume your component’s API, by placing .kt files in a directory named:

./android/src/test/java/mozilla/appservices/<your_crate_name>/.

You can run the tests with ./gradlew <your_crate_name>:test

The iOS Bindings

- Run the cargo start-bindings ios <your_crate_name> command to auto-generate the initial code

- Run cargo start-bindings ios-focus <your_crate_name> if you also want to expose your component to Focus.

- Follow the directions in the output.

You will end up with a directory structure something like this:

components/<your_crate_name>/
  - Cargo.toml
  - uniffi.toml
  - src/
    - Rust code here.

Adding your component to the Swift Package Megazord

For more information on how we ship components using the Swift Package Manager, check the ADR that introduced the Swift Package Manager.

Add your component into the iOS “megazord” through the local Swift Package Manager (SPM) package MozillaRustComponentsWrapper. Note this SPM is for ease of testing APIs locally. The official SPM that is consumed by firefox-ios is a local package in their repo.

- Place your Swift test code in:

megazords/ios-rust/tests/MozillaRustComponentsWrapper/

Note: Swift-specific tests are ideally placed in the consuming app, as that gives better integration coverage and ensures we’re accurately testing how the component is being consumed.

That’s it! At this point, if you don’t intend on writing tests (are you sure?) you can skip this next section.

Writing and Running Tests

The current system combines all rust crates into one binary (megazord). To use your rust APIs simply import the local SPM into your tests:

@testable import MozillaRustComponentsWrapper

To test your component:

- Run the script:

./automation/build_ios_artifacts.sh

The script will:

- Build the XCFramework (combines all rust binaries for SPM)

- Generate UniFFI bindings (generated files will be found in megazords/ios-rust/sources/MozillaRustComponentsWrapper/Generated/)

- Generate Glean metrics

- Run any tests found in the test dir mentioned above

Distribution of the component

The UniFFI files will be generated & packaged via taskcluster/scripts/build-and-test-swift.py.

Consumers (eg: Firefox iOS) pull the latest artifacts via a nightly GitHub action. Once your changes are pulled via a nightly release you’ll be able to use your new APIs!

Note: If you don’t want to wait for a nightly, once the CI finishes your build – you can request the action run in firefox-ios to get it sooner

Note pt2: If you want to locally test against firefox-ios, follow this guide

Guide to Building a Syncable Rust Component

This is a guide to creating a new Syncable Rust Component like many of the components in this repo. If you are looking for information on how to build (i.e., compile, etc.) the existing components, you are looking for our build documentation.

Welcome!

It’s great that you want to build a Rust Component - this guide should help get you started. It documents some nomenclature, best practices, and other tips and tricks.

This document is just for general guidance - every component will be different and we are still learning how to make these components. Please update this document with these learnings.

To repeat with emphasis - please consider this a living document.

General design and structure of the component

We think components should be structured as described here.

We build libraries, not frameworks

Think of building a “library”, not a “framework” - the application should be in control and calling functions exposed by your component, not providing functions for your component to call.

The “store” is the “entry-point”

[Note that some of the older components use the term “store” differently; we

should rename them! In Places, it’s called an “API”; in Logins an “engine”. See

webext-storage for a more recent component that uses the term “Store” as we

think it should be used.]

The “Store” is the entry-point for the consuming application - it provides the core functionality exposed by the component and manages your databases and other singletons. The responsibilities of the “Store” will include things like creating the DB if it doesn’t exist, doing schema upgrades etc.

The functionality exposed by the “Store” will depend on the complexity of the

API being exposed. For example, for webext-storage, where there are only a

handful of simple public functions, it just directly exposes all the

functionality of the component. However, for Places, which has a much more

complex API, the (logical) Store instead supplies “Connection” instances which

expose the actual functionality.

Using sqlite

We prefer sqlite instead of (say) JSON files or RKV.

Always put sqlite into WAL mode, then have exactly 1 writer connection and as

many reader connections as you need - which will depend on your use-case - for

example, webext_storage has 1 reader, while places has many.

(Note that places has 2 writers (one for sync, one for the api), but we believe this was a mistake and that we should have been able to make things work better with exactly 1 writer shared between sync and the api.)

We typically have a “DB” abstraction which manages the database itself - the logic for handling schema upgrades etc and enforcing the “only 1 writer” rule is done by this.

However, this is just a convenience - the DB abstractions aren’t really passed

around - we just pass raw connections (or transactions) around. For example, if

there’s a utility function that reads from the DB, it will just have a Rusqlite

connection passed. (Again, older components don’t really do this well, but

webext-storage does)

We try and leverage rust to ensure transactions are enforced at the correct

boundaries - for example, functions which write data but which must be done as

part of a transaction will accept a Rusqlite Transaction reference as the

param, whereas something that only reads the Db will accept a Rusqlite

Connection - note that because Transaction supports

Deref<Target = Connection>, you can pass a &Transaction wherever a

&Connection is needed - but not vice-versa.
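
The coercion described above can be sketched with stand-in types. The Connection and Transaction structs below are hypothetical simplifications written for this illustration; they are not the Rusqlite types, and only mimic the relevant shape (a transaction that borrows a connection and derefs to it).

```rust
use std::cell::RefCell;
use std::ops::Deref;

/// Stand-in for a database connection (hypothetical, in-memory only).
pub struct Connection {
    rows: RefCell<Vec<String>>,
}

impl Connection {
    pub fn new() -> Self {
        Connection { rows: RefCell::new(Vec::new()) }
    }

    /// Begin a pretend transaction. Taking `&mut self` means the connection
    /// cannot be used directly while the transaction is alive.
    pub fn transaction(&mut self) -> Transaction<'_> {
        Transaction { conn: self }
    }
}

/// Stand-in for a transaction borrowing its connection.
pub struct Transaction<'conn> {
    conn: &'conn Connection,
}

impl<'conn> Deref for Transaction<'conn> {
    type Target = Connection;

    fn deref(&self) -> &Connection {
        self.conn
    }
}

/// Read-only helper: any connection will do.
pub fn count_rows(conn: &Connection) -> usize {
    conn.rows.borrow().len()
}

/// Write helper: the signature demands that a transaction is open.
pub fn insert_row(tx: &Transaction<'_>, value: &str) {
    // Field access auto-derefs from the transaction to the connection.
    tx.rows.borrow_mut().push(value.to_string());
}

/// Example flow: write inside a transaction, read through it as well.
pub fn demo() -> usize {
    let mut conn = Connection::new();
    let tx = conn.transaction();
    insert_row(&tx, "a");
    insert_row(&tx, "b");
    // `&Transaction` coerces to `&Connection` here via `Deref`.
    // The reverse, `insert_row(&conn, ..)`, would not compile.
    count_rows(&tx)
}
```

The point of the split signatures is that a caller cannot reach insert_row without first opening a transaction, so the boundary is checked at compile time.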

Meta-data

You are likely to have a table just for key/value metadata, and this table will be used by sync (and possibly other parts of the component) to track the sync IDs, lastModified timestamps etc.

Schema management

The schemas are stored in the tree in .sql files and pulled into the source at

build time via include_str!. Depending on the complexity of your component,

there may be a need for different Connections to have different Sql (for

example, it may be that only your ‘write’ connection requires the sql to define

triggers or temp tables, so these might be in their own file.)

Because requirements evolve, there will be a need to support schema upgrades.

This is done by way of sqlite’s PRAGMA user_version - which can be thought of

as simple metadata for the database itself. In short, immediately after opening

the database for the first time, we check this version and if it’s less than

expected we perform the schema upgrades necessary, then re-write the version

to the new version.

This is easier to read than explain, so read the upgrade() function in the Places schema code.
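As a rough illustration of the version check described above (and not a copy of the Places code), the sketch below models the database as a hypothetical in-memory struct named FakeDb. Its user_version field plays the role of the value SQLite stores for PRAGMA user_version. The VERSION constant, the step labels and the refusal to open a database newer than the code are all assumptions made for the example.

```rust
/// Hypothetical in-memory stand-in for a database. `user_version` models
/// the value SQLite keeps for `PRAGMA user_version`.
pub struct FakeDb {
    pub user_version: u32,
    /// Labels of the upgrade steps that have been run (illustration only).
    pub applied: Vec<String>,
}

/// The schema version this build of the code expects (illustrative value).
pub const VERSION: u32 = 3;

/// Bring the schema up to `VERSION`, one step at a time, re-writing the
/// stored version after each step.
pub fn upgrade(db: &mut FakeDb) -> Result<(), String> {
    // Assumption: a database written by newer code is rejected.
    if db.user_version > VERSION {
        return Err(format!(
            "database version {} is newer than expected version {}",
            db.user_version, VERSION
        ));
    }
    while db.user_version < VERSION {
        // In a real component each arm would run SQL against the database.
        let step = match db.user_version {
            0 => "create initial tables",
            1 => "add change counter column",
            2 => "create meta table",
            v => return Err(format!("no upgrade path from version {}", v)),
        };
        db.applied.push(step.to_string());
        db.user_version += 1;
    }
    Ok(())
}
```

Running the steps one version at a time means a database at any older version follows the same path, and calling upgrade on an up-to-date database does nothing.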

You will need to be a bit careful here because schema upgrades are going to block the calling application immediately after they upgrade to a new version, so if your schema change requires a table scan of a massive table, you are going to have a bad time. Apart from that though, you are largely free to do whatever SQLite lets you do!

Note that most of our components have very similar schema and database management code - these are screaming out to be refactored so common logic can be shared. Please be brave and have a go at this!

Triggers

We tend to like triggers for encompassing application logic - for example, if updating one row means a row in a different table should be updated based on that data, we’d tend to prefer, eg, an AFTER UPDATE trigger over having our code manually implement the logic.

However, you should take care here, because functionality based on triggers is difficult to debug (eg, logging in a trigger is difficult) and the functionality can be difficult to locate (eg, users unfamiliar with the component may wonder why they can’t find certain functionality in the Rust code and may not consider looking in the SQLite triggers).

You should also be careful when creating triggers on persistent main tables. For example, bumping the change counter isn’t a good use for a trigger, because it’ll run for all changes on the table—including those made by Sync. This means Sync will end up tracking its own changes, and getting into infinite syncing loops. Triggers on temporary tables, or ones that are used for bookkeeping where the caller doesn’t matter, like bumping the foreign reference count for a URL, are generally okay.

General structure of the rust code

We prefer flatter module hierarchies where possible. For example, in Places we ended up with sync_history and sync_bookmarks sub-modules rather than a sync submodule itself with history and bookmarks.

Note that the raw connections are never exposed to consumers - for example, they will tend to be stored as private fields in, eg, a Mutex.
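A minimal sketch of that shape follows, assuming a hypothetical Store type and a stand-in Connection struct rather than a real database handle. The only thing it is meant to show is that the connection lives in a private field behind a Mutex, so consumers can reach it only through the public methods.

```rust
use std::sync::Mutex;

/// Hypothetical stand-in for a raw database connection.
struct Connection {
    rows: Vec<String>,
}

/// Public component API. `db` is private, so the raw connection never
/// leaks to consumers.
pub struct Store {
    db: Mutex<Connection>,
}

impl Store {
    pub fn new() -> Self {
        Store {
            db: Mutex::new(Connection { rows: Vec::new() }),
        }
    }

    /// Lock the connection for the duration of the write.
    pub fn add(&self, value: &str) {
        let mut conn = self.db.lock().unwrap();
        conn.rows.push(value.to_string());
    }

    /// Lock the connection for the duration of the read.
    pub fn count(&self) -> usize {
        self.db.lock().unwrap().rows.len()
    }
}
```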

Syncing

The traits you need to implement to sync aren’t directly covered here.

All meta-data related to sync must be stored in the same database as the data itself - often in a meta table.

All logic for knowing which records need to be synced must be part of the application logic, and will often be implemented using triggers. It’s quite common for components to use a “change counter” strategy, which can be summarized as:

- Every table which defines the “top level” items being synced will have a column called something like ‘sync_change_counter’ - the app will probably track this counter manually instead of using a trigger, because sync itself will need different behavior when it updates the records.

- At sync time, items with a non-zero change counter are candidates for syncing.

- As the sync starts, for each item, the current value of the change counter is remembered. At the end of the sync, the counter is decremented by this value. Thus, items which were changed between the time the sync started and completed will be left with a non-zero change counter at the end of the sync.
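The counter arithmetic in the list above can be sketched as follows. This is an illustration under stated assumptions, not code from any component: Table is a hypothetical in-memory stand-in for a database table, and the method names are invented for the example.

```rust
use std::collections::HashMap;

/// Hypothetical in-memory stand-in for a table that has a
/// `sync_change_counter` column, keyed by item guid.
#[derive(Default)]
pub struct Table {
    pub counters: HashMap<String, u32>,
}

impl Table {
    /// A local (non-sync) write bumps the item's counter.
    pub fn local_change(&mut self, guid: &str) {
        *self.counters.entry(guid.to_string()).or_insert(0) += 1;
    }

    /// As the sync starts: remember the counter of every item with a
    /// non-zero counter. These items are the candidates for syncing.
    pub fn start_sync(&self) -> HashMap<String, u32> {
        let mut snapshot = HashMap::new();
        for (guid, n) in &self.counters {
            if *n > 0 {
                snapshot.insert(guid.clone(), *n);
            }
        }
        snapshot
    }

    /// At the end of the sync: decrement each counter by the remembered
    /// value, so changes made during the sync leave a non-zero counter.
    pub fn finish_sync(&mut self, snapshot: &HashMap<String, u32>) {
        for (guid, seen) in snapshot {
            if let Some(n) = self.counters.get_mut(guid) {
                *n = n.saturating_sub(*seen);
            }
        }
    }
}
```

Decrementing by the remembered value, rather than resetting to zero, is what keeps an item that was edited while the sync was running as a candidate for the next sync.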

Syncing FAQs

This section is stolen from this document

What’s the global sync ID and the collection sync ID?

Both are GUIDs, and both are used to identify when the data on the server has changed radically underneath us (eg, when looking at lastModified is no longer a sane thing to do).

The “global sync ID” changing means that every collection needs to be assumed as having changed radically, whereas just the “collection sync ID” changing means just that one collection.

These global IDs are most likely to change on a node reassignment (which should be rare now with durable storage), a password reset, etc. An example of when the collection ID will change is a “bookmarks restore” - handling an old version of a database re-appearing is why we store these IDs in the database itself.

What’s get_sync_assoc, why is it important? What is StoreSyncAssociation?

They are all used to track the guids above. It’s vitally important we know when these guids change.

StoreSyncAssociation is a simple enum which reflects the state a sync engine can be in - either Disconnected (ie, we have no idea what the GUIDs are) or Connected, where we know what we think the IDs are (but the server may or may not match with this).

These GUIDs will typically be stored in the DB in the metadata table.
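To make the two states concrete, here is a minimal sketch of such an enum. The SyncIds struct, its field names and the ids_changed helper are hypothetical and exist only for this illustration. The real definitions live in the sync crates and may differ. Treating Disconnected as "changed" is also an assumption of the sketch.

```rust
/// Hypothetical pair of IDs; the field names are illustrative.
#[derive(Clone, Debug, PartialEq)]
pub struct SyncIds {
    /// The global sync ID.
    pub global: String,
    /// The sync ID of one collection.
    pub coll: String,
}

/// Sketch of the two states a sync engine can be in.
#[derive(Clone, Debug, PartialEq)]
pub enum StoreSyncAssociation {
    /// We have no idea what the GUIDs are.
    Disconnected,
    /// We know what we think the IDs are; the server may disagree.
    Connected(SyncIds),
}

/// Hypothetical helper: returns true when the locally stored IDs cannot be
/// trusted to describe what is on the server.
pub fn ids_changed(local: &StoreSyncAssociation, server: &SyncIds) -> bool {
    match local {
        // Assumption: with nothing to compare against, assume a change.
        StoreSyncAssociation::Disconnected => true,
        // Either the global or the collection ID differing counts.
        StoreSyncAssociation::Connected(ids) => ids != server,
    }
}
```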

What is apply_incoming versus sync_finished?

apply_incoming is where any records incoming from the server (ie, possibly all records on the server if this is a first-sync, records with a timestamp later than our last sync otherwise) are processed.

sync_finished is where we’ve done all the sync work other than uploading new changes to the server.

What’s the difference between reset and wipe?

- Reset means “I don’t know what’s on the server - I need to reconcile everything there with everything I have”. IOW, a “first sync”

- Wipe means literally “wipe all server data”

Exposing to consumers

You will need an FFI or some other way of exposing stuff to your consumers.

We use a tool called UniFFI to automatically generate FFI bindings from the Rust code.

If UniFFI doesn’t work for you, then you’ll need to hand-write the FFI layer. Here are some earlier blog posts on the topic which might be helpful:

- Building and Deploying a Rust library on Android

- Building and Deploying a Rust library on iOS

- Blog post re: lessons in binding to Rust code from iOS

The above are likely to be superseded by the UniFFI docs, but for now, good luck!

Naming Conventions

All names in this project should adhere to the guidelines outlined in this document.

Rust Code

TL;DR: do what Rust’s builtin warnings and clippy lints tell you (and CI will fail if there are any unresolved warnings or clippy lints).

Overview

- All variable names, function names, module names, and macros in Rust code should follow typical snake_case conventions.

- All Rust types, traits, structs, and enum variants must follow UpperCamelCase.

- Static and constant variables should be written in SCREAMING_SNAKE_CASE.

For more in-depth Rust conventions, see the Rust Style Guide.

Examples:

fn sync15_passwords_get_all()

struct PushConfiguration{...}

const COMMON_SQL

Swift Code

Overview

- Names of types and protocols are UpperCamelCase.

- All other uses are lowerCamelCase.

For more in-depth Swift conventions, check out the Swift API Design Guidelines.

Examples:

enum CheckChildren{...}

func checkTree()

public var syncKey: String

Kotlin Code

If a source file contains only a top-level class, the source file should reflect the case-sensitive name of the class plus the .kt extension. Otherwise, if the source contains multiple top-level declarations, choose a name that describes the contents of the file, apply UpperCamelCase and append the .kt extension.

Overview

- Names of packages are always lower case and do not include underscores. Using multi-word names should be avoided. However, if used, they should be concatenated or use lowerCamelCase.

- Names of classes and objects use UpperCamelCase.

- Names of functions, properties, and local variables use lowerCamelCase.

For more in-depth Kotlin Conventions, see the Kotlin Style Guide.

Examples:

//FooBar.kt

class FooBar{...}

fun fromJSONString()

package mozilla.appservices.places

Rust + Android FAQs

How do I expose Rust code to Kotlin?

Use UniFFI, which can produce Kotlin bindings for your Rust code from an interface definition file.

If UniFFI doesn’t currently meet your needs, please open an issue to discuss how the tool can be improved.

As a last resort, you can make hand-written bindings from Rust to Kotlin, essentially manually performing the steps that UniFFI tries to automate for you: flatten your Rust API into a bunch of pub extern "C" functions, then use JNA to call them from Kotlin. The details of how to do that are well beyond the scope of this document.

How should I name the package?

Published packages should be named org.mozilla.appservices.$NAME where $NAME is the name of your component, such as logins. The Java namespace in which your package defines its classes etc should be mozilla.appservices.$NAME.*.

How do I publish the resulting package?

Add it to .buildconfig-android.yml in the root of this repository. This will cause it to be automatically included as part of our release publishing pipeline.

How do I know what library name to load to access the compiled Rust code?

Assuming that you’re building the Rust code as part of the application-services build and release process, your pub extern "C" API should always be available from a file named libmegazord.so.

What challenges exist when calling back into Kotlin from Rust?

There are a number of them. The issue boils down to the fact that you need to be completely certain that a JVM is associated with a given thread in order to call Java code on it. The difficulty is that the JVM can GC its threads and will not let Rust know about it.

JNA can work around this for us to some extent, at the cost of some complexity.

The approach it takes is essentially to spawn a thread for each callback invocation. If you are certain you’re going to do a lot of callbacks and they all originate on the same thread, you can have them all run on a single thread by using the CallbackThreadInitializer.

With the help of JNA’s workarounds, calling back from Rust into Kotlin isn’t too bad so long as you ensure that Kotlin cannot GC the callback while Rust code holds onto it (perhaps by stashing it in a global variable), and so long as you can either accept the overhead of extra threads being instantiated on each call or are willing to manage the threads explicitly.

Note that the situation would be somewhat better if we used JNI directly (and not JNA), but this would cause us to need to generate different Rust FFI code for Android than for iOS.

Ultimately, in any case where there is an alternative to using a callback, you should probably pursue that alternative.

For example, if you’re using callbacks to implement async I/O, it’s likely better to move to doing a blocking call, and have the calling code dispatch it on a background thread. Running such things on a background thread is very easy in Kotlin, is in line with the Android documentation on JNI usage, and in our experience is vastly simpler and less painful than using callbacks.

(Of course, not every case is solvable like this).

Why are we using JNA rather than JNI, and what tradeoffs does that involve?

We get a couple of things from using JNA that we wouldn’t with JNI.

- We are able to use the same Rust FFI code on all platforms. If we used JNI we’d need to generate an Android-specific Rust FFI crate that used the JNI APIs, and a separate Rust FFI crate for exposing to Swift.

-