Introduction

The Glean SDKs are modern cross-platform telemetry client libraries and are a part of the Glean project.

The Glean SDKs are available for several programming languages and development environments. Each SDK aims to contain the same group of features with similar, but idiomatic APIs.

To learn more about each SDK, refer to the SDKs overview page.

To get started adding Glean to your project, choose one of the following guides:

- Kotlin: Get started adding Glean to an Android application or library.

- Swift: Get started adding Glean to an iOS application or library.

- Python: Get started adding Glean to any Python project.

- Rust: Get started adding Glean to any Rust project or library.

- JavaScript: Get started adding Glean to a website, web extension or Node.js project.

- QML: Get started adding Glean to a Qt/QML application or library.

- Server: Get started adding Glean to a server-side application.

For development documentation on the Glean SDK, refer to the Glean SDK development book.

Sections

User Guides

This section of the book contains step-by-step guides and essays detailing how to achieve specific tasks with each Glean SDK.

It contains guides on the first steps of integrating Glean into your project, choosing the right metric type for you, debugging products that use Glean and Glean's built-in error reporting mechanism.

If you want to start using Glean to report data, this is the section you should read.

API Reference

This section of the book contains reference pages for Glean's user-facing APIs.

If you are looking for information about a specific Glean API, this is the section you should check out.

SDK Specific Information

This section contains guides and essays regarding specific usage information and possibilities in each Glean SDK.

Check out this section for more information on the SDK you are using.

Appendix

Glossary

In this book we use a lot of Glean-specific terminology. In the glossary, we go through many of the terms used throughout this book and describe exactly what we mean when we use them.

Changelog

This section contains detailed notes about changes in Glean, per release.

This Week in Glean

“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.

Contribution Guidelines

This section contains detailed information on where and how to add new content to this book.

Contact

To contact the Glean team you can:

- Find us in the #glean channel on chat.mozilla.org.

- To report issues or request changes, file a bug in Bugzilla in Data Platform & Tools :: Glean: SDK.

- Send an email to glean-team@mozilla.com.

License

The Glean SDKs' source code is subject to the terms of the Mozilla Public License v2.0. You can obtain a copy of the MPL at https://mozilla.org/MPL/2.0/.

Adding Glean to your project

This page describes the steps for adding Glean to a project. This does not include the steps for adding new metrics or pings to an existing Glean integration. If that is what you are looking for, refer to the Adding new metrics or the Adding new pings guide.

Glean integration checklist

The Glean integration checklist can help to ensure your Glean SDK-using product is meeting all of the recommended guidelines.

Products (applications or libraries) using a Glean SDK to collect telemetry must:

-

Integrate the Glean SDK into the build system. Since the Glean SDK does some code generation for your metrics at build time, this requires a few more steps than just adding a library.

-

Go through data review process for all newly collected data.

-

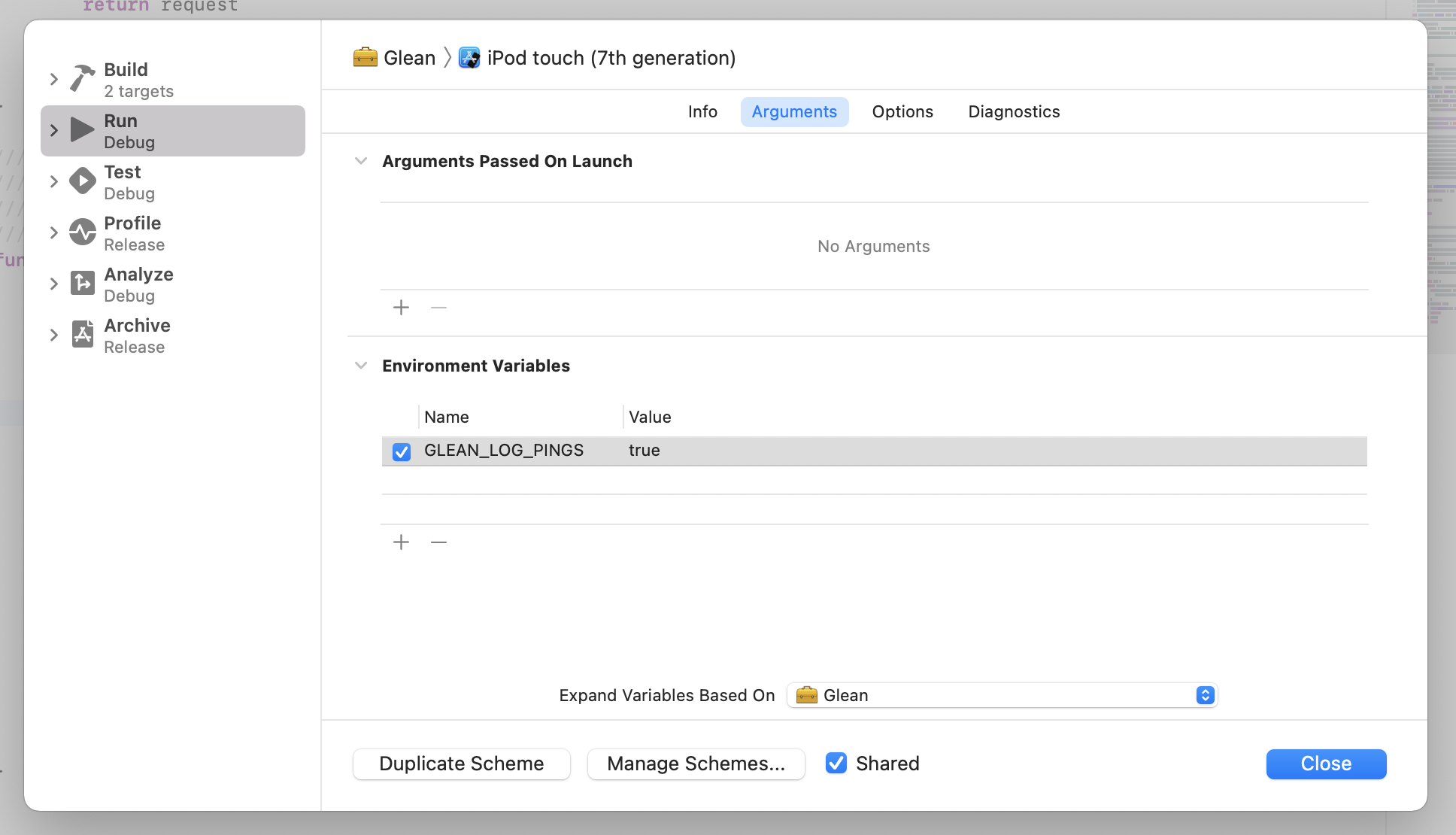

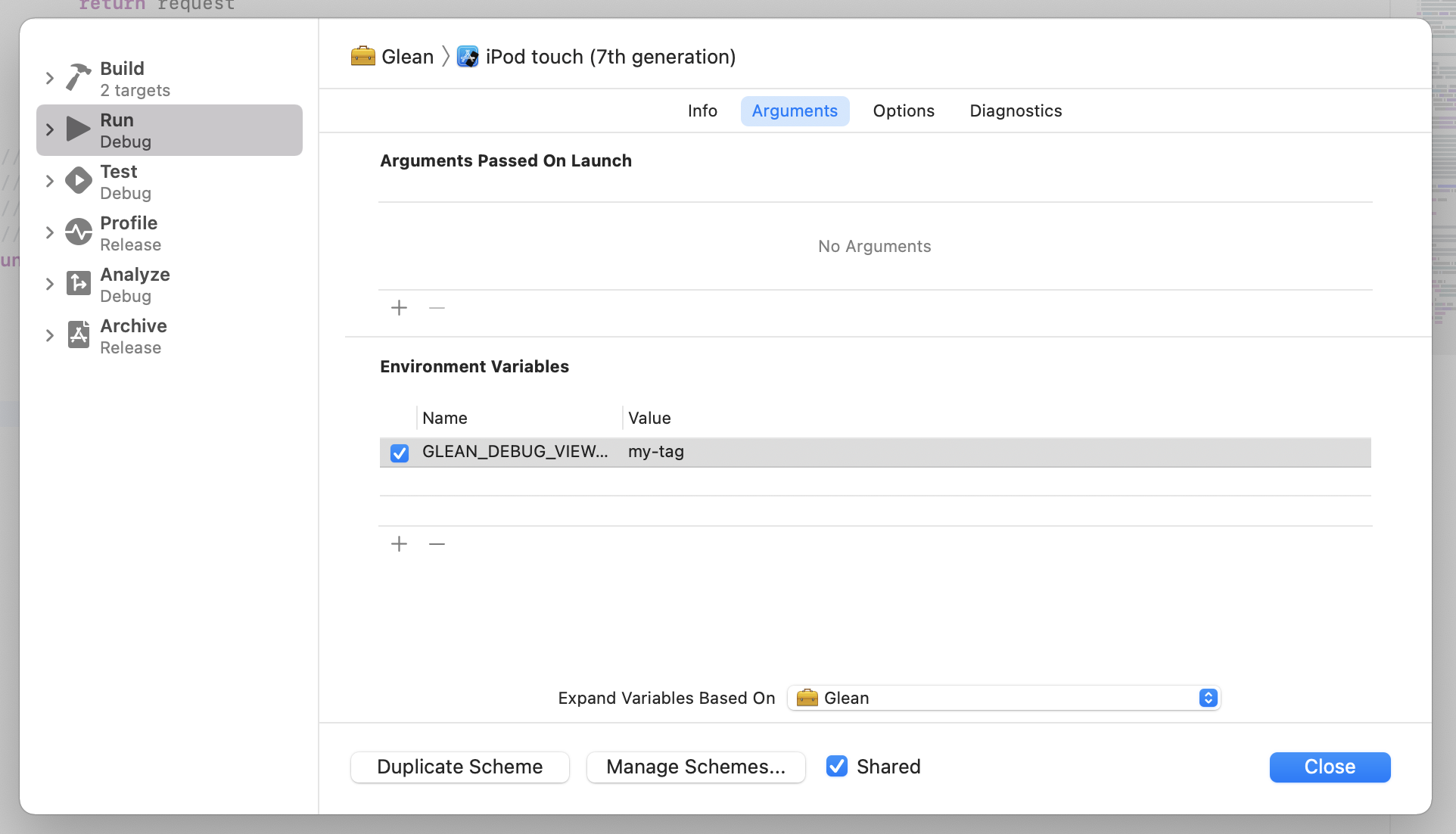

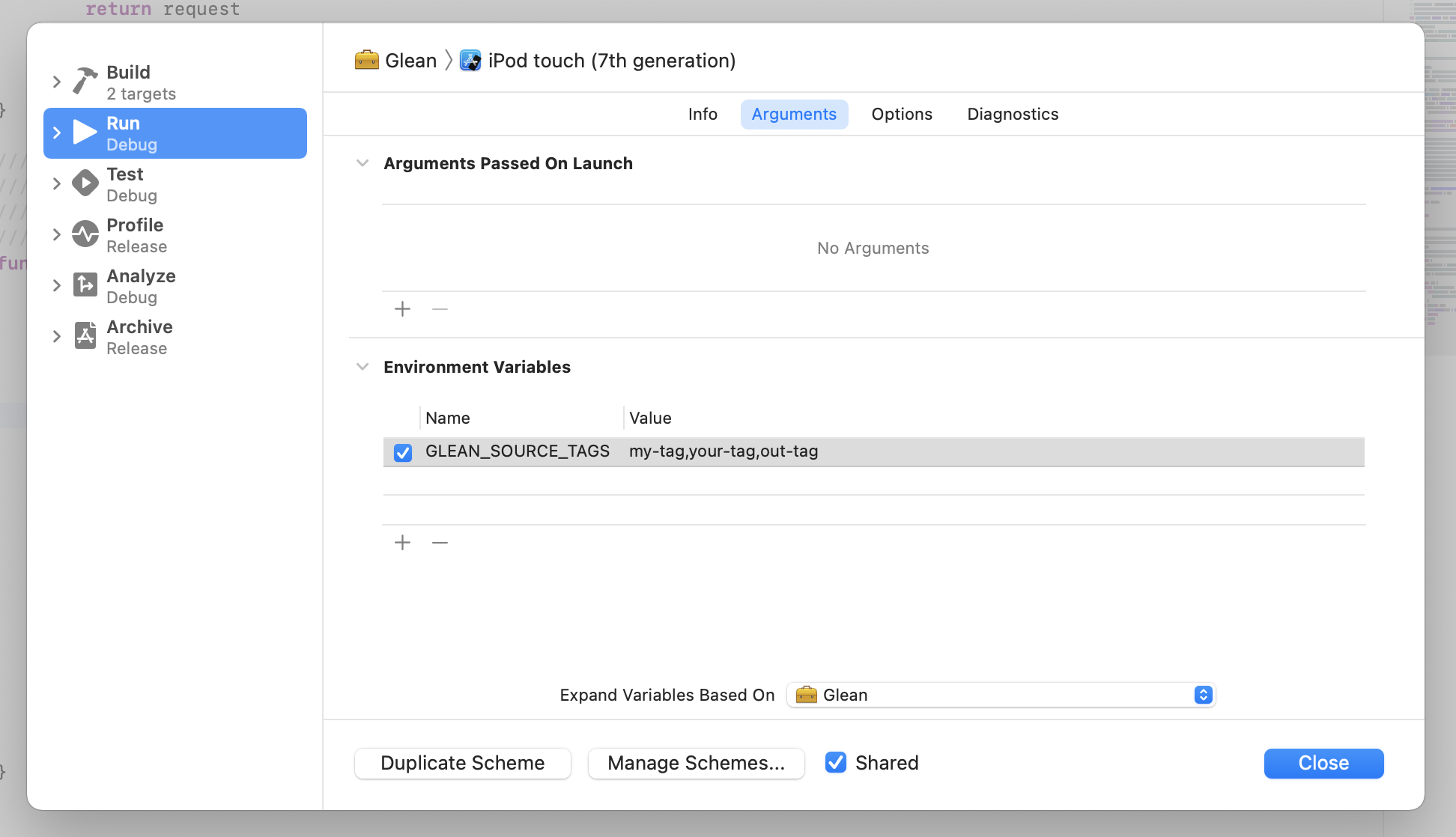

Ensure that telemetry coming from automated testing or continuous integration is either not sent to the telemetry server or tagged with the

automationtag using thesourceTagfeature. -

At least one week before releasing your product, enable your product's application id and metrics to be ingested by the data platform (and, as a consequence, indexed by the Glean Dictionary).

Important consideration for libraries: For libraries that are adding Glean, you will need to indicate which applications use the library as a dependency so that the library metrics get correctly indexed and added to the products that consume the library. If the library is added to a new product later, then it is necessary to file a new bug to add it as a dependency to that product in order for the library metrics to be collected along with the data from the new product.
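For the automation requirement in the checklist, one common approach is to decide at startup whether the process is running in CI. The following is a minimal Python sketch of that decision only, assuming your CI system sets the environment variable CI to true (a widespread convention followed by GitHub Actions and GitLab CI, among others). The helper name source_tags_for is hypothetical, and how the resulting tag is handed to the sourceTag feature differs per SDK and is not shown.

```python
import os
from typing import List, Mapping, Optional


def source_tags_for(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the source tags to apply for this run (hypothetical helper).

    Assumes CI systems set CI=true (or CI=1); adjust the check for your
    own infrastructure.
    """
    if env is None:
        env = os.environ
    if env.get("CI", "").strip().lower() in ("true", "1"):
        return ["automation"]
    return []
```

The same check can drive the checklist's other option, not sending telemetry from automation at all.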

Additionally, applications (but not libraries) must:

- Request a data review to add Glean to your application (since it can send data out of the box).

- Initialize Glean as early as possible at application startup.

- Provide a way for users to turn data collection off (e.g. providing settings to control Glean.setCollectionEnabled()). The exact method used is application-specific.
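One way to meet the last requirement is a settings entry whose value is persisted and re-applied. Below is a minimal Python sketch under stated assumptions: the preference is stored as a small JSON file, collection defaults to enabled, and apply_toggle is a hypothetical hook standing in for your SDK's collection toggle (Glean.setCollectionEnabled() in the checklist above). The class name is hypothetical too.

```python
import json
from pathlib import Path
from typing import Callable


class CollectionPreference:
    """Persist the user's data-collection choice and forward it to the SDK.

    apply_toggle is a hypothetical hook; in a real application it would call
    the Glean SDK's collection toggle for your platform.
    """

    def __init__(self, path: Path, apply_toggle: Callable[[bool], None],
                 default: bool = True) -> None:
        self._path = path
        self._apply = apply_toggle
        self._default = default

    def load(self) -> bool:
        """Read the stored choice, falling back to the default if unset."""
        try:
            return bool(json.loads(self._path.read_text())["enabled"])
        except (FileNotFoundError, KeyError, TypeError, ValueError):
            return self._default

    def set_enabled(self, enabled: bool) -> None:
        """Called from the settings UI: store the choice, then apply it."""
        self._path.write_text(json.dumps({"enabled": enabled}))
        self._apply(enabled)
```

At startup, read load() before initializing Glean so the stored choice can be applied from the start; call set_enabled() from the settings screen.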

Looking for an integration guide?

Step-by-step tutorials for each supported language/platform can be found in the specific integration guides.

Adding Glean to your Kotlin project

This page provides a step-by-step guide on how to integrate the Glean library into a Kotlin project.

Nevertheless, this is just one of the required steps for integrating Glean successfully into a project. Check out the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

Currently, this SDK only supports the Android platform.

Setting up the dependency

The Glean Kotlin SDK is published on maven.mozilla.org.

To use it, you need to add the following to your project's top-level build file, in the allprojects block (see e.g. Glean SDK's own build.gradle):

repositories {
    maven {
        url "https://maven.mozilla.org/maven2"
    }
}

Each module that uses the Glean Kotlin SDK needs to specify it in its build file, in the dependencies block.

Add this to your Gradle configuration:

implementation "org.mozilla.telemetry:glean:{latest-version}"

Pick the correct version

The {latest-version} placeholder in the above line should be replaced with the latest version of the Glean SDK. For example, if version 63.0.0 is the latest version, then the include directive becomes:

implementation "org.mozilla.telemetry:glean:63.0.0"

Size impact on the application APK

The Glean Kotlin SDK ships binary libraries for all the supported platforms. Each library file measures about 600KB. If the final APK size of the consuming project is a concern, please enable ABI splits.
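To see why ABI splits help, here is the rough arithmetic as a small Python sketch. It assumes four Android ABIs (arm64-v8a, armeabi-v7a, x86 and x86_64) and the approximate 600KB per library quoted above; the real ABI list and sizes depend on the Glean release you use.

```python
ABIS = ["arm64-v8a", "armeabi-v7a", "x86", "x86_64"]  # assumed ABI list


def glean_native_kb(num_abis: int, per_lib_kb: int = 600, abi_splits: bool = False) -> int:
    """Approximate native-library payload Glean adds to a single APK."""
    # Without splits, one APK bundles a library per ABI; with ABI splits,
    # each per-ABI APK carries only the library for its own ABI.
    return per_lib_kb if abi_splits else num_abis * per_lib_kb


print(glean_native_kb(len(ABIS)))                   # 2400
print(glean_native_kb(len(ABIS), abi_splits=True))  # 600
```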

Dependency for local testing

Due to its use of a native library you will need additional setup to allow local testing.

Add the following lines to your dependencies block:

testImplementation "org.mozilla.telemetry:glean-forUnitTests:${project.ext.glean_version}"

Note: Always use org.mozilla.telemetry:glean-forUnitTests.

This package is standalone and its version will be exported from the main Glean package automatically.

Setting up metrics and pings code generation

In order for the Glean Kotlin SDK to generate an API for your metrics, two Gradle plugins must be included in your build:

- The Glean Gradle plugin

- JetBrains' Python envs plugin

The Glean Gradle plugin is distributed through Mozilla's Maven, so we need to tell your build where to look for it by adding the following to the top of your build.gradle:

buildscript {
    repositories {
        maven {
            url "https://maven.mozilla.org/maven2"
        }
    }

    dependencies {
        classpath "org.mozilla.telemetry:glean-gradle-plugin:{latest-version}"
    }
}

Important

As above, the {latest-version} placeholder in the above line should be replaced with the version number of the Glean SDK used in your project.

The JetBrains Python plugin is distributed in the Gradle plugin repository, so it can be included with:

plugins {
    id "com.jetbrains.python.envs" version "0.0.26"
}

Right before the end of the same file, we need to apply the Glean Gradle plugin.

Set any additional parameters to control the behavior of the Glean Gradle plugin before calling apply plugin.

// Optionally, set any parameters to send to the plugin.
ext.gleanGenerateMarkdownDocs = true
apply plugin: "org.mozilla.telemetry.glean-gradle-plugin"

Rosetta 2 required on Apple Silicon

On Apple Silicon machines (M1/M2/M3 MacBooks and iMacs) Rosetta 2 is required for the bundled Python. See the Apple documentation about Rosetta 2 and Bug 1775420 for details.

You can install it with:

softwareupdate --install-rosetta

Offline builds

The Glean Gradle plugin has limited support for offline builds of applications that use the Glean SDK.

Adding Glean to your Swift project

This page provides a step-by-step guide on how to integrate the Glean library into a Swift project.

Nevertheless, this is just one of the required steps for integrating Glean successfully into a project. Check out the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

Currently, this SDK only supports the iOS platform.

Requirements

- Python >= 3.9.

Setting up the dependency

The Glean Swift SDK can be consumed as a Swift Package. In your Xcode project add a new package dependency:

https://github.com/mozilla/glean-swift

Use the dependency rule "Up to Next Major Version". Xcode will automatically fetch the latest version for you.

The Glean library will be automatically available to your code when you import it:

import Glean

Setting up metrics and pings code generation

The metrics.yaml file is parsed at build time and Swift code is generated.

Add a new metrics.yaml file to your Xcode project.

Follow these steps to automatically run the parser at build time:

- Download the sdk_generator.sh script from the Glean repository:

https://raw.githubusercontent.com/mozilla/glean/{latest-release}/glean-core/ios/sdk_generator.sh

Pick the correct version

As above, the {latest-release} placeholder should be replaced with the version number of the Glean Swift SDK release used in this project.

- Add the sdk_generator.sh file to your Xcode project.

- On your application targets' Build Phases settings tab, click the + icon and choose New Run Script Phase. Create a Run Script in which you specify your shell (ex: /bin/sh), and add the following contents to the script area below the shell:

bash $PWD/sdk_generator.sh

Set additional options to control the behavior of the script.

- Add the path to your metrics.yaml and (optionally) pings.yaml and tags.yaml under "Input files":

$(SRCROOT)/{project-name}/metrics.yaml
$(SRCROOT)/{project-name}/pings.yaml
$(SRCROOT)/{project-name}/tags.yaml

- Add the path to the generated code file to the "Output Files":

$(SRCROOT)/{project-name}/Generated/Metrics.swift

- If you are using Git, add the following lines to your .gitignore file:

.venv/
{project-name}/Generated

This will ignore files that are generated at build time by the sdk_generator.sh script. They don't need to be kept in version control, as they can be re-generated from your metrics.yaml and pings.yaml files.
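The download step at the top of this list can also be scripted. Here is a minimal Python sketch, assuming you pass the same release reference that replaces {latest-release} in the URL and that you want the script saved in the current directory; the helper names are hypothetical.

```python
import urllib.request
from pathlib import Path

# Same URL as in the list above, with the release as a template slot.
URL_TEMPLATE = (
    "https://raw.githubusercontent.com/mozilla/glean/"
    "{release}/glean-core/ios/sdk_generator.sh"
)


def sdk_generator_url(release: str) -> str:
    """Fill the {latest-release} slot of the download URL."""
    return URL_TEMPLATE.format(release=release)


def download_sdk_generator(release: str, dest: Path = Path("sdk_generator.sh")) -> Path:
    """Fetch sdk_generator.sh for the given release into dest."""
    # The Run Script phase invokes it via `bash`, so no executable bit is needed.
    urllib.request.urlretrieve(sdk_generator_url(release), str(dest))
    return dest
```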

Glean and embedded extensions

Metric collection is a no-op in application extensions and Glean will not run. Since extensions run in a separate sandbox and process from the application, Glean would run in an extension as if it were a completely separate application with different client ids and storage. This complicates things because Glean doesn’t know or care about other processes. Because of this, Glean is purposefully prevented from running in an application extension and if metrics need to be collected from extensions, it's up to the integrating application to pass the information to the base application to record in Glean.

Adding Glean to your Python project

This page provides a step-by-step guide on how to integrate the Glean library into a Python project.

Nevertheless, this is just one of the required steps for integrating Glean successfully into a project. Check out the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

Setting up the dependency

We recommend using a virtual environment for your work to isolate the dependencies for your project. There are many popular abstractions on top of virtual environments in the Python ecosystem which can help manage your project dependencies.

The Glean Python SDK currently has prebuilt wheels on PyPI for Windows (i686 and x86_64), Linux/glibc (x86_64) and macOS (x86_64).

For other platforms, including BSD or Linux distributions that don't use glibc, such as Alpine Linux, the glean_sdk package will be built from source on your machine.

This requires that Cargo and Rust are already installed.

The easiest way to do this is through rustup.

Once you have your virtual environment set up and activated, you can install the Glean Python SDK into it using:

$ python -m pip install glean_sdk

Important

Installing Python wheels is still a rapidly evolving feature of the Python package ecosystem. If the above command fails, try upgrading pip:

python -m pip install --upgrade pip
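The virtual-environment setup described above can be scripted with the standard library. This is a minimal sketch, assuming the usual POSIX or Windows layout for the environment's interpreter and network access to PyPI; the helper names are hypothetical. It upgrades pip before installing, following the note above.

```python
import os
import subprocess
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Path of the interpreter inside a virtual environment."""
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def bootstrap(env_dir: Path) -> None:
    """Create a virtual environment and install the Glean Python SDK into it."""
    venv.create(env_dir, with_pip=True)
    py = str(venv_python(env_dir))
    # A current pip is more likely to pick up the prebuilt wheels.
    subprocess.run([py, "-m", "pip", "install", "--upgrade", "pip"], check=True)
    subprocess.run([py, "-m", "pip", "install", "glean_sdk"], check=True)
```

Run bootstrap(Path(".venv")) once, then activate the environment as usual.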

Important

The Glean Python SDK makes extensive use of type annotations to catch type-related errors at build time. We highly recommend adding mypy to your continuous integration workflow to catch errors related to type mismatches early.

Consuming YAML registry files

For Python, the metrics.yaml file must be available and loaded at runtime.

If your project is a script (i.e. just Python files in a directory), you can load the metrics.yaml using:

from glean import load_metrics

metrics = load_metrics("metrics.yaml")

# Use a metric on the returned object
metrics.your_category.your_metric.set("value")

If your project is a distributable Python package, you need to include the metrics.yaml file using one of the myriad ways to include data in a Python package and then use pkg_resources.resource_filename() to get the filename at runtime.

from glean import load_metrics
from pkg_resources import resource_filename

metrics = load_metrics(resource_filename(__name__, "metrics.yaml"))

# Use a metric on the returned object
metrics.your_category.your_metric.set("value")
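pkg_resources comes from setuptools and is deprecated in recent setuptools releases. If you prefer the standard library, importlib.resources (Python 3.9+) can provide a real file path to the packaged metrics.yaml. This is a minimal sketch, assuming metrics.yaml ships inside your package and that load_metrics reads the file before returning (the temporary copy that as_file() may create is removed when the with block exits); registry_path is a hypothetical helper name.

```python
from contextlib import contextmanager
from importlib.resources import as_file, files
from pathlib import Path
from typing import Iterator


@contextmanager
def registry_path(package: str, name: str = "metrics.yaml") -> Iterator[Path]:
    """Yield a real filesystem path to a data file shipped inside `package`.

    as_file() extracts the resource to a temporary file when the package is
    not installed as plain files (for example, inside a zip archive).
    """
    with as_file(files(package) / name) as path:
        yield path


# Hypothetical usage inside your own package (requires glean_sdk):
# from glean import load_metrics
# with registry_path(__package__) as path:
#     metrics = load_metrics(str(path))
```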

Automation steps

Documentation

The documentation for your application or library's metrics and pings is written in metrics.yaml and pings.yaml.

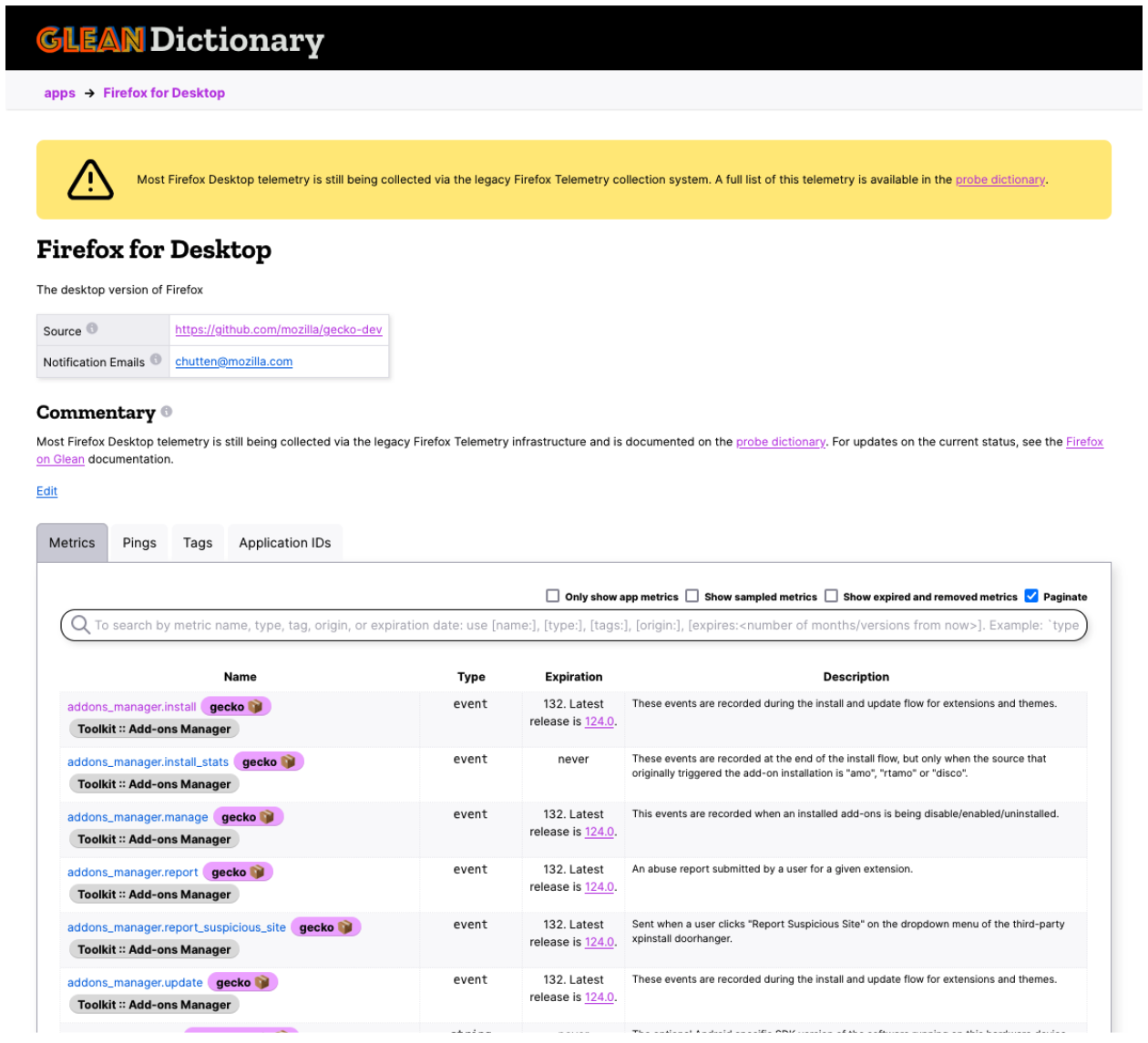

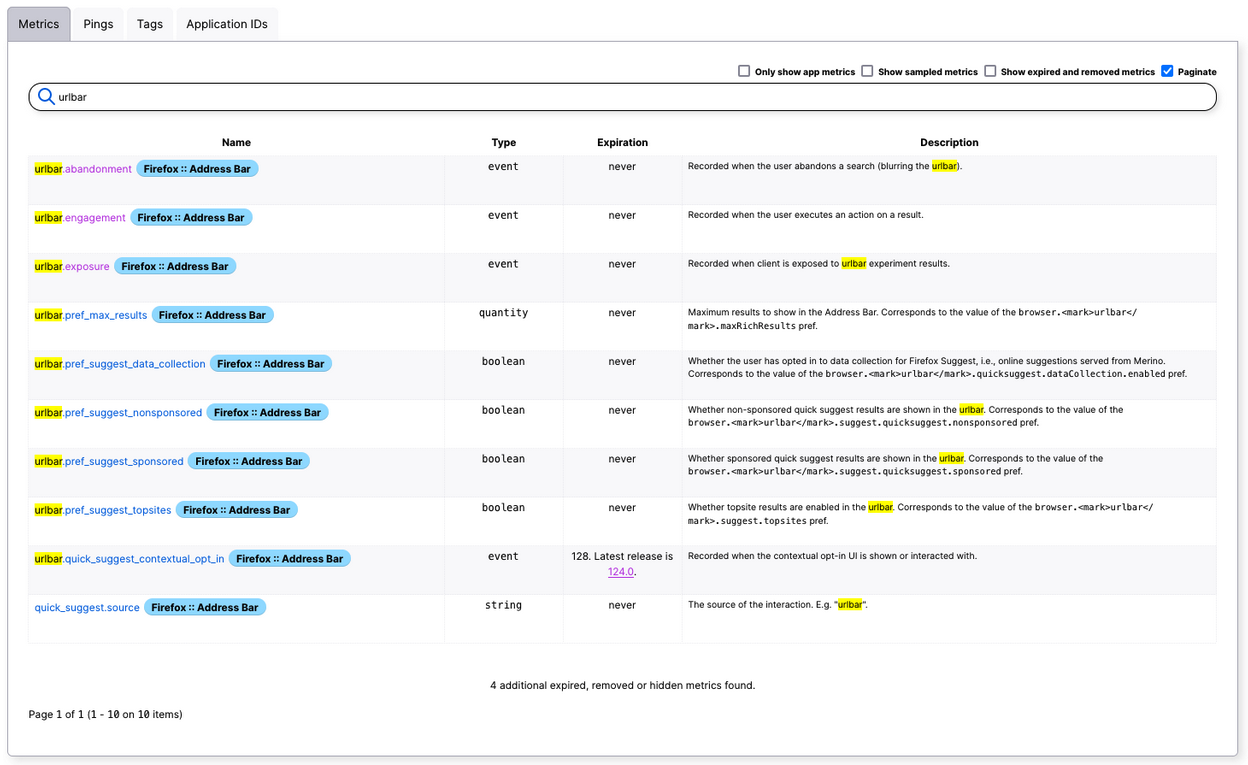

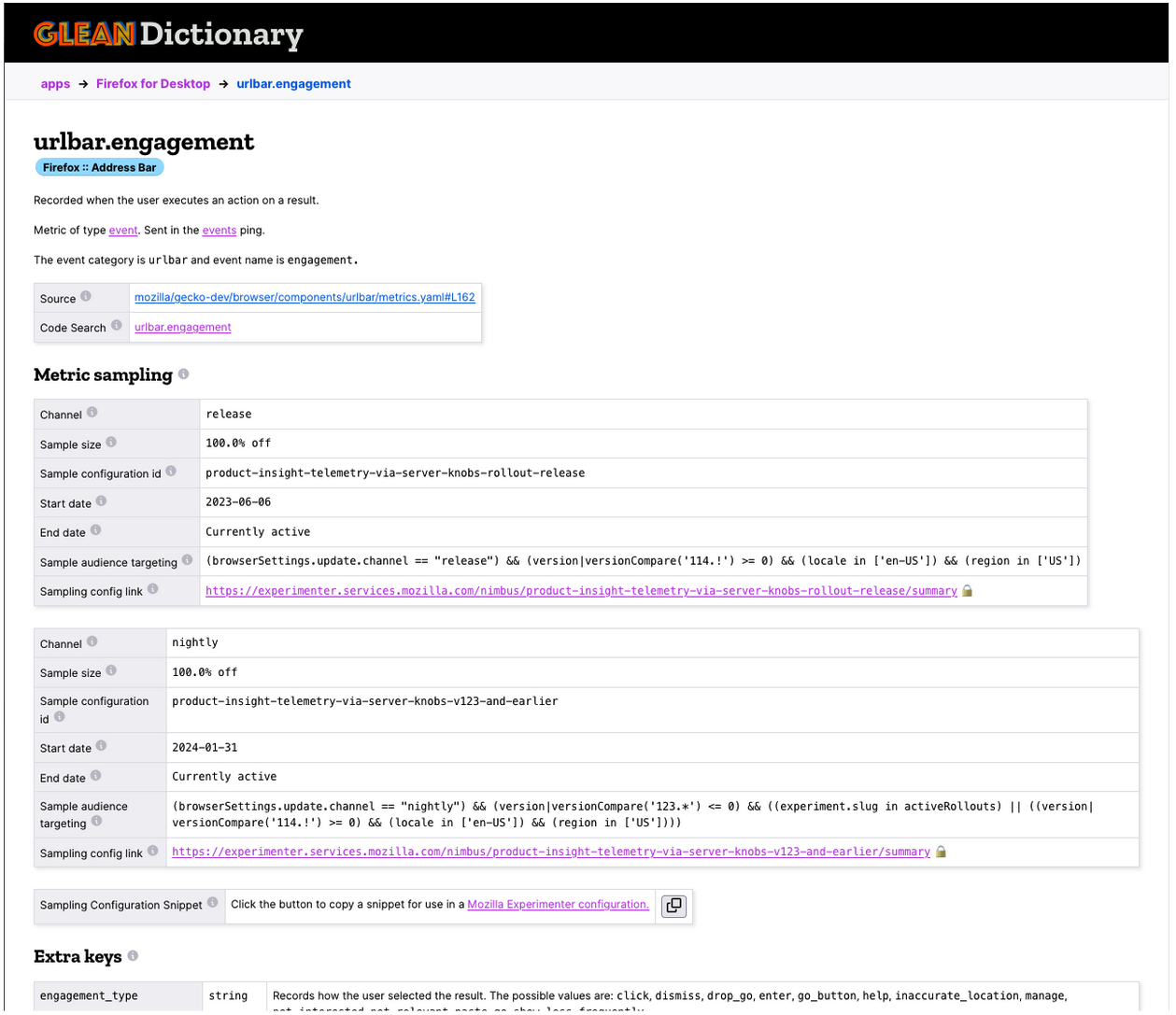

For Mozilla projects, this documentation is automatically published on the Glean Dictionary. For non-Mozilla products, it is recommended to generate Markdown-based documentation of your metrics and pings into the repository. For most languages and platforms, this transformation can be done automatically as part of the build. However, for some SDKs, the integration to automatically generate docs is an additional step.

The Glean Python SDK provides a commandline tool for automatically generating markdown documentation from your metrics.yaml and pings.yaml files. To perform that translation, run glean_parser's translate command:

python3 -m glean_parser translate -f markdown -o docs metrics.yaml pings.yaml

To get more help about the commandline options:

python3 -m glean_parser translate --help

We recommend integrating this step into your project's documentation build. The details of that integration are left to you, since they depend on the documentation tool being used and how your project is set up.

Metrics linting

Glean includes a "linter" for metrics.yaml and pings.yaml files called the glinter that catches a number of common mistakes in these files.

As part of your continuous integration, you should run the following on your metrics.yaml and pings.yaml files:

python3 -m glean_parser glinter metrics.yaml pings.yaml
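Both commands can be wrapped in a small CI helper. This is a minimal Python sketch that only assembles and runs the exact command lines shown above; it assumes glean_parser is installed in the interpreter running the script and that glinter reports problems through a non-zero exit status. The function names are hypothetical.

```python
import subprocess
import sys
from typing import List, Sequence


def glean_parser_cmd(subcommand: str, registries: Sequence[str], *options: str) -> List[str]:
    """Build a `python -m glean_parser <subcommand> [options] <files>` command."""
    return [sys.executable, "-m", "glean_parser", subcommand, *options, *registries]


def check_and_document(registries: Sequence[str], docs_dir: str = "docs") -> None:
    """Lint the registry files, then regenerate the Markdown docs.

    check=True makes subprocess.run raise CalledProcessError on a non-zero
    exit status, which fails the CI step.
    """
    subprocess.run(glean_parser_cmd("glinter", registries), check=True)
    subprocess.run(
        glean_parser_cmd("translate", registries, "-f", "markdown", "-o", docs_dir),
        check=True,
    )
```

A CI script would then call check_and_document(["metrics.yaml", "pings.yaml"]).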

Parallelism

Most Glean SDKs use a separate worker thread to do most of their work, including any I/O. This thread is fully managed by the SDK as an implementation detail. Therefore, users should feel free to use the Glean SDKs wherever they are most convenient, without worrying about the performance impact of updating metrics and sending pings.

Since the Glean SDKs perform disk and networking I/O, they try to do as much of their work as possible on separate threads and processes. Since there are complex trade-offs and corner cases to support Python parallelism, it is hard to design a one-size-fits-all approach.

Default behavior

When using the Python SDK, most of Glean's work is done on a separate thread, managed by the SDK itself. The SDK releases the Global Interpreter Lock (GIL) for most of its operations; therefore, your application's threads should not be in contention with Glean's worker thread.

The Glean Python SDK installs an atexit handler so that its worker thread can cleanly finish when your application exits.

This handler will wait up to 30 seconds for any pending work to complete.

By default, ping uploading is performed in a separate child process. This process will continue to upload any pending pings even after the main process shuts down. This is important for commandline tools where you want to return control to the shell as soon as possible and not be delayed by network connectivity.
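The worker-thread-plus-atexit pattern described above can be illustrated with a minimal standard-library sketch. It is not the Glean Python SDK's implementation; the class name BackgroundWorker is hypothetical, and the 30-second default simply mirrors the timeout quoted above.

```python
import atexit
import queue
import threading
import traceback
from typing import Callable, Optional

Task = Callable[[], None]


class BackgroundWorker:
    """Run submitted tasks, in order, on a single daemon thread."""

    def __init__(self, shutdown_timeout: float = 30.0) -> None:
        self._tasks: "queue.Queue[Optional[Task]]" = queue.Queue()
        self._timeout = shutdown_timeout
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()
        # At interpreter exit, give queued work a bounded time to finish.
        atexit.register(self.shutdown)

    def _run(self) -> None:
        while True:
            task = self._tasks.get()
            if task is None:  # sentinel: stop after all earlier tasks ran
                return
            try:
                task()
            except Exception:
                # Keep the worker alive if one task fails.
                traceback.print_exc()

    def submit(self, task: Task) -> None:
        self._tasks.put(task)

    def shutdown(self) -> bool:
        """Wait up to the timeout for queued work; True if it all finished."""
        self._tasks.put(None)
        self._thread.join(self._timeout)
        return not self._thread.is_alive()
```

Daemon threads keep running while atexit handlers execute, which is why the handler can still drain the queue; the timeout keeps a stalled task from blocking exit indefinitely.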

Cases where subprocesses aren't possible

The default approach may not work with applications built using PyInstaller or similar tools which bundle an application together with a Python interpreter making it impossible to spawn new subprocesses of that interpreter. For these cases, there is an option to ensure that ping uploading occurs in the main process. To do this, set the allow_multiprocessing parameter on the glean.Configuration object to False.
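If the same code runs both from source and from a PyInstaller bundle, the flag can be derived at runtime. This is a minimal sketch, assuming the freezing tool sets sys.frozen (PyInstaller does; other tools may differ); the helper name is hypothetical, and the Configuration wiring is left as a comment because it requires glean_sdk.

```python
import sys


def allow_multiprocessing() -> bool:
    """False when running from a frozen bundle that cannot spawn the interpreter."""
    # PyInstaller (and some similar freezers) set sys.frozen on the bundled
    # interpreter; a regular CPython interpreter does not define it.
    return not getattr(sys, "frozen", False)


# Hypothetical wiring, following the text above (requires glean_sdk):
# from glean import Configuration
# configuration = Configuration(allow_multiprocessing=allow_multiprocessing())
```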

Using the multiprocessing module

Additionally, the default approach does not work if your application uses the multiprocessing module for parallelism.

The Glean Python SDK cannot wait to finish its work in a multiprocessing subprocess, since atexit handlers are not supported in that context.

Therefore, if the Glean Python SDK detects that it is running in a multiprocessing subprocess, all of its work that would normally run on a worker thread will run on the main thread.

In practice, this should not be a performance issue: since the work is already in a subprocess, it will not block the main process of your application.
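The fallback described above, running work inline when inside a multiprocessing child, can be sketched with the standard library. This illustrates the behavior only; it is not how the Glean Python SDK detects subprocesses, and both function names are hypothetical.

```python
import multiprocessing
import threading
from typing import Callable


def in_multiprocessing_child() -> bool:
    """True when running in a process started by the multiprocessing module."""
    # parent_process() (Python 3.8+) returns None in the main process.
    return multiprocessing.parent_process() is not None


def dispatch(task: Callable[[], None]) -> None:
    """Run task on a worker thread, or inline inside a multiprocessing child."""
    if in_multiprocessing_child():
        # No atexit handler will wait for a worker thread here, so do the
        # work synchronously before returning.
        task()
    else:
        threading.Thread(target=task, daemon=True).start()
```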

Adding Glean to your Rust project

This page provides a step-by-step guide on how to integrate the Glean library into a Rust project.

Nevertheless, this is just one of the required steps for integrating Glean successfully into a project. Check out the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

Setting up the dependency

The Glean Rust SDK is published on crates.io.

Add it to your dependencies in Cargo.toml:

[dependencies]
glean = "50.0.0"

Setting up metrics and pings code generation

glean-build is in beta.

The glean-build crate is new and currently in beta. It can be used as a git dependency. Please file a bug if it does not work for you.

At build time you need to generate the metrics and ping API from your definition files.

Add the glean-build crate as a build dependency in your Cargo.toml:

[build-dependencies]
glean-build = { git = "https://github.com/mozilla/glean" }

Then add a build.rs file next to your Cargo.toml and call the builder:

use glean_build::Builder;

fn main() {
    Builder::default()
        .file("metrics.yaml")
        .file("pings.yaml")
        .generate()
        .expect("Error generating Glean Rust bindings");
}

Ensure your metrics.yaml and pings.yaml files are placed next to your Cargo.toml or adjust the path in the code above.

You can also leave out any of the files.

Include the generated code

glean-build will generate a glean_metrics.rs file that needs to be included in your source code.

To do so add the following lines of code in your src/lib.rs file:

mod metrics {
    include!(concat!(env!("OUT_DIR"), "/glean_metrics.rs"));
}

Alternatively create src/metrics.rs (or a different name) with only the include line:

include!(concat!(env!("OUT_DIR"), "/glean_metrics.rs"));

Then add mod metrics; to your src/lib.rs file.

Use the metrics

In your code you can then access generated metrics nested within their category under the metrics module (or your chosen name):

metrics::your_category::metric_name.set(true);

See the metric API reference for details.

Adding Glean to your JavaScript project

This page provides a step-by-step guide on how to integrate the Glean JavaScript SDK into a JavaScript project.

Nevertheless, this is just one of the required steps for integrating Glean successfully into a project. Check out the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

The Glean JavaScript SDK allows integration with three distinct JavaScript environments: websites, web extensions and Node.js.

Requirements

- Node.js >= 12.20.0

- npm >= 7.0.0

- Webpack >= 5.34.0

- Python >= 3.9: the glean command requires Python to download glean_parser, which is a Python library.

Browser extension specific requirements

- webextension-polyfill >= 0.8.0: Glean.js assumes a Promise-based browser API. Firefox provides such an API by default; other browsers may require using a polyfill library such as webextension-polyfill when using Glean in browser extensions.

- Host permissions to the telemetry server: only necessary if the defined server endpoint denies cross-origin requests; not necessary if using the default https://incoming.telemetry.mozilla.org.

- "storage" API permissions.

Browser extension example configuration

The manifest.json file of the sample browser extension available in the mozilla/glean.js repository provides an example of how to define the above permissions, as well as how and where to load the webextension-polyfill script.

Setting up the dependency

The Glean JavaScript SDK is distributed as an npm package, @mozilla/glean.

This package has different entry points to access the different SDKs:

- @mozilla/glean/web gives access to the websites SDK

- @mozilla/glean/webext gives access to the web extension SDK

- @mozilla/glean/node gives access to the Node.js SDK

The Node.js SDK does not have persistent storage yet. This means Glean does not persist state throughout application runs. For updates on the implementation of this feature in Node.js, follow Bug 1728807.

Install Glean in your JavaScript project, by running:

npm install @mozilla/glean

Then import Glean into your project:

// Importing the Glean JavaScript SDK for use in **web extensions**
//
// esm
import Glean from "@mozilla/glean/webext";
// cjs
const { default: Glean } = require("@mozilla/glean/webext");

// Importing the Glean JavaScript SDK for use in **websites**
//
// esm
import Glean from "@mozilla/glean/web";
// cjs
const { default: Glean } = require("@mozilla/glean/web");

// Importing the Glean JavaScript SDK for use in **Node.js**
//
// esm
import Glean from "@mozilla/glean/node";
// cjs
const { default: Glean } = require("@mozilla/glean/node");

Browser extension security considerations

In case of a privilege-escalation attack into the context of the web extension using Glean, the malicious scripts would be able to call Glean APIs or use the browser.storage.local APIs directly. That would be a risk to Glean data, but not caused by Glean. Glean-using extensions should be careful not to relax the default Content-Security-Policy that generally prevents these attacks.

Common import errors

"Cannot find module '@mozilla/glean'"

Glean.js does not have a main package entry point.

Instead it relies on a series of entry points depending on the platform you are targeting.

In order to import Glean use:

import Glean from '@mozilla/glean/{your-platform}'

"Module not found: Error: Can't resolve '@mozilla/glean/webext' in '...'"

Glean.js relies on Node.js' subpath exports feature to define multiple package entry points.

Please make sure that you are using a supported Node.js runtime and also make sure the tools you are using support this Node.js feature.

Setting up metrics and pings code generation

In JavaScript, the metrics and pings definitions must be parsed at build time.

The @mozilla/glean package exposes glean_parser through the glean script.

To parse your YAML registry files using this script, define a new script in your package.json file:

{
  "scripts": {
    "build:glean": "glean translate path/to/metrics.yaml path/to/pings.yaml -f javascript -o path/to/generated"
  }
}

If you are building a TypeScript project, use -f typescript instead of -f javascript in the same script.

Then run this script by calling:

npm run build:glean

Automation steps

Documentation

Prefer using the Glean Dictionary

While it is still possible to generate Markdown documentation, if working on a public Mozilla project, rely on the Glean Dictionary for documentation. Your product will be automatically indexed by the Glean Dictionary after it gets enabled in the pipeline.

One of the commands provided by glean_parser allows users to generate Markdown documentation based on the contents of their YAML registry files.

To perform that translation, use the translate command with a different output format, as shown below.

In your package.json, define the following script:

{
  "scripts": {
    "docs:glean": "glean translate path/to/metrics.yaml path/to/pings.yaml -f markdown -o path/to/docs"
  }
}

Then run this script by calling:

npm run docs:glean

YAML registry files linting

Glean includes a "linter" for the YAML registry files called the glinter that catches a number of common mistakes in these files.

To run the linter use the glinter command.

In your package.json, define the following script:

{
  "scripts": {
    "lint:glean": "glean glinter path/to/metrics.yaml path/to/pings.yaml"
  }
}

Then run this script by calling:

npm run lint:glean

Adding Glean to your Qt/QML project

This page provides a step-by-step guide on how to integrate the Glean.js library into a Qt/QML project.

Nevertheless, this is just one of the required steps for integrating Glean successfully into a project. Check out the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

Requirements

- Python >= 3.7

- Qt >= 5.15.2

Setting up the dependency

Glean.js' Qt/QML build is distributed as an asset with every Glean.js release. In order to download the latest version visit https://github.com/mozilla/glean.js/releases/latest.

Glean.js is a QML module, so extract the contents of the downloaded file wherever you keep your other modules. Make sure that the directory the module is placed in is part of the QML Import Path.

After doing that, import Glean like so:

import org.mozilla.Glean <version>

Picking the correct version

The <version> number is the version of the release you downloaded minus its patch version. For example, if you downloaded Glean.js version 0.15.0, your import statement will be:

import org.mozilla.Glean 0.15
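The "minus its patch version" rule is easy to get wrong by hand, so here it is as a tiny Python helper. This is a minimal sketch; the function name is hypothetical, and it accepts an optional leading v in case you copy the version from a release tag.

```python
def qml_import_line(release: str) -> str:
    """Build the QML import statement for a downloaded Glean.js release.

    The module version is the release version minus its patch component,
    e.g. "0.15.0" -> "import org.mozilla.Glean 0.15".
    """
    major, minor, _patch = release.lstrip("v").split(".", 2)
    return f"import org.mozilla.Glean {major}.{minor}"
```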

Consuming YAML registry files

Qt/QML projects need to set up metrics and pings code generation manually.

First install the glean_parser CLI tool.

pip install glean_parser

Make sure you have the correct glean_parser version!

Qt/QML support was added to glean_parser in version 3.5.0.

Then call glean_parser from the command line:

glean_parser translate path/to/metrics.yaml path/to/pings.yaml \
    -f javascript \
    -o path/to/generated/files \
    --option platform=qt \
    --option version=0.15

The translate command takes a list of YAML registry file paths and an output path, and parses the given YAML registry files into QML JavaScript files.

The generated folder will be a QML module. Make sure wherever the generated module is placed is also part of the QML Import Path.

Notice that when building for Qt/QML it is mandatory to give the translate command two extra options.

--option platform=qt

This option is what changes the output file from standard JavaScript to QML JavaScript.

--option version=<version>

The version passed to this option will be the version of the generated QML module.
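If your build is driven by Python, the command above can be assembled so that neither mandatory option is forgotten. This is a minimal sketch, assuming glean_parser is on your PATH; the function names are hypothetical, and module_version is whatever version you choose for the generated QML module.

```python
import subprocess
from typing import List, Sequence


def qt_translate_cmd(registries: Sequence[str], out_dir: str, module_version: str) -> List[str]:
    """Build the glean_parser command that emits a QML JavaScript module."""
    return [
        "glean_parser", "translate", *registries,
        "-f", "javascript",
        "-o", out_dir,
        # Both options are mandatory for Qt/QML output:
        "--option", "platform=qt",                # QML JavaScript output
        "--option", f"version={module_version}",  # generated module's version
    ]


def generate_qml_module(registries: Sequence[str], out_dir: str, module_version: str) -> None:
    # check=True raises CalledProcessError if glean_parser exits non-zero.
    subprocess.run(qt_translate_cmd(registries, out_dir, module_version), check=True)
```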

Automation steps

Documentation

Prefer using the Glean Dictionary

While it is still possible to generate Markdown documentation, if working on a public Mozilla project, rely on the Glean Dictionary for documentation. Your product will be automatically indexed by the Glean Dictionary after it gets enabled in the pipeline.

One of the commands provided by glean_parser allows users to generate Markdown documentation based on the contents of their YAML registry files. To perform that translation, use the translate command with a different output format, as shown below.

glean_parser translate path/to/metrics.yaml path/to/pings.yaml \
    -f markdown \
    -o path/to/docs

YAML registry files linting

glean_parser includes a "linter" for the YAML registry files called the glinter that catches a number of common mistakes in these files. To run the linter use the glinter command.

glean_parser glinter path/to/metrics.yaml path/to/pings.yaml

Debugging

By default, the Glean.js QML module uses a minified version of the Glean.js library. It may be useful to use the unminified version of the library in order to get proper line numbers and function names when debugging crashes.

The bundle provided contains the unminified version of the library.

In order to use it, open the glean.js file inside the included module and change the line:

.import "glean.lib.js" as Glean

to

.import "glean.dev.js" as Glean
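Switching the import by hand works; if you toggle it often, a small script can make the same one-line edit. This is a minimal sketch, assuming you pass the path to the glean.js file inside your extracted Glean module; use_unminified is a hypothetical name.

```python
from pathlib import Path

MINIFIED = '.import "glean.lib.js" as Glean'
UNMINIFIED = '.import "glean.dev.js" as Glean'


def use_unminified(glean_js: Path) -> bool:
    """Point the module's glean.js at the unminified library.

    Returns True if the file was changed, False if the minified import
    line was not found (for example, because it was already switched).
    """
    text = glean_js.read_text(encoding="utf-8")
    if MINIFIED not in text:
        return False
    glean_js.write_text(text.replace(MINIFIED, UNMINIFIED, 1), encoding="utf-8")
    return True
```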

Troubleshooting

submitPing may cause crashes when debugging iOS devices

The submitPing function hits a known bug in the Qt JavaScript interpreter.

This bug only reproduces on iOS devices; it does not happen in emulators. It also only happens when using the Qt debug library for iOS.

There is no way around this bug other than avoiding the Qt debug library for iOS altogether until it is fixed. Refer to the Qt debugging documentation on how to do that.

Adding Glean to your Server Application

Glean enables the collection of behavioral metrics through events in server environments. This method does not rely on the Glean SDK but utilizes the Glean parser to generate native code for logging events in a standard format compatible with the ingestion pipeline.

Differences from using the Glean SDK

This implementation of telemetry collection in server environments has some differences compared to using Glean SDK in client applications and Glean.js in the frontend of web applications. Primarily, in server environments the focus is exclusively on event-based metrics, diverging from the broader range of metric types supported by Glean. Additionally, there is no need to incorporate Glean SDK as a dependency in server applications. Instead, the Glean parser is used to generate native code for logging events.

When to use server-side collection

This method is intended for collecting user-level behavioral events in server environments. It is not suitable for collecting system-level metrics or performance data, which should be collected using cloud monitoring tools.

How to add Glean server side collection to your service

- Integrate glean_parser into your build system. Follow the instructions for other SDK-enabled platforms, e.g. JavaScript. Use a server outputter to generate logging code. glean_parser currently supports Go, JavaScript/TypeScript, Python, and Ruby.

- Define your metrics in metrics.yaml.

- Request a data review for the collected data.

- Add your product to probe-scraper.

How to add a new event to your server side collection

Follow the standard Glean SDK guide for adding metrics to metrics.yaml file.

Technical details - ingestion

For more technical details on how ingestion works, see the Confluence page.

Enabling data to be ingested by the data platform

This page provides a step-by-step guide on how to enable data from your product to be ingested by the data platform.

This is just one of the required steps for integrating Glean successfully into a product. Check the full Glean integration checklist for a comprehensive list of all the steps involved in doing so.

Requirements

- GitHub Workflows

Add your product to probe scraper

At least one week before releasing your product, file a data engineering bug to enable your product's application id.

This will result in your product being added to probe scraper's repositories.yaml.

Validate and publish metrics

After your product has been enabled, you must submit commits to probe scraper to validate and publish metrics.

Metrics will only be published from branches defined in probe scraper's repositories.yaml, or the Git default branch if not explicitly configured.

This should happen on every CI run to the specified branches.

Nightly jobs will then automatically add published metrics to the Glean Dictionary and other data platform tools.

Enable the GitHub Workflow by creating a new file .github/workflows/glean-probe-scraper.yml with the following content:

---
name: Glean probe-scraper
on: [push, pull_request]
jobs:
  glean-probe-scraper:
    uses: mozilla/probe-scraper/.github/workflows/glean.yaml@main

Add your library to probe scraper

At least one week before releasing your product, file a data engineering bug to add your library to probe scraper so that it can be scraped for metrics as a dependency of another product.

This will result in your library being added to probe scraper's repositories.yaml.

Integrating Glean for product managers

This chapter provides guidance for planning the work involved in integrating Glean into your product, for internal Mozilla customers. For a technical coding perspective, see adding Glean to your project.

Glean is the standard telemetry platform required for all new Mozilla products. While there are some upfront costs to integrating Glean in your product, this pays off in easier long-term maintenance and a rich set of self-serve analysis tools. The Glean team is happy to support your telemetry integration and make it successful. Find us in #glean or email glean-team@mozilla.com.

Building a telemetry plan

The Glean SDKs provide support for answering basic product questions out-of-the-box, such as daily active users, product version and platform information.

However, it is also a good idea to have a sense of any additional product-specific questions you are trying to answer with telemetry, and, when possible, in collaboration with a data scientist.

This of course helps for your own planning, but it is also invaluable for the Glean team to support you, since it lets us understand the ultimate goals of your product's telemetry, ensure the design will meet those goals, and identify any new features that may be required.

It is best to frame this document in the form of questions and use cases rather than as specific data points and schemas.

Integrating Glean into your product

The technical steps for integrating Glean in your product are documented in their own chapter for each supported platform. We recommend having a member of the Glean team review this integration to catch any potential pitfalls.

(Optional) Adapting Glean to your platform

The Glean SDKs are a collection of cross-platform libraries and tools that facilitate collection of Glean-conforming telemetry from applications.

Consult the list of the currently supported platforms and languages.

If your product's tech stack isn't currently supported, please reach out to the Glean team: significant work will be required to create a new integration.

In previous efforts, this has ranged from 1 to 3 months FTE of work, so it is important to plan for this work well in advance.

While the first phase of this work generally requires the specialized expertise of the Glean team, the second phase can benefit from outside developers to move faster.

(Optional) Designing ping submission

The Glean SDKs periodically send telemetry to our servers in a bundle known as a "ping".

For mobile applications with common interaction models, such as web browsers, the Glean SDKs provide basic pings out-of-the-box.

For other kinds of products, it may be necessary to carefully design what triggers the submission of a ping.

It is important to have a solid telemetry plan (see above) so we can make sure the ping submission will be able to answer the telemetry questions required of the product.

(Optional) New metric types

The Glean SDKs have a number of different metric types that they can collect.

Metric types provide "guardrails" to make sure that telemetry is being collected correctly, and to present the data at analysis time more automatically.

Occasionally, products need to collect data that doesn't fit neatly into one of the available metric types.

Glean has a process to request and introduce more metric types and we will work with you to design something appropriate.

This design and implementation work takes at least 4 weeks, though we are working on the foundation to accelerate that.

Having a telemetry plan (see above) will help to identify this work early.

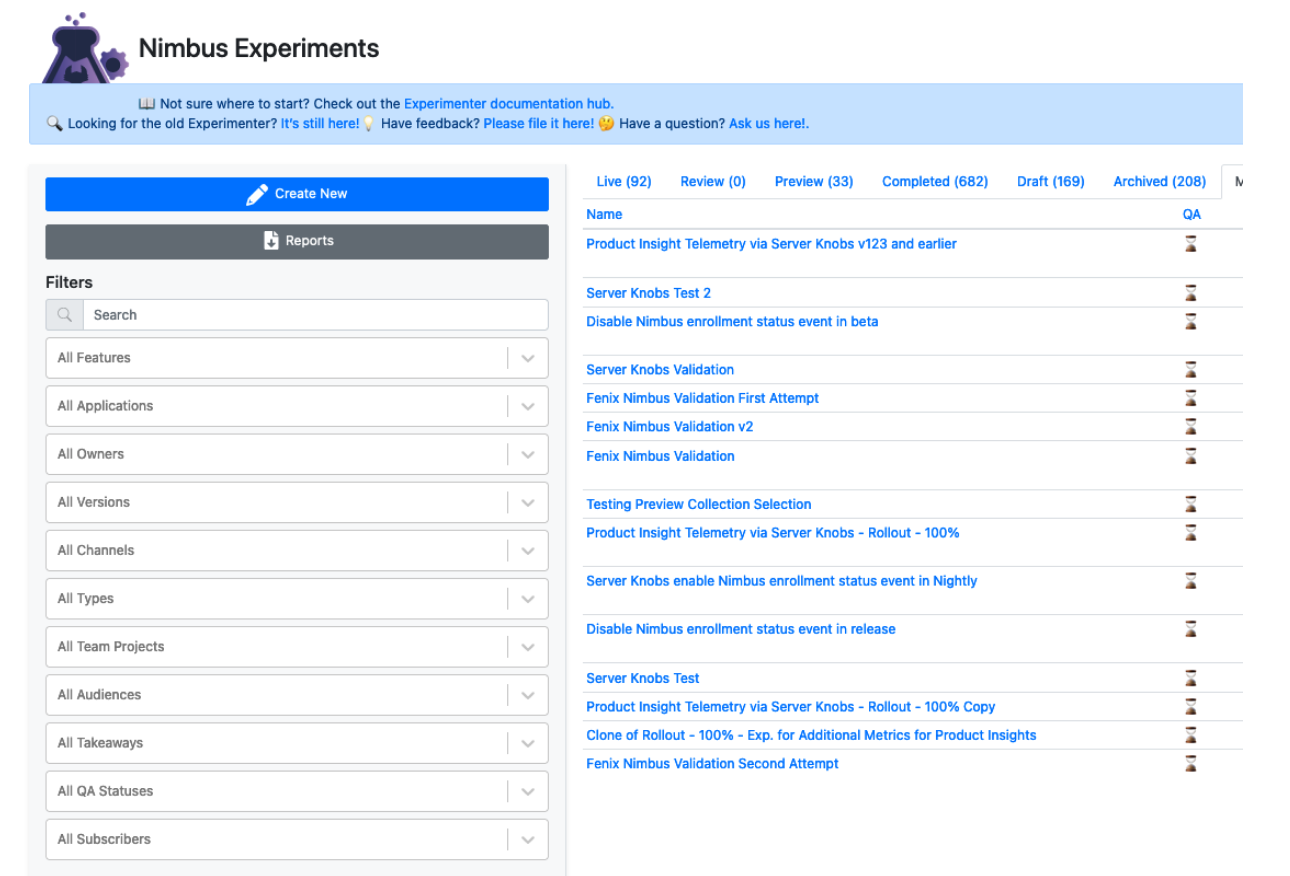

Integrating Glean into GLAM

To use GLAM for analysis of your application's data, file a ticket in the GLAM repository.

A data engineer from the GLAM team will reach out to you if further information is required.

Metrics

In this chapter we describe how to define and use the Glean SDK's metrics.

Adding new metrics

Table of Contents

- Process overview

- Choosing a metric type

- For how long do you need to collect this data?

- When should the Glean SDK automatically clear the measurement?

- What should this new metric be called?

- What if none of these metric types is the right fit?

- How do I make sure my metric is working?

- Adding the metric to the metrics.yaml file

- Using the metric from your code

Process overview

When adding a new metric, the process is:

- Consider the question you are trying to answer with this data, and choose the metric type and parameters to use.

- Add a new entry to metrics.yaml.

- Add code to your project to record into the metric by calling the Glean SDK.

Important: Any new data collection requires documentation and data-review. This is also required for any new metric automatically collected by the Glean SDK.

Choosing a metric type

The following is a set of questions to ask about the data being collected to help better determine which metric type to use.

Is it a single measurement?

If the value is true or false, use a boolean metric.

If the value is a string, use a string metric, for example to record the name of the default search engine.

Beware: string metrics are exceedingly general, and you are probably best served by selecting the most specific metric for the job, since you'll get better error checking and richer analysis tools for free. For example, avoid storing a number in a string metric; you probably want a counter metric instead.

If you need to store multiple string values in a metric, use a string list metric. For example, you may want to record the list of other Mozilla products installed on the device.

For all of the metric types in this section that measure single values, it is especially important to consider how the lifetime of the value relates to the ping it is being sent in. Since these metrics don't perform any aggregation on the client side, when a ping containing the metric is submitted, it will contain only the "last known" value for the metric, potentially resulting in data loss. There is further discussion of metric lifetimes below.

Are you measuring user behavior?

For tracking user behavior, it is usually meaningful to know the order of events that lead to the use of a feature. Therefore, for user behavior, an event metric is usually the best choice.

Be aware, however, that events can be particularly expensive to transmit, store and analyze, so they should not be used for higher-frequency measurements, though this is less of a concern in server environments.

Are you counting things?

If you want to know how many times something happened, use a counter metric. If you are counting a group of related things, or you don't know what all of the things to count are at build time, use a labeled counter metric.

If you need to know how many times something happened relative to the number of times something else happened, use a rate metric.

If you need to know when the things being counted happened relative to other things, consider using an event.
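To make the difference between these counting metrics concrete, here is a minimal in-memory sketch. The classes are hypothetical stand-ins, not the Glean SDK API: a counter only goes up, a labeled counter keeps one count per label that need not be known at build time, and a rate keeps its numerator and denominator separately so the ratio can be computed at analysis time.

```python
from collections import Counter


class CounterMetric:
    """Hypothetical counter: the value can only go up."""

    def __init__(self):
        self.value = 0
        self.invalid_value_errors = 0

    def add(self, amount=1):
        if amount <= 0:
            # Non-positive increments are rejected and counted as errors in this sketch.
            self.invalid_value_errors += 1
            return
        self.value += amount


class LabeledCounterMetric:
    """Hypothetical labeled counter: labels need not be known at build time."""

    def __init__(self):
        self.values = Counter()

    def add(self, label, amount=1):
        if amount > 0:  # non-positive amounts are ignored in this sketch
            self.values[label] += amount


class RateMetric:
    """Hypothetical rate: a numerator reported together with its denominator."""

    def __init__(self):
        self.numerator = 0
        self.denominator = 0

    def add_to_numerator(self, amount=1):
        self.numerator += amount

    def add_to_denominator(self, amount=1):
        self.denominator += amount


tabs_opened = CounterMetric()
tabs_opened.add()
tabs_opened.add(2)

searches = LabeledCounterMetric()
for engine in ["google", "duckduckgo", "google"]:
    searches.add(engine)

failed_loads = RateMetric()
for ok in [True, True, False, True]:
    failed_loads.add_to_denominator()  # every page load
    if not ok:
        failed_loads.add_to_numerator()  # only the failures

print(tabs_opened.value)  # 3
print(searches.values["google"])  # 2
print(failed_loads.numerator, failed_loads.denominator)  # 1 4
```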

Are you measuring time?

If you need to record an absolute time, use a datetime metric. Datetimes are recorded in the user's local time, according to their device's real time clock, along with a timezone offset from UTC. Datetime metrics allow specifying the resolution they are collected at, and to stay lean, they should only be collected at the minimum resolution required to answer your question.
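The idea of collecting a local datetime at a chosen resolution can be sketched with the standard library: fields finer than the resolution are dropped while the UTC offset is kept. The resolution names and the `truncate_local_datetime` helper are illustrative assumptions; how the SDK actually serializes the truncated value is not shown here.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical resolution names: fields finer than the resolution are zeroed.
_TRUNCATE = {
    "day": dict(hour=0, minute=0, second=0, microsecond=0),
    "hour": dict(minute=0, second=0, microsecond=0),
    "minute": dict(second=0, microsecond=0),
    "second": dict(microsecond=0),
}


def truncate_local_datetime(dt, resolution):
    """Truncate a timezone-aware local datetime, keeping its UTC offset."""
    if dt.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    return dt.replace(**_TRUNCATE[resolution])


# A local time two hours ahead of UTC.
local = datetime(2024, 3, 5, 14, 27, 31, 123456, tzinfo=timezone(timedelta(hours=2)))
print(truncate_local_datetime(local, "minute").isoformat())  # 2024-03-05T14:27:00+02:00
```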

If you need to record how long something takes you have a few options.

If you need to measure the total time spent doing a particular task, look to the timespan metric. Timespan metrics allow specifying the resolution they are collected at, and to stay lean, they should only be collected at the minimum resolution required to answer your question. Note that this metric should only be used to measure time on a single thread. If multiple overlapping timespans are measured for the same metric, an invalid state error is recorded.

If you need to measure the relative occurrences of many timings, use a timing distribution. It builds a histogram of timing measurements, and is safe to record multiple concurrent timespans on different threads.
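The contrast between the two timing metrics can be sketched as follows. These classes are hypothetical and only mirror the behavior described above: a timespan tracks a single running measurement and counts an invalid-state error on an overlapping start, while a timing distribution hands out a separate timer id per measurement so concurrent timings don't collide. A real timing distribution buckets samples into a histogram; this sketch keeps raw samples for simplicity.

```python
import time
from itertools import count


class TimespanMetric:
    """Hypothetical timespan: a single measurement at a time on one thread."""

    def __init__(self):
        self._start_ns = None
        self.elapsed_ns = None
        self.invalid_state_errors = 0

    def start(self):
        if self._start_ns is not None:
            # Overlapping start: count an invalid-state error, keep the first timer.
            self.invalid_state_errors += 1
            return
        self._start_ns = time.monotonic_ns()

    def stop(self):
        if self._start_ns is None:
            # Stopping a timer that never started is also treated as invalid here.
            self.invalid_state_errors += 1
            return
        self.elapsed_ns = time.monotonic_ns() - self._start_ns
        self._start_ns = None


class TimingDistributionMetric:
    """Hypothetical timing distribution: every start() gets its own timer id."""

    def __init__(self):
        self._next_id = count()
        self._running = {}
        self.samples_ns = []  # a real SDK buckets these into a histogram

    def start(self):
        timer_id = next(self._next_id)
        self._running[timer_id] = time.monotonic_ns()
        return timer_id

    def stop_and_accumulate(self, timer_id):
        started = self._running.pop(timer_id, None)
        if started is not None:
            self.samples_ns.append(time.monotonic_ns() - started)


span = TimespanMetric()
span.start()
span.start()  # overlaps the first start
span.stop()
print(span.invalid_state_errors)  # 1

dist = TimingDistributionMetric()
first, second = dist.start(), dist.start()  # two concurrent measurements
dist.stop_and_accumulate(second)
dist.stop_and_accumulate(first)
print(len(dist.samples_ns))  # 2
```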

If you need to know the time between multiple distinct actions that aren't a simple "begin" and "end" pair, consider using an event.

For how long do you need to collect this data?

Think carefully about how long the metric will be needed, and set the expires parameter to disable the metric at the earliest possible time.

This is an important component of Mozilla's lean data practices.

When the metric passes its expiration date (determined at build time), it will automatically stop collecting data.

When a metric's expiration is within 14 days, emails will be sent from telemetry-alerts@mozilla.com to the notification_emails addresses associated with the metric.

At that time, the metric should be removed, which involves removing it from the metrics.yaml file and removing uses of it in the source code.

Removing a metric does not affect the availability of data already collected by the pipeline.

If the metric is still needed after its expiration date, it should go back for another round of data review to have its expiration date extended.

Important: Ensure that telemetry alerts are received and reviewed in a timely manner. Expired metrics don't record any data, so extending or removing a metric should be done before it expires. Consider adding both a group email address and an individual who is responsible for this metric to the notification_emails list.
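The expiration rules above can be illustrated with a small classification helper. `expiry_status` is hypothetical: it only handles `never` and ISO dates, and it treats the expiry date itself as already expired, which is an assumption rather than documented behavior.

```python
from datetime import date, timedelta

# Alert window described above: emails start 14 days before expiry.
ALERT_WINDOW = timedelta(days=14)


def expiry_status(expires, today):
    """Hypothetical helper classifying a metric's `expires` value.

    Handles only "never" and ISO dates such as "2019-06-01".
    Treats the expiry date itself as already expired (an assumption).
    """
    if expires == "never":
        return "active"
    expiry = date.fromisoformat(expires)
    if today >= expiry:
        return "expired"
    if expiry - today <= ALERT_WINDOW:
        return "expiring soon"
    return "active"


today = date(2024, 1, 1)
print(expiry_status("2019-06-01", today))  # expired
print(expiry_status("2024-01-10", today))  # expiring soon
print(expiry_status("2024-06-01", today))  # active
```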

When should the Glean SDK automatically clear the measurement?

The lifetime parameter of a metric defines when its value will be cleared. There are three lifetime options available:

ping (default)

The metric is cleared each time it is submitted in the ping. This is the most common case, and should be used for metrics that are highly dynamic, such as things computed in response to the user's interaction with the application.

application

The metric is related to an application run, and is cleared after the application restarts and any Glean-owned ping, due at startup, is submitted. This should be used for things that are constant during the run of an application, such as the operating system version. In practice, these metrics are generally set during application startup. A common mistake is using the ping lifetime for these types of metrics, which means that they will only be included in the first ping sent during a particular run of the application.

user

NOTE: Reach out to the Glean team before using this.

The metric is part of the user's profile and will live as long as the profile lives.

This is often not the best choice unless the metric records a value that really needs to be persisted for the full lifetime of the user profile, e.g. an identifier like the client_id or the day the product was first executed. It is rare to use this lifetime outside of some metrics that are built into the Glean SDK.

While lifetimes are important to understand for all metric types, they are particularly important for the metric types that record single values and don't aggregate on the client (boolean, string, labeled_string, string_list, datetime and uuid), since these metrics will send the "last known" value and missing the earlier values could be a form of unintended data loss.
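The clearing rules for the three lifetimes can be modeled with a toy store. `LifetimeStore` is hypothetical and simplified: it models a single ping, and it clears application-lifetime values as soon as the application restarts, ignoring any Glean-owned ping due at startup.

```python
class LifetimeStore:
    """Hypothetical in-memory store mimicking the three metric lifetimes.

    Simplification: one ping only, and application-lifetime values are cleared
    as soon as the application restarts (startup pings are ignored).
    """

    LIFETIMES = ("ping", "application", "user")

    def __init__(self):
        self._values = {}  # metric name -> (lifetime, value)

    def set(self, name, value, lifetime="ping"):
        if lifetime not in self.LIFETIMES:
            raise ValueError(f"unknown lifetime: {lifetime!r}")
        self._values[name] = (lifetime, value)

    def _clear(self, lifetime):
        self._values = {n: lv for n, lv in self._values.items() if lv[0] != lifetime}

    def submit_ping(self):
        """Snapshot every stored value, then clear the ping-lifetime ones."""
        snapshot = {name: value for name, (_, value) in self._values.items()}
        self._clear("ping")
        return snapshot

    def restart_application(self):
        """Application-lifetime values are dropped; user-lifetime values survive."""
        self._clear("application")


store = LifetimeStore()
for lifetime in LifetimeStore.LIFETIMES:
    store.set(f"turbo_mode_{lifetime}", False, lifetime)

ping_1 = store.submit_ping()  # all three values
ping_2 = store.submit_ping()  # the ping-lifetime value is gone
store.restart_application()
ping_3 = store.submit_ping()  # only the user-lifetime value remains
```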

A lifetime example

Let's work through an example to see how these lifetimes play out in practice. Let's suppose we have a user preference, "turbo mode", which defaults to false, but the user can turn it to true at any time. We want to know when this flag is true so we can measure its effect on other metrics in the same ping. In the following diagram, we look at a time period that sends 4 pings across two separate runs of the application. We assume here that, like the Glean SDK's built-in metrics ping, the developer writing the metric isn't in control of when the ping is submitted.

In this diagram, the ping measurement windows are represented as rectangles, but the moment the ping is "submitted" is represented by its right edge. The user changes the "turbo mode" setting from false to true in the first run, and then toggles it again twice in the second run.

- A. Ping lifetime, set on change: The value isn't included in Ping 1, because Glean doesn't know about it yet. It is included in the first ping after being recorded (Ping 2), which causes it to be cleared.

- B. Ping lifetime, set on init and change: The default value is included in Ping 1, and the changed value is included in Ping 2, which causes it to be cleared. It therefore misses Ping 3, but when the application is started, it is recorded again and it is included in Ping 4. However, this causes it to be cleared again and it is not in Ping 5.

- C. Application lifetime, set on change: The value isn't included in Ping 1, because Glean doesn't know about it yet. After the value is changed, it is included in Pings 2 and 3, but then due to application restart it is cleared, so it is not included until the value is manually toggled again.

- D. Application, set on init and change: The default value is included in Ping 1, and the changed value is included in Pings 2 and 3. Even though the application startup causes it to be cleared, it is set again, and all subsequent pings also have the value.

- E. User, set on change: The default value is missing from Ping 1, but since user lifetime metrics aren't cleared unless the user profile is reset (e.g. on Android, when the product is uninstalled), it is included in all subsequent pings.

- F. User, set on init and change: Since user lifetime metrics aren't cleared unless the user profile is reset, it is included in all subsequent pings. This would be true even if the "turbo mode" preference were never changed again.

Note that for all of the metric configurations, the toggle of the preference off and on during Ping 4 is completely missed. If you need to create a ping containing one, and only one, value for this metric, consider using a custom ping to create a ping whose lifetime matches the lifetime of the value.

What if none of these lifetimes are appropriate?

If the timing at which the metric is sent in the ping needs to closely match the timing of the metric's value, the best option is to use a custom ping to manually control when pings are sent.

This is especially useful when metrics need to be tightly related to one another, for example when you need to measure the distribution of frame paint times when a particular rendering backend is in use. If these metrics were in different pings, with different measurement windows, it would be much harder to reason about them with any certainty.

What should this new metric be called?

Metric names have a maximum length of 70 characters.

Reuse names from other applications

There's a lot of value in using the same name for analogous metrics collected across different products. For example, BigQuery makes it simple to join columns with the same name across multiple tables. Therefore, we encourage you to investigate if a similar metric is already being collected by another product. If it is, there may be an opportunity for code reuse across these products, and if all the projects are using the Glean SDK, it's easy for libraries to send their own metrics. If sharing the code doesn't make sense, at a minimum we recommend using the same metric name for similar actions and concepts whenever possible.

Make names unique within an application

Metric identifiers (the combination of a metric's category and name) must be unique across all metrics that are sent by a single application.

This includes not only the metrics defined in the app's metrics.yaml, but also those in the metrics.yaml of any Glean SDK-using library that the application uses, including the Glean SDK itself.

Therefore, care should be taken to name things specifically enough so as to avoid namespace collisions.

In practice, this generally involves thinking carefully about the category of the metric, more than the name.

Note: Duplicate metric identifiers are not currently detected at build time. See bug 1578383 for progress on that. However, the probe_scraper process, which runs nightly, will detect duplicate metrics and e-mail the notification_emails associated with the given metrics.
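Until duplicates are caught at build time, a project could run its own pre-build check. The sketch below is hypothetical: it takes (category, name) pairs collected from several metrics.yaml-like sources, flags names over the 70-character limit, and reports identifiers defined more than once. The snake_case and dotted snake_case patterns are assumptions modeled on the naming conventions described in this chapter.

```python
import re
from collections import defaultdict

MAX_NAME_LENGTH = 70  # from the naming rules above
# Assumed patterns: snake_case names and dotted snake_case categories.
NAME_RE = re.compile(r"[a-z_][a-z0-9_]*")
CATEGORY_RE = re.compile(r"[a-z_][a-z0-9_]*(\.[a-z_][a-z0-9_]*)*")


def check_metrics(sources):
    """Return problems found across several metrics.yaml-like sources.

    `sources` maps a source label (e.g. "app") to the (category, name)
    pairs it defines. Hypothetical helper for illustration only.
    """
    problems = []
    seen = defaultdict(list)  # "category.name" -> sources defining it
    for source, metrics in sources.items():
        for category, name in metrics:
            if not CATEGORY_RE.fullmatch(category):
                problems.append(f"{source}: bad category {category!r}")
            if not NAME_RE.fullmatch(name) or len(name) > MAX_NAME_LENGTH:
                problems.append(f"{source}: bad name {name!r}")
            seen[f"{category}.{name}"].append(source)
    for identifier, where in seen.items():
        if len(where) > 1:
            problems.append(f"duplicate {identifier} in {', '.join(where)}")
    return problems


print(check_metrics({
    "app": [("search", "performed"), ("toolbar", "click")],
    "some-library": [("toolbar", "click")],
}))  # ['duplicate toolbar.click in app, some-library']
```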

Be as specific as possible

More broadly, you should choose the names of metrics to be as specific as possible. It is not necessary to put the type of the metric in the category or name, since this information is retained in other ways through the entire end-to-end system.

For example, if defining a set of events related to search, put them in a category called search, rather than just events or search_events. The events word here would be redundant.

What if none of these metric types is the right fit?

The current set of metrics the Glean SDKs support is based on known common use cases, but new use cases are discovered all the time.

Please reach out to us on #glean:mozilla.org. If you think you need a new metric type, we have a process for that.

How do I make sure my metric is working?

The Glean SDK has rich support for writing unit tests involving metrics. Writing a good unit test is a large topic, but in general, you should write unit tests for all new telemetry that do the following:

- Perform the operation being measured.

- Assert that metrics contain the expected data, using the testGetValue API on the metric.

- Where applicable, assert that no errors are recorded, such as when values are out of range, using the testGetNumRecordedErrors API.

In addition to unit tests, it is good practice to validate the incoming data for the new metric on a pre-release channel to make sure things are working as expected.

Adding the metric to the metrics.yaml file

The metrics.yaml file defines the metrics your application or library will send.

They are organized into categories.

The overall organization is:

# Required to indicate this is a `metrics.yaml` file
$schema: moz://mozilla.org/schemas/glean/metrics/2-0-0

toolbar:
  click:
    type: event
    description: |
      Event to record toolbar clicks.
    metadata:
      tags:
        - Interaction
    notification_emails:
      - CHANGE-ME@example.com
    bugs:
      - https://bugzilla.mozilla.org/123456789/
    data_reviews:
      - http://example.com/path/to/data-review
    expires: 2019-06-01 # <-- Update to a date in the future

  double_click:
    ...

Refer to the metrics YAML registry format for a full reference on the metrics.yaml file structure.

Using the metric from your code

The reference documentation for each metric type goes into detail about using each metric type from your code.

Note that all Glean metrics are write-only. Outside of unit tests, it is impossible to retrieve a value from the Glean SDK's database. While this may seem limiting, this is required to:

- enforce the semantics of certain metric types (e.g. that Counters can only be incremented).

- ensure the lifetime of the metric (when it is cleared or reset) is correctly handled.

Capitalization

One thing to note is that we try to adhere to the coding conventions of each language wherever possible, so the metric name and category in the metrics.yaml (which is in snake_case) may be changed to some other case convention, such as camelCase, when used from code.

Event extras and labels are never capitalized, no matter the target language.

Category and metric names in the metrics.yaml are in snake_case, but given the Kotlin coding standards defined by ktlint, these identifiers must be camelCase in Kotlin.

For example, the metric defined in the metrics.yaml as:

views:
  login_opened:
    ...

is accessible in Kotlin as:

import org.mozilla.yourApplication.GleanMetrics.Views

GleanMetrics.Views.loginOpened...

Category and metric names in the metrics.yaml are in snake_case, but given the Swift coding standards defined by swiftlint, these identifiers must be camelCase in Swift.

For example, the metric defined in the metrics.yaml as:

views:
  login_opened:
    ...

is accessible in Swift as:

GleanMetrics.Views.loginOpened...

Category and metric names in the metrics.yaml are in snake_case, which matches the PEP8 standard, so no translation is needed for Python.

Given the Rust coding standards defined by clippy, identifiers should all be snake_case.

This includes category names, which in the metrics.yaml are dotted.snake_case:

compound.category:
  metric_name:
    ...

In Rust this becomes:

use firefox_on_glean::metrics;

metrics::compound_category::metric_name...

JavaScript identifiers are customarily camelCase.

This requires transforming a metric defined in the metrics.yaml as:

compound.category:
  metric_name:
    ...

to a form useful in JS as:

import * as compoundCategory from "./path/to/generated/files/compoundCategory.js";

compoundCategory.metricName...

Firefox Desktop has Coding Style Guidelines for both C++ and JS.

This results in, for a metric defined in the metrics.yaml as:

compound.category:
  metric_name:
    ...

an identifier that looks like:

C++

#include "mozilla/glean/SomeMetrics.h"

mozilla::glean::compound_category::metric_name...

JavaScript

Glean.compoundCategory.metricName...
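The renaming rules in this section can be approximated with a few string transformations. This sketch assumes the conversion simply splits on `_` and `.`, which reproduces the examples above; glean_parser's own generators may handle edge cases differently, and the helper names are hypothetical.

```python
def camel_case(identifier):
    """snake_case or dotted.snake_case -> camelCase (e.g. JS and Kotlin members)."""
    parts = [p for p in identifier.replace(".", "_").split("_") if p]
    return parts[0] + "".join(p.capitalize() for p in parts[1:])


def pascal_case(identifier):
    """Category -> PascalCase, as for generated Kotlin/Swift objects like `Views`."""
    camel = camel_case(identifier)
    return camel[:1].upper() + camel[1:]


def snake_module(identifier):
    """dotted.snake_case category -> snake_case Rust module or C++ namespace."""
    return identifier.replace(".", "_")


print(pascal_case("views"), camel_case("login_opened"))  # Views loginOpened
print(camel_case("compound.category"))  # compoundCategory
print(snake_module("compound.category"))  # compound_category
```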

Unit testing Glean metrics

In order to support unit testing inside of client applications using the Glean SDK, a set of testing API functions have been included. The intent is to make the Glean SDKs easier to test 'out of the box' in any client application they may be used in. These functions expose a way to inspect and validate recorded metric values within the client application, but are restricted to test code only. (Outside of a testing context, Glean APIs are otherwise write-only so that Glean can enforce semantics and constraints about data.)

Example of using the test API

In order to enable metrics testing APIs in each SDK, Glean must be reset and put in testing mode. For documentation on how to do that, refer to Initializing - Testing API.

Check out full examples of using the metric testing API on each Glean SDK. All examples omit the step of resetting Glean for tests to focus solely on metrics unit testing.

Kotlin

// Record a metric value with extra to validate against
GleanMetrics.BrowserEngagement.click.record(
    BrowserEngagementExtras(font = "Courier")
)

// Record more events without extras attached
BrowserEngagement.click.record()
BrowserEngagement.click.record()

// Retrieve a snapshot of the recorded events
val events = BrowserEngagement.click.testGetValue()!!

// Check if we collected all 3 events in the snapshot
assertEquals(3, events.size)

// Check extra key/value for first event in the list
assertEquals("Courier", events.elementAt(0).extra?.get("font"))

Swift

// Record a metric value with extra to validate against
GleanMetrics.BrowserEngagement.click.record([.font: "Courier"])

// Record more events without extras attached
BrowserEngagement.click.record()
BrowserEngagement.click.record()

// Retrieve a snapshot of the recorded events
let events = BrowserEngagement.click.testGetValue()!

// Check if we collected all 3 events in the snapshot
XCTAssertEqual(3, events.count)

// Check extra key/value for first event in the list
XCTAssertEqual("Courier", events[0].extra?["font"])

Python

from glean import load_metrics

metrics = load_metrics("metrics.yaml")

# Increment the 'visit' counter
metrics.url.visit.add(1)

# Check that a value was recorded into the 'visit' counter
assert metrics.url.visit.test_get_value() is not None

# Check the recorded value
assert 1 == metrics.url.visit.test_get_value()

Validating the collected data

It is worth investing time when instrumentation is added to the product to understand if the data looks reasonable and expected, and to take action if it does not. It is important to highlight that an automated rigorous test suite for testing metrics is an important precondition for building confidence in newly collected data (especially business-critical ones).

The following checklist could help guide this validation effort.

1. Before releasing the product with the new data collection, make sure the data looks as expected by generating sample data on a local machine and inspecting it in the Glean Debug View (see the debugging facilities):

   a. Is the data showing up in the correct ping(s)?

   b. Does the metric report the expected data?

   c. If exercising the same path again, is it expected for the data to be submitted again? And does it?

2. As users start adopting the version of the product with the new data collection (usually within a few days of release), the initial data coming in should be checked to understand how the measurements are behaving in the wild:

   a. Does this organically-sent data satisfy the same quality expectations the manually-sent data did in Step 1?

   b. Is the metric showing up correctly in the Glean Dictionary?

   c. Are any new errors being reported for the new data points? If so, do they point to an edge case that should be documented and/or fixed in the code?

   d. As the first three or four days pass, distributions will converge towards their final shapes. Consider extreme values: is there a very high number of zero/minimum values when there shouldn't be, or values near what you would realistically expect to be the maximum (e.g. a timespan for a single day that is reporting close to 86,400 seconds)? In case of oddities in the data, how much of the product population is affected? Does this require changing the instrumentation or documenting the behavior?

How to annotate metrics without changing the source code?

Data practitioners who lack familiarity with YAML or product-specific development workflows can still document any discovered edge cases and anomalies by finding the metric in the Glean Dictionary and adding commentary from the metric page.

3. After enough data is collected from the product population, are the expectations from the previous points still met?
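The extreme-value check in item 2d can be made more systematic with a small summary over the incoming samples. `extreme_value_report` and its `near_fraction` threshold are hypothetical and illustrative, not a data platform standard; the sample values below are made up to show the calculation.

```python
def extreme_value_report(samples, minimum, maximum, near_fraction=0.01):
    """Share of samples at the minimum, or within `near_fraction` of the maximum.

    Hypothetical helper; the threshold is illustrative only.
    """
    if not samples:
        raise ValueError("no samples")
    near_max = maximum - near_fraction * (maximum - minimum)
    at_min = sum(1 for s in samples if s <= minimum)
    at_max = sum(1 for s in samples if s >= near_max)
    return {
        "share_at_min": at_min / len(samples),
        "share_near_max": at_max / len(samples),
    }


SECONDS_PER_DAY = 86_400  # upper bound for a timespan covering a single day
daily_usage_seconds = [0, 0, 0, 120, 3_600, 7_200, 86_390, 86_400]  # made-up samples
print(extreme_value_report(daily_usage_seconds, 0, SECONDS_PER_DAY))
# {'share_at_min': 0.375, 'share_near_max': 0.25}
```

A high share at either end does not prove a bug, but it points at where to look before the data is relied upon.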

Does the product support multiple release channels?

In case of multiple distinct product populations, the above checklist should ideally be run against all of them. For example, in case of Firefox, the checklist should be run for the Nightly population first, then on the other channels as the collection moves across the release trains.

Error reporting

The Glean SDKs record the number of errors that occur when metrics are passed invalid data or are otherwise used incorrectly.

This information is reported back in special labeled counter metrics in the glean.error category.

Error metrics are included in the same pings as the metric that caused the error.

Additionally, error metrics are always sent in the metrics ping.

The following categories of errors are recorded:

- invalid_value: The metric value was invalid.

- invalid_label: The label on a labeled metric was invalid.

- invalid_state: The metric caught an invalid state while recording.

- invalid_overflow: The metric value to be recorded overflows the metric-specific upper range.

- invalid_type: The metric value is not of the expected type. This error type is only recorded by the Glean JavaScript SDK. This error may only happen in dynamically typed languages.

For example, if you had a string metric and passed it a string that was too long:

MyMetrics.stringMetric.set("this_string_is_longer_than_the_limit_for_string_metrics")

The following error metric counter would be incremented:

Glean.error.invalidOverflow["my_metrics.string_metric"].add(1)

Resulting in the following keys in the ping:

{
  "metrics": {
    "labeled_counter": {
      "glean.error.invalid_overflow": {
        "my_metrics.string_metric": 1
      }
    }
  }
}
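The bookkeeping behind this example can be sketched in a few lines: an overflowing string is truncated and counted under `glean.error.invalid_overflow`, keyed by the metric's `category.name`, and the counters are then shaped like the ping excerpt above. The 20-character limit is a hypothetical value chosen so the example string overflows, and truncating before storing is an assumption of this sketch; the real limit and behavior are defined by the Glean SDK.

```python
import json
from collections import defaultdict

# Hypothetical limit chosen so the example string overflows.
MAX_STRING_LENGTH = 20

# "glean.error.<error type>" -> {"category.name" of the offending metric: count}
error_counters = defaultdict(lambda: defaultdict(int))


def set_string(identifier, value, store):
    """Record a string metric, truncating it and counting an overflow error."""
    if len(value) > MAX_STRING_LENGTH:
        error_counters["glean.error.invalid_overflow"][identifier] += 1
        value = value[:MAX_STRING_LENGTH]
    store[identifier] = value


def error_payload():
    """Shape the error counters like the ping excerpt above."""
    return {"metrics": {"labeled_counter": {
        name: dict(labels) for name, labels in error_counters.items()
    }}}


strings = {}
set_string("my_metrics.string_metric",
           "this_string_is_longer_than_the_limit_for_string_metrics", strings)
print(json.dumps(error_payload(), indent=2))
```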

If you have a debug build of the Glean SDK, details about the errors being recorded are included in the logs. This detailed information is not included in Glean pings.

The Glean JavaScript SDK provides a slightly different set of metrics and pings

If you are looking for the metrics collected by Glean.js, refer to the documentation over on the @mozilla/glean.js repository.

Metrics

This document enumerates the metrics collected by this project using the Glean SDK. This project may depend on other projects which also collect metrics. This means you might have to go searching through the dependency tree to get a full picture of everything collected by this project.

Pings

all-pings

These metrics are sent in every ping.

All Glean pings contain built-in metrics in the ping_info and client_info sections.

In addition to those built-in metrics, the following metrics are added to the ping:

| Name | Type | Description | Data reviews | Extras | Expiration | Data Sensitivity |
|---|---|---|---|---|---|---|
| glean.client.annotation.experimentation_id | string | An experimentation identifier derived and provided by the application for the purpose of experimentation enrollment. | Bug 1848201 | | never | 1 |
| glean.error.invalid_label | labeled_counter | Counts the number of times a metric was set with an invalid label. The labels are the category.name identifier of the metric. | Bug 1499761 | | never | 1 |
| glean.error.invalid_overflow | labeled_counter | Counts the number of times a metric was set to a value that overflowed. The labels are the category.name identifier of the metric. | Bug 1591912 | | never | 1 |
| glean.error.invalid_state | labeled_counter | Counts the number of times a timing metric was used incorrectly. The labels are the category.name identifier of the metric. | Bug 1499761 | | never | 1 |
| glean.error.invalid_value | labeled_counter | Counts the number of times a metric was set to an invalid value. The labels are the category.name identifier of the metric. | Bug 1499761 | | never | 1 |
| glean.ping.uploader_capabilities | string_list | The list of requested uploader capabilities for the ping this is sent in. Should be the same as the ones defined for that particular ping. This metric is only attached to a ping if it already contains other data. | Bug 1976479 | | never | 1 |
| glean.restarted | event | Recorded when the Glean SDK is restarted. Only included in custom pings that record events. For more information, please consult the Custom Ping documentation. | Bug 1716725 | | never | 1 |

baseline

This is a built-in ping that is assembled out of the box by the Glean SDK.

See the Glean SDK documentation for the baseline ping.

This ping is sent if empty.

This ping includes the client id.

Data reviews for this ping:

- https://bugzilla.mozilla.org/show_bug.cgi?id=1512938#c3

- https://bugzilla.mozilla.org/show_bug.cgi?id=1599877#c25

Bugs related to this ping:

Reasons this ping may be sent:

- active: The ping was submitted when the application became active again, which includes when the application starts. In earlier versions, this was called `foreground`. *Note*: this ping will not contain the `glean.baseline.duration` metric.

- dirty_startup: The ping was submitted at startup, because in the last session the application process was killed before the Glean SDK had the chance to generate this ping on becoming inactive. *Note*: this ping will not contain the `glean.baseline.duration` metric.

- inactive: The ping was submitted when becoming inactive. In earlier versions, this was called `background`.
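The mapping from application lifecycle events to baseline ping reasons, and which of those pings carry glean.baseline.duration, can be sketched as follows. This is a hypothetical Python model, not the SDK's scheduler: `BaselineScheduler`, its methods, the injectable clock, and emitting dirty_startup before active at startup are all assumptions.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class BaselinePing:
    reason: str
    duration_s: Optional[float]  # stands in for glean.baseline.duration; None = not included


class BaselineScheduler:
    """Hypothetical model of when each baseline ping reason applies."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._session_start: Optional[float] = None

    def on_startup(self, previous_session_was_dirty: bool) -> List[BaselinePing]:
        pings: List[BaselinePing] = []
        if previous_session_was_dirty:
            # The last process was killed while active, so no duration was captured.
            pings.append(BaselinePing("dirty_startup", None))
        # Starting the application also counts as becoming active.
        pings.extend(self.on_active())
        return pings

    def on_active(self) -> List[BaselinePing]:
        self._session_start = self._clock()
        return [BaselinePing("active", None)]  # active pings carry no duration

    def on_inactive(self) -> List[BaselinePing]:
        duration = None
        if self._session_start is not None:
            duration = self._clock() - self._session_start
            self._session_start = None
        return [BaselinePing("inactive", duration)]
```

Only the inactive ping has a complete session to measure, which is why the other two reasons omit the duration.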

All Glean pings contain built-in metrics in the ping_info and client_info sections.

In addition to those built-in metrics, the following metrics are added to the ping:

| Name | Type | Description | Data reviews | Extras | Expiration | Data Sensitivity |
|---|---|---|---|---|---|---|
| glean.baseline.duration | timespan | The duration of the last foreground session. | Bug 1512938 | | never | 1, 2 |
| glean.validation.pings_submitted | labeled_counter | A count of the built-in pings submitted, by ping type. This metric appears in both the metrics and baseline pings. On the metrics ping, the counts include the number of pings sent since the last metrics ping (including the last metrics ping); on the baseline ping, the counts include the number of pings sent since the last baseline ping (including the last baseline ping). Note: Previously this also recorded the number of submitted custom pings. Now it only records counts for the Glean built-in pings. | Bug 1586764 | | never | 1 |
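The phrase "including the last metrics ping" is consistent with a ping's own submission being counted only after that ping's payload has been collected. The Python sketch below models that ordering; the class name, the set of built-in ping names, and the ordering itself are assumptions for illustration rather than the SDK's code.

```python
from collections import Counter
from typing import Dict, Optional

# Assumed set of built-in ping names; custom pings are not counted.
BUILTIN_PINGS = {"baseline", "metrics", "events", "deletion-request"}


class PingsSubmittedModel:
    """Hypothetical model of glean.validation.pings_submitted."""

    def __init__(self) -> None:
        # One independent store per ping that carries the metric.
        self._stores: Dict[str, Counter] = {"metrics": Counter(), "baseline": Counter()}

    def submit(self, ping: str) -> Optional[Dict[str, int]]:
        payload = None
        if ping in self._stores:
            # Collect and clear first, so this ping's own submission is not in its payload...
            payload = dict(self._stores[ping])
            self._stores[ping].clear()
        if ping in BUILTIN_PINGS:
            # ...then count it, so it shows up in the next metrics and baseline pings.
            for store in self._stores.values():
                store[ping] += 1
        return payload
```

For example, after one metrics ping and two baseline pings, the next metrics ping would report `{"metrics": 1, "baseline": 2}` under this model.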

deletion-request

This is a built-in ping that is assembled out of the box by the Glean SDK.

See the Glean SDK documentation for the deletion-request ping.

This ping is sent if empty.

This ping includes the client id.

Data reviews for this ping:

- https://bugzilla.mozilla.org/show_bug.cgi?id=1587095#c6

- https://bugzilla.mozilla.org/show_bug.cgi?id=1702622#c2

Bugs related to this ping:

Reasons this ping may be sent:

- at_init: The ping was submitted at startup. Glean discovered that upload of data had been disabled between the last time it was run and this time.

- set_upload_enabled: The ping was submitted between Glean init and Glean shutdown. Glean was told, after init but before shutdown, that upload had changed from enabled to disabled.
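The two reasons differ only in when the enabled-to-disabled transition is noticed. A minimal Python sketch of that decision follows; `UploadStateTracker` and its method names are hypothetical, and the real upload-control API is named differently in each SDK.

```python
from typing import Optional


class UploadStateTracker:
    """Hypothetical model of when a deletion-request ping is submitted, and with which reason."""

    def __init__(self, persisted_upload_enabled: bool) -> None:
        # Upload state remembered from the previous run.
        self._enabled = persisted_upload_enabled

    def initialize(self, upload_enabled: bool) -> Optional[str]:
        # Upload was disabled between the last run and this one.
        reason = "at_init" if self._enabled and not upload_enabled else None
        self._enabled = upload_enabled
        return reason

    def set_upload_enabled(self, enabled: bool) -> Optional[str]:
        # Only an enabled -> disabled transition while running triggers the ping.
        reason = "set_upload_enabled" if self._enabled and not enabled else None
        self._enabled = enabled
        return reason
```

Re-enabling upload, or disabling it when it is already disabled, produces no deletion-request ping in this model.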

All Glean pings contain built-in metrics in the ping_info and client_info sections.

This ping contains no metrics.

glean_client_info

All Glean pings contain built-in metrics in the ping_info and client_info sections.

In addition to those built-in metrics, the following metrics are added to the ping:

| Name | Type | Description | Data reviews | Extras | Expiration | Data Sensitivity |
|---|---|---|---|---|---|---|
| glean.internal.metrics.attribution.campaign | string | The optional attribution campaign. Similar to or the same as UTM campaign. | Bug 1955428 | | never | 1 |
| glean.internal.metrics.attribution.content | string | The optional attribution content. Similar to or the same as UTM content. | Bug 1955428 | | never | 1 |
| glean.internal.metrics.attribution.medium | string | The optional attribution medium. Similar to or the same as UTM medium. | Bug 1955428 | | never | 1 |
| glean.internal.metrics.attribution.source | string | The optional attribution source. Similar to or the same as UTM source. | Bug 1955428 | | never | 1 |
| glean.internal.metrics.attribution.term | string | The optional attribution term. Similar to or the same as UTM term. | Bug 1955428 | | never | 1 |
| glean.internal.metrics.distribution.name | string | The optional distribution name. Can be a partner name, or a distribution configuration preset. | Bug 1955428 | | never | 1 |
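Since the attribution metrics are described as similar to UTM parameters, a minimal Python sketch of how an application might derive them from a landing-page URL is shown below. The function name and the one-to-one UTM mapping are assumptions; handing the values to Glean goes through each SDK's own API and is not shown. glean.internal.metrics.distribution.name has no UTM counterpart and is not handled.

```python
from typing import Dict
from urllib.parse import parse_qs, urlparse

# Assumed mapping from UTM query parameters to the attribution metrics above.
UTM_TO_METRIC = {
    "utm_source": "glean.internal.metrics.attribution.source",
    "utm_medium": "glean.internal.metrics.attribution.medium",
    "utm_campaign": "glean.internal.metrics.attribution.campaign",
    "utm_term": "glean.internal.metrics.attribution.term",
    "utm_content": "glean.internal.metrics.attribution.content",
}


def attribution_from_url(url: str) -> Dict[str, str]:
    """Extract UTM parameters from a landing URL; absent parameters are omitted."""
    query = parse_qs(urlparse(url).query)
    result: Dict[str, str] = {}
    for param, metric in UTM_TO_METRIC.items():
        values = query.get(param)
        if values:
            result[metric] = values[0]  # keep the first value if a parameter repeats
    return result
```

Omitting absent parameters matches the metrics being optional: an unset metric is simply not reported.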

health

The purpose of the health ping is to transport all of the health metric information. The health ping is automatically sent, with a reason of pre_init, when the application calls Glean's initialize, before any operations are done on the data path.

Previously, a second ping with reason post_init was sent. This reason was removed in Glean v66.2.0.

This ping includes the client id.

Data reviews for this ping:

Bugs related to this ping:

Reasons this ping may be sent:

- pre_init: The ping was submitted before Glean initialization, with the state of Glean's data directories prior to rkv opening files on the data path.
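The structure described for glean.health.data_directory_info can be illustrated by a Python sketch that walks the three data subdirectories with pathlib. It is not the FOG or glean-core implementation: `data_directory_info` is a hypothetical name, and reading creation time from `st_birthtime` (falling back to 0 where the platform does not expose it) is an assumption.

```python
from pathlib import Path
from typing import Any, Dict, List, Tuple

# Subdirectories listed in the metric description.
DATA_SUBDIRS = ("db", "events", "pending_pings")


def _times(path: Path) -> Tuple[int, int]:
    """(created, modified) in whole seconds since the epoch."""
    st = path.stat()
    # st_birthtime is not available on every platform; fall back to 0 as the description allows.
    created = int(getattr(st, "st_birthtime", 0))
    return created, int(st.st_mtime)


def data_directory_info(data_dir: Path) -> List[Dict[str, Any]]:
    """Hypothetical collector producing the shape described for data_directory_info."""
    result: List[Dict[str, Any]] = []
    for name in DATA_SUBDIRS:
        directory = data_dir / name
        entry: Dict[str, Any] = {
            "dir_name": name,
            "dir_exists": directory.is_dir(),
            "dir_created": None,  # stays None when the directory is missing
            "dir_modified": None,
            "file_count": 0,
            "error_message": None,
            "files": [],
        }
        if entry["dir_exists"]:
            try:
                entry["dir_created"], entry["dir_modified"] = _times(directory)
                for path in sorted(p for p in directory.iterdir() if p.is_file()):
                    file_entry: Dict[str, Any] = {
                        "file_name": path.name,
                        "file_created": None,
                        "file_modified": None,
                        "file_size": 0,
                        "error_message": None,
                    }
                    try:
                        file_entry["file_created"], file_entry["file_modified"] = _times(path)
                        file_entry["file_size"] = path.stat().st_size
                    except OSError as err:
                        file_entry["error_message"] = str(err)
                    entry["files"].append(file_entry)
                entry["file_count"] = len(entry["files"])
            except OSError as err:
                entry["error_message"] = str(err)
        result.append(entry)
    return result
```

Errors are captured per directory and per file rather than raised, so one unreadable file does not hide the state of the others.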

All Glean pings contain built-in metrics in the ping_info and client_info sections.

In addition to those built-in metrics, the following metrics are added to the ping:

| Name | Type | Description | Data reviews | Extras | Expiration | Data Sensitivity |
|---|---|---|---|---|---|---|
| glean.database.rkv_load_error | string | The error encountered while loading the RKV database, if any. | Bug 1815253 | | never | 1 |
| glean.database.size | memory_distribution | The size of the database file at startup. | Bug 1656589 | | never | 1 |
| glean.database.write_time | timing_distribution | The time it takes to commit a write to the Glean database. | Bug 1896193 | | never | 1 |
| glean.error.event_timestamp_clamped | counter | The number of times we had to clamp an event timestamp for exceeding the range of a signed 64-bit integer (9223372036854775807). | Bug 1873482 | | 2026-06-30 | 1 |
| glean.error.io | counter | The number of times we encountered an IO error when writing a pending ping to disk. | Bug 1686233 | | never | 1 |
| glean.error.preinit_tasks_overflow | counter | The number of tasks that overflowed the pre-initialization buffer. Only sent if the buffer ever overflows. In version 0 this reported the total number of tasks enqueued. | Bug 1609482 | | never | 1 |
| glean.health.data_directory_info | object | Information about the data directories and files used by FOG. The structure is an array of objects, one per directory, each with the following properties: dir_name, the name of the directory relative to the FOG data directory (only "db", "events" and "pending_pings" are expected); dir_exists, whether the directory exists (this should only be false on the first run of FOG, or if the directory was deleted); dir_created and dir_modified, the creation and last modification times of the directory in seconds since the Unix epoch (null if the directory does not exist, 0 if the time cannot be determined); file_count, the number of files in the directory (0 if the directory does not exist); error_message, a brief description of any error encountered while accessing the directory, or null if there was none; and files, an array of objects, each containing file_name (data.safe.bin, events.safe.bin, or a UUID representing the doc-id of a pending ping), file_created and file_modified (in seconds since the epoch; null if the file does not exist, 0 if the time cannot be determined), file_size (the size in bytes; this can be just about any size, and 0 means the file is empty), and error_message (a brief description of any error encountered while accessing the file, or null if there was none). | Bug 1982711 | | never | 1 |
| glean.health.exception_state | string | An exceptional state was detected upon trying to load the database. Valid options are: empty-db, regen-db, c0ffee-in-db, client-id-mismatch. | Bug 1994757 | | never | 1 |
| glean.health.file_read_error | labeled_counter | Count of different errors that happened when trying to read the client_id.txt file from disk. | Bug 1994757 | | never | 1 |
| glean.health.file_write_error | labeled_counter | Count of different errors that happened when trying to write the client_id.txt file to disk. | Bug 1994757 | | never | 1 |
| glean.health.init_count | counter | A running count of how many times the Glean SDK has been initialized. | Review 1 | | never | 1 |
| glean.health.recovered_client_id | uuid | A client_id recovered from a client_id.txt file on disk. Only expected to have a value for the exception states empty-db, c0ffee-in-db and client-id-mismatch. See exception_state for the exception states in which this can happen. | Bug 1994757 | | never | 1 |
| glean.upload.deleted_pings_after_quota_hit | counter | The number of pings deleted after the quota for the size of the pending pings directory, or for the number of files in it, was hit. Because the quota is only calculated for the pending pings directory, and deletion-request pings live in a different directory, deletion-request pings are never deleted. | Bug 1601550 | | never | 1 |
| glean.upload.discarded_exceeding_pings_size | memory_distribution | The size of pings that exceeded the maximum ping size allowed for upload. | Bug 1597761 | | never | 1 |
| glean.upload.in_flight_pings_dropped | counter | The number of pings that were dropped because they were found to be already in flight. | Bug 1816401 | | never | 1 |
| glean.upload.missing_send_ids | counter | The number of ping upload responses that were not recorded as a success or failure (in glean.upload.send_success or glean.upload.send_failure, respectively) because of an inconsistency in internal bookkeeping. | Bug 1816400 | | never | 1 |
| glean.upload.pending_pings | counter | The total number of pending pings at startup. This does not include deletion-request pings. | Bug 1665041 | | never | 1 |
| glean.upload.pending_pings_directory_size | memory_distribution | The size of the pending pings directory upon initialization of Glean. This does not include the size of the deletion-request pings directory. | Bug 1601550 | | never | 1 |
| glean.upload.ping_upload_failure | labeled_counter | Counts the number of ping upload failures, by type of failure. This includes failures for all ping types, though the counts appear in the next successfully sent metrics ping. | Bug 1589124 | | never | 1 |
| glean.upload.send_failure | timing_distribution | Time needed for a failed send of a ping to the servers and getting a reply back. | Bug 1814592 | | never | 1 |
| glean.upload.send_success | timing_distribution | Time needed for a successful send of a ping to the servers and getting a reply back. | Bug 1814592 | | never | 1 |
| glean.validation.pings_submitted | labeled_counter | A count of the built-in pings submitted, by ping type. This metric appears in both the metrics and baseline pings. On the metrics ping, the counts include the number of pings sent since the last metrics ping (including the last metrics ping); on the baseline ping, the counts include the number of pings sent since the last baseline ping (including the last baseline ping). Note: Previously this also recorded the number of submitted custom pings. Now it only records counts for the Glean built-in pings. | Bug 1586764 | | never | 1 |
| glean.validation.shutdown_dispatcher_wait | timing_distribution | Time waited for the dispatcher to unblock during shutdown. Most samples are expected to be below the 10s timeout used. | Bug 1828066 | | never | 1 |
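The quota behaviour counted by glean.upload.deleted_pings_after_quota_hit can be sketched in Python as follows. This model assumes that the newest pending pings are kept and that, once either limit would be exceeded, that ping and every older one are deleted. `PendingPing`, `enforce_quota`, and the limit parameters are hypothetical, not Glean's configured values. Deletion-request pings are never passed in, matching the fact that they live outside the pending pings directory.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PendingPing:
    doc_id: str
    size_bytes: int
    modified: float  # last modification time, seconds since the epoch


def enforce_quota(pings: List[PendingPing], max_total_bytes: int,
                  max_count: int) -> Tuple[List[PendingPing], List[PendingPing]]:
    """Split pending pings into (kept, deleted), walking from newest to oldest.

    len(deleted) is what would be added to glean.upload.deleted_pings_after_quota_hit.
    Deletion-request pings are stored elsewhere, so they never appear in `pings`.
    """
    kept: List[PendingPing] = []
    deleted: List[PendingPing] = []
    total = 0
    over_quota = False
    for ping in sorted(pings, key=lambda p: p.modified, reverse=True):
        if not over_quota and (len(kept) >= max_count
                               or total + ping.size_bytes > max_total_bytes):
            # First ping that would break a limit: it and every older ping are deleted.
            over_quota = True
        if over_quota:
            deleted.append(ping)
        else:
            kept.append(ping)
            total += ping.size_bytes
    return kept, deleted
```

Deleting everything older than the first ping that breaks a limit, instead of packing smaller older pings into the remaining space, keeps the surviving set a contiguous run of the most recent data.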